Virtana expands MCP Server to bring full-stack enterprise context to AI agents

Virtana announced the latest version of its Model Context Protocol (MCP) Server, bringing full-stack enterprise visibility directly to AI agents and LLMs so machines can understand enterprise operations as complete systems rather than isolated signals.

Opening the Virtana platform to a broad ecosystem of AI agents, automation systems, and large language models (LLMs), such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and Microsoft Copilot, lets AI make and act on decisions across the full stack of end-to-end enterprise environments. The goal is to move observability beyond fragmented monitoring toward autonomous, self-managing environments.

Built on Virtana’s patented full-stack optimization architecture, the platform powers a system dependency graph: a dynamic map that captures how applications, services, infrastructure, and AI workloads interact across the enterprise.
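To make the idea of a system dependency graph concrete, the sketch below models components as nodes tagged with a layer and a region, records directed "depends on" edges, and walks those edges in reverse to find everything that transitively depends on a given component. It is a minimal illustration under stated assumptions: the `Component` schema, the `DependencyGraph` class, its methods, and all component names are hypothetical, and Virtana has not published its graph model, so this is not its implementation.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """A node in a toy dependency graph (HYPOTHETICAL schema, not Virtana's)."""
    name: str
    layer: str   # e.g. "application", "service", "orchestration", "storage"
    region: str


class DependencyGraph:
    """Directed graph in which an edge A -> B means "A depends on B"."""

    def __init__(self) -> None:
        self.components: dict[str, Component] = {}
        # Only reverse edges (provider -> consumers) are stored, since impact
        # queries walk from a failing provider out to the things that use it.
        self.dependents: dict[str, set[str]] = {}

    def add(self, component: Component) -> None:
        self.components[component.name] = component
        self.dependents.setdefault(component.name, set())

    def link(self, consumer: str, provider: str) -> None:
        """Record that `consumer` depends on `provider` (both must already be added)."""
        self.dependents[provider].add(consumer)

    def affected_by(self, name: str) -> set[str]:
        """Everything that transitively depends on `name`, found by BFS over reverse edges."""
        seen: set[str] = set()
        queue = deque([name])
        while queue:
            for consumer in self.dependents[queue.popleft()]:
                if consumer not in seen and consumer != name:
                    seen.add(consumer)
                    queue.append(consumer)
        return seen

    def affected_in_layer(self, layer: str, region: str, target_layer: str) -> set[str]:
        """Components in `target_layer` that depend on any `layer` component in `region`."""
        hit: set[str] = set()
        for comp in self.components.values():
            if comp.layer == layer and comp.region == region:
                hit |= self.affected_by(comp.name)
        return {n for n in hit if self.components[n].layer == target_layer}


g = DependencyGraph()
for c in [
    Component("checkout", "application", "eu-west"),
    Component("orders-svc", "service", "eu-west"),
    Component("search-svc", "service", "us-east"),
    Component("node-1", "orchestration", "eu-west"),
    Component("vol-a", "storage", "eu-west"),
    Component("vol-b", "storage", "us-east"),
]:
    g.add(c)
g.link("checkout", "orders-svc")
g.link("orders-svc", "node-1")
g.link("node-1", "vol-a")
g.link("search-svc", "vol-b")

print(sorted(g.affected_by("vol-a")))                        # ['checkout', 'node-1', 'orders-svc']
print(g.affected_in_layer("storage", "eu-west", "service"))  # {'orders-svc'}
```

Storing reverse edges keeps impact questions, such as which services sit on top of storage in a given region, to a single breadth-first walk per starting component; a production system would also need forward edges, time-varying topology, and telemetry attached to each node.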

Virtana delivers observability as infrastructure for AI-driven operations

Enterprises are rapidly adopting MCP across their infrastructure as the standard protocol for integrating AI into their systems. Observability, however, has remained largely confined to fragmented, human-facing dashboards and to APIs that were not designed for autonomous reasoning.

“The shift to AI-driven operations fundamentally changes what observability must deliver. It is no longer enough to surface signals; platforms must provide a structured understanding of the system itself,” said Amitkumar Rathi, Chief Product Officer at Virtana.

“Virtana builds a unified dependency graph that derives operational context across hybrid environments, and the MCP Server exposes that model as a standard interface for AI agents and LLMs. This enables a new operational paradigm where AI systems can analyze, prioritize, and act across the full stack based on real system relationships rather than isolated alerts,” Rathi added.

Unlike legacy observability architectures that divide monitoring across specialized domains such as infrastructure, networking, application performance, and cloud telemetry, Virtana normalizes operational telemetry into a unified system dependency graph. This dependency-aware representation of distributed applications is surfaced through MCP, enabling AI agents powered by leading large language models, including ChatGPT, Claude, and Gemini, to interact directly with structured operational context.

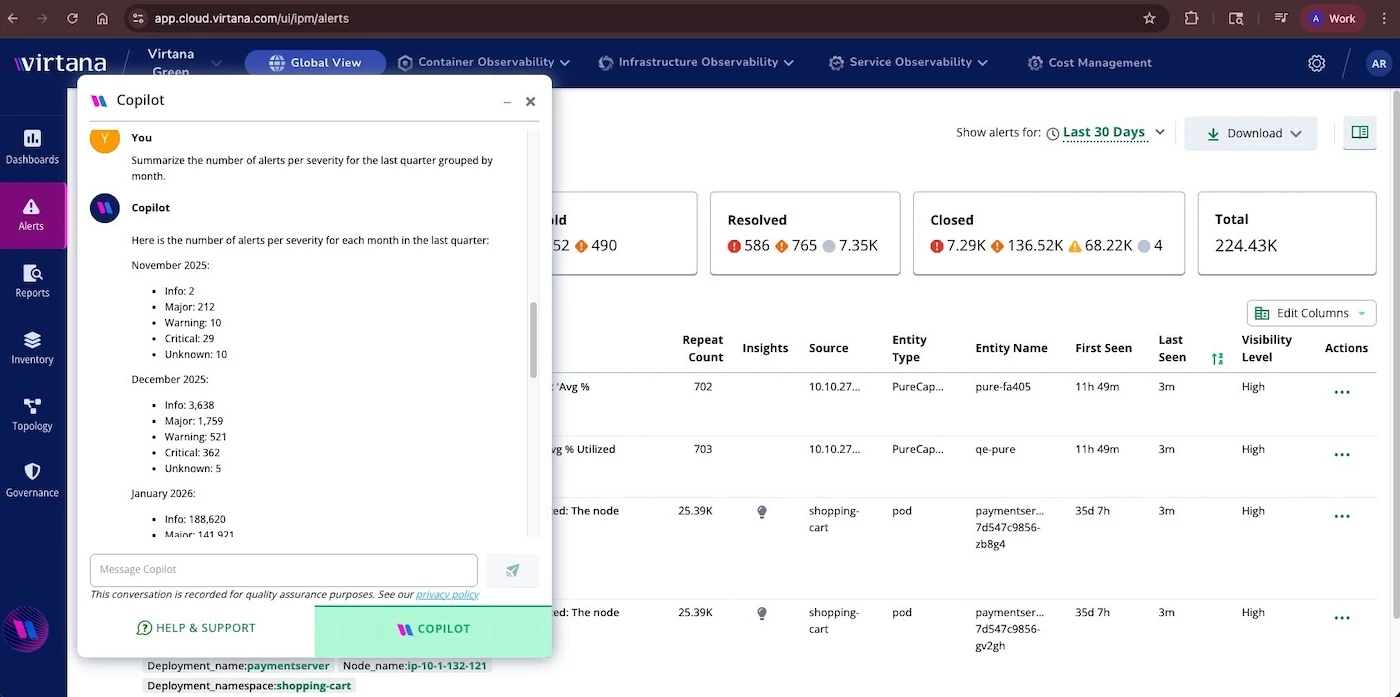

Rather than layering conversational interfaces onto legacy monitoring stacks, Virtana treats natural language as an expression of intent. Virtana’s MCP Server translates that intent into structured interactions with the dependency graph, allowing AI agents to retrieve grounded data, analyze relationships, and reason across the full system.
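MCP messages are JSON-RPC 2.0 objects: an agent discovers a server's tools with a `tools/list` request and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The sketch below shows how an agent might turn a natural-language question into that kind of structured request and read the text items back out of a tool result. The tool name `query_dependency_graph`, its argument fields, and the sample response are hypothetical placeholders rather than Virtana's published interface, and the transport layer (stdio or HTTP) is omitted.

```python
import itertools
import json
from typing import Any, Optional

# JSON-RPC ids only need to be unique within a session; a counter is enough here.
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses on the wire."""
    request: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def tool_call(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build an MCP `tools/call` request for the named tool."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def text_content(response: dict[str, Any]) -> list[str]:
    """Collect the text items from a `tools/call` result, raising if the tool reported an error."""
    result = response.get("result", {})
    texts = [item["text"] for item in result.get("content", []) if item.get("type") == "text"]
    if result.get("isError"):
        raise RuntimeError("tool error: " + "; ".join(texts))
    return texts


# 1. Discover the tools the server exposes.
list_req = jsonrpc_request("tools/list")

# 2. Map the intent "which services are affected by storage latency in region X"
#    onto a structured call. HYPOTHETICAL tool name and argument schema.
call_req = tool_call(
    "query_dependency_graph",
    {"symptom": "latency", "source_layer": "storage", "region": "X", "target_layer": "service"},
)
print(json.dumps(call_req, indent=2))

# 3. Read a made-up response shaped like an MCP tool result.
sample_response = {
    "jsonrpc": "2.0",
    "id": call_req["id"],
    "result": {"content": [{"type": "text", "text": "orders-svc"}], "isError": False},
}
print(text_content(sample_response))  # ['orders-svc']
```

In practice the agent would choose the tool and fill in its arguments from the input schema returned by `tools/list`, which is what lets any MCP-compatible model use the server without pre-built queries.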

The Virtana MCP Server enables AI agents to:

- Query full-stack context in natural language: AI agents and LLMs, including ChatGPT, Claude, Gemini, Microsoft Copilot, or any other compatible model, can ask questions such as “Which services are affected by storage latency in region X?” and receive structured responses that span infrastructure, orchestration, and application layers. No pre-built queries or domain expertise are required, so unified observability data can be explored in natural language.

- Perform autonomous root cause analysis and dependency reasoning: Virtana’s system dependency graph gives AI agents live topology awareness, so they can autonomously correlate signals, dependencies, and historical patterns to identify probable root causes and prioritize actions based on downstream impact rather than isolated symptoms.

- Analyze system behavior holistically: AI agents can correlate signals across network, infrastructure, and application layers to understand how distributed systems interact across hybrid and multi-cloud environments. Context-aware enrichment based on learned system behavior closes the visibility gaps left by fragmented observability tools.

- Recommend optimizations based on dependency-aware understanding: Drawing on Virtana’s patented optimization architecture, AI agents can use dependency-aware insights to recommend actions grounded in real system structure rather than isolated metrics.

- Drive automation through open execution frameworks: Automation platforms such as Ansible, Terraform, and other orchestration tools can connect through Virtana’s MCP Server to execute workflows based on AI-generated insights and decisions.
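Prioritizing by downstream impact rather than isolated symptoms can be illustrated with one simple, generic graph heuristic: among the components currently alerting, keep only those with no other alerting component upstream of them, then rank those candidates by how many other alerts they would explain and by their blast radius. This heuristic, the function names, and the topology are assumptions chosen for illustration; it is not Virtana's root cause algorithm, which would also weigh historical patterns and signal correlation.

```python
from collections import deque


def downstream(dependents: dict[str, list[str]], start: str) -> set[str]:
    """All components that transitively depend on `start` (excluding `start` itself)."""
    seen: set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for consumer in dependents.get(node, []):
            if consumer not in seen and consumer != start:
                seen.add(consumer)
                queue.append(consumer)
    return seen


def rank_root_causes(
    depends_on: dict[str, list[str]], alerting: set[str]
) -> list[tuple[str, int, int]]:
    """Rank alerting components as probable root causes (HYPOTHETICAL heuristic).

    Returns (component, alerts_explained, blast_radius) tuples, best first.
    """
    # Invert "A depends on B" edges so we can walk from a provider to its consumers.
    dependents: dict[str, list[str]] = {}
    for consumer, providers in depends_on.items():
        for provider in providers:
            dependents.setdefault(provider, []).append(consumer)

    impact = {c: downstream(dependents, c) for c in alerting}
    # An alert that sits downstream of another alert is treated as a symptom.
    candidates = [
        c for c in alerting
        if not any(c in impact[other] for other in alerting if other != c)
    ]
    ranked = [(c, len(impact[c] & alerting), len(impact[c])) for c in candidates]
    # Most alerts explained first, then largest blast radius, then name for stable output.
    ranked.sort(key=lambda t: (-t[1], -t[2], t[0]))
    return ranked


depends_on = {
    "checkout": ["orders-svc", "payments-svc"],
    "orders-svc": ["node-1"],
    "payments-svc": ["node-2"],
    "node-1": ["vol-a"],
    "node-2": ["vol-b"],
}
alerting = {"checkout", "orders-svc", "vol-a", "vol-b"}
print(rank_root_causes(depends_on, alerting))  # [('vol-a', 2, 3), ('vol-b', 1, 3)]
```

Here the `checkout` and `orders-svc` alerts drop out as symptoms because storage volumes they depend on are also alerting, and `vol-a` ranks first because it explains more of the remaining alerts.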

With the Virtana MCP Server, AI agents understand enterprise-wide system dependencies, from infrastructure to applications, moving beyond reactive monitoring to deliver intelligent recommendations based on real operational context.