GPT needs to be rewired for security

LLMs and agentic systems already shine at everyday productivity, including transcribing and summarizing meetings, extracting action items, prioritizing critical emails, and even planning travel. But in the SOC (where mistakes have real cost), today’s models stumble on work that demands high precision and consistent execution across massive, real-time data streams. Until we close this reliability gap at scale, LLMs alone won’t automate the majority of SOC tasks.

Humans excel at framing ambiguous problems, making risk-aware judgments, and applying domain intuition, especially when signals are weak, conflicting, or novel.

Machines excel at processing high-volume, high-velocity, unstructured data with low marginal cost. They don’t tire, forget, or drift, which makes them an ideal complement for threat detection. However, triage has stayed human because machines (including LLMs) cannot truly substitute for a human in context assembly, hypothesis generation, and business-risk judgment.

We’re still in the early innings of SOC automation. To keep pace as attackers weaponize AI-driven automation, we must reach an equilibrium where machines thwart most attacks at machine speed. Without that balance, defenders fall behind fast.

What breaks LLMs inside a SOC

To shift toward machine-speed defense, the platform must overcome the current limits of LLMs:

1. Real-time ingestion at scale

Continuous processing of fast-accumulating data (logs, EDR, email, cloud resources, identity, code, files, and more) without lag or loss.

2. Large, durable context

Retain and retrieve very large, long-lived knowledge (asset inventories, baselines, case history) to correctly interpret actions and sequences over time.

3. Low-latency, low-cost execution

Perform filtering, correlation, enrichment, and reasoning at the rate of incoming data, and do it at very low cost, so it scales with the enterprise.

4. Deterministic logic

Follow a chain of thought over large datasets to arrive at results that are repeatable, explainable, and understandable (not fickle or opaque).

5. Consistency of reasoning

Deliver calibrated, repeatable logic whose run-to-run spread is no wider than the margin of disagreement between two human analysts, not the volatility of a generative model.
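One way to make the consistency requirement measurable is to run the same alert through the model several times and compare how often the verdicts agree against how often two human analysts agree on the same kind of case. The sketch below is illustrative only: the function names, the verdict labels, and the 0.8 human agreement baseline are assumptions, not figures from any real SOC.

```python
from itertools import combinations


def pairwise_agreement(verdicts):
    """Fraction of verdict pairs that match; 1.0 means fully repeatable."""
    pairs = list(combinations(verdicts, 2))
    if not pairs:
        return 1.0  # zero or one verdict: nothing to disagree with
    return sum(a == b for a, b in pairs) / len(pairs)


def is_consistent_enough(model_verdicts, human_agreement):
    """True if repeated model runs agree at least as often as two humans do."""
    return pairwise_agreement(model_verdicts) >= human_agreement


# Hypothetical data: five runs of the same alert through the same model.
runs = ["malicious", "malicious", "malicious", "malicious", "benign"]
HUMAN_BASELINE = 0.8  # assumed analyst-to-analyst agreement rate

agreement = pairwise_agreement(runs)
acceptable = is_consistent_enough(runs, HUMAN_BASELINE)
print(f"agreement={agreement:.2f} acceptable={acceptable}")
```

A single dissenting run out of five already drops pairwise agreement well below the assumed human baseline, which is why volatility that looks small per run can still disqualify a model for triage.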

What fixes it: Rethinking the stack

The path forward isn’t “more prompts.” It’s a new type of model that solves these problems and makes LLMs suitable for the SOC use case.

Anyone who has done useful work with AI/ML knows that models need high-quality data. We must move beyond log-centric SIEMs, because high-quality threat detection and investigation require far more data than logs can provide. Consider a sensitive action, say a change to file permissions, made from an unusual location in a business-critical application. The event record isn’t enough; we need to know the file type, its labels, the role and permissions of the user who made the change, who created the file, and more.

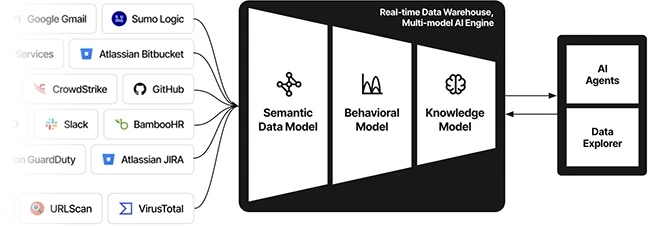

To enable that, we need a real-time data warehouse that ingests and correlates not just logs but also identities, configurations, code, files, and threat intelligence. On top of this warehouse, we should run an AI engine capable of processing all this real-time data with human-grade reasoning.
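As a rough illustration of what correlating an event with identity and file context means for the permission-change example, the sketch below joins a raw event against two lookup tables. Everything here is hypothetical: the in-memory dictionaries stand in for warehouse tables, and the field names, IDs, and values are invented for the example.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for warehouse tables, keyed by ID.
IDENTITIES = {
    "u-17": {"role": "contractor", "permissions": ["read"]},
}
FILES = {
    "f-42": {"type": "spreadsheet", "labels": ["confidential"], "created_by": "u-03"},
}


@dataclass
class PermissionChangeEvent:
    """The bare event record, as a log line alone would give it to us."""
    user_id: str
    file_id: str
    location: str
    new_permission: str


def enrich(event):
    """Join the raw event with the identity and file context around it."""
    user = IDENTITIES.get(event.user_id, {})
    file_info = FILES.get(event.file_id, {})
    return {
        "event": event,
        "user_role": user.get("role"),
        "user_permissions": user.get("permissions", []),
        "file_type": file_info.get("type"),
        "file_labels": file_info.get("labels", []),
        "file_creator": file_info.get("created_by"),
    }


event = PermissionChangeEvent("u-17", "f-42", "unfamiliar-country", "public-link")
context = enrich(event)
print(context["user_role"], context["file_labels"])
```

The raw event only says that a permission changed. The enriched record says that a read-only contractor made a confidential spreadsheet public, which is the level of detail a detection or triage decision actually needs.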

One approach is to build the AI engine as a multi-model pipeline that combines semantic reasoning, behavioral analytics, and large language models. Semantic understanding and statistical ML handle the heavy lifting on high-volume data via low-latency pipelines, narrowing the slice that LLMs must correlate and reason over. The result: reliable reasoning at scale without blowing up latency or cost.
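The tiering idea can be sketched in a few lines: a cheap semantic filter drops irrelevant events, a statistical check drops events that match the user's baseline, and only the remainder reaches the expensive LLM step. This is a minimal sketch under stated assumptions, not a description of any product's pipeline. The action names, the z-score threshold, the event fields, and the `llm_triage` stub (which makes no real model call) are all hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical set of actions worth a closer look.
SENSITIVE_ACTIONS = {"permission_change", "role_grant", "key_export"}


def semantic_filter(event):
    """Stage 1: keep only events whose action is security-relevant."""
    return event["action"] in SENSITIVE_ACTIONS


def anomaly_score(value, history):
    """Stage 2: z-score of the observed count against the user's baseline."""
    sigma = pstdev(history)
    if sigma == 0:
        return 0.0
    return abs(value - mean(history)) / sigma


def llm_triage(event):
    """Stage 3: placeholder for an LLM call; a real system would go here."""
    return f"needs review: {event['user']} {event['action']}"


def run_pipeline(events, baselines, threshold=3.0):
    """Send only events that survive both cheap stages to the LLM step."""
    escalated = []
    for event in events:
        if not semantic_filter(event):
            continue
        history = baselines.get(event["user"], [])
        # Users with no baseline are escalated rather than silently dropped.
        if history and anomaly_score(event["count"], history) < threshold:
            continue
        escalated.append(llm_triage(event))
    return escalated


# Hypothetical hourly action counts per user.
baselines = {"alice": [1, 2, 3, 2, 2], "bob": [1, 2, 1, 2, 1]}
events = [
    {"user": "alice", "action": "login", "count": 1},
    {"user": "alice", "action": "permission_change", "count": 2},
    {"user": "bob", "action": "permission_change", "count": 40},
]

results = run_pipeline(events, baselines)
print(results)
```

In this toy run, three events enter and only one reaches the LLM stage. The same shape is what keeps latency and cost bounded at real volumes: the expensive model sees a small, pre-qualified slice.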

This approach has another advantage. The real-time data warehouse is critical for continuously training the AI engine to detect threats and triage alerts, but it can also serve as a long-term store for visibility and forensics. That effectively replaces the legacy SIEM with a much more modern data platform and opens the door to the SIEMless future of an AI-driven SOC!

How will this change the SOC?

1. Threat detection (detection engineers)

Detection engineering is a rare, specialized role in most organizations. It evolves from writing brittle rules and tuning UEBA to designing adaptive systems. Instead of crafting detections for individual indicators or signatures, engineers steer AI-driven models that continuously correlate signals across logs, identities, configurations, and code repositories. The focus shifts from rule authoring to threat modeling and feedback loops that keep detections accurate over time.

2. Alert triage (SOC analysts / Tier-1 & 2)

Triage has long been dominated by repetitive work: enrichment, correlation, noise reduction, and chasing users in IT or DevOps for confirmation. With our advanced AI engine plus human oversight, most of this work becomes automatable. Our triage bots (Exabots) work in tandem with human analysts to dramatically increase productivity. Over time, staffing models change: less dependence on large Tier-1/Tier-2 teams or outsourced MSSPs for 24/7 coverage, lower overall costs, and the ability to detect and triage many more alerts.

3. Threat hunters

Hunting is where human intuition matters most, but it’s often throttled by slow queries, fragmented tools, and incomplete data. With a modern data architecture and the AI engine above, hunters can query correlated, context-rich information in real time, assisted by automated agents that surface anomalies and assemble timelines. Instead of spending hours gathering evidence, hunters program agents to test hypotheses, run adversary emulation, and pursue weak signals creatively, shifting from reactive casework to proactive defense.

Where this goes next

We’re confident that a modern data architecture plus an evolved AI model can overcome many of today’s LLM limitations and progressively reduce the human oversight needed to get dependable outcomes from agentic systems. This matters most for mid-size companies, which must deliver enterprise-grade security without enterprise-size budgets or headcount. Done right, machines democratize security, letting many organizations leapfrog legacy architectures and their constraints.

In short: LLMs are a breakthrough, but security needs a modified brain, one built for real-time ingestion, durable context, deterministic logic, and consistent reasoning at scale. That’s what we are working on at Exaforce.