Nudge Security expands platform with new AI governance capabilities

Nudge Security announced an expansion of its platform to address the need for organizations to mitigate AI data security risks while supporting workforce AI use.

New capabilities include:

- AI conversation monitoring: Detect sensitive data shared via file uploads and conversations with AI chatbots including ChatGPT, Gemini, Microsoft Copilot, and Perplexity

- Policy enforcement via the browser: Delivery of guardrails to employees as they interact with AI tools, both to educate them on the organization’s acceptable use policy and to enforce it

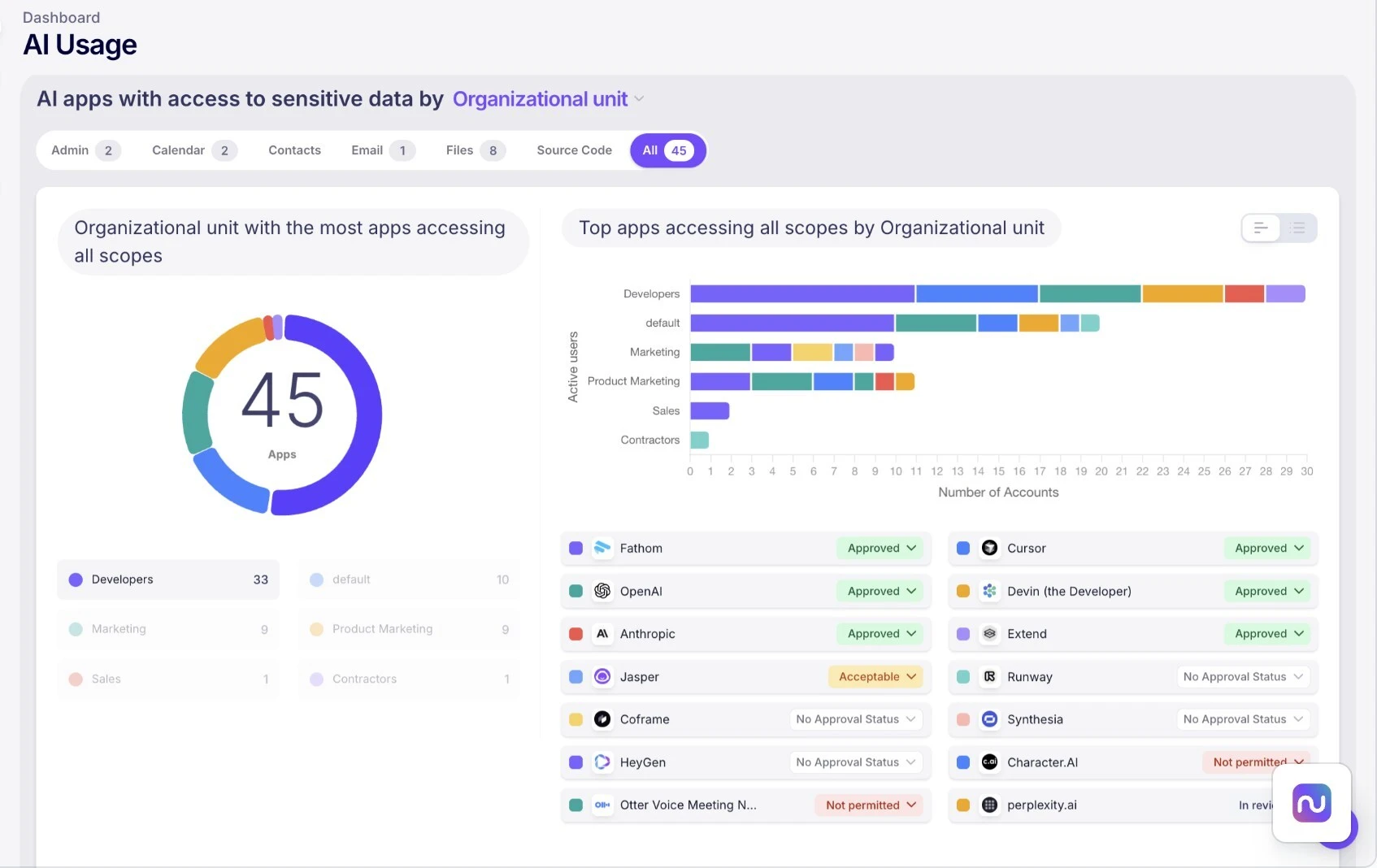

- AI usage monitoring: See trends of Daily Active Users (DAUs) by department, individual user, and specific AI tools (approved or unsanctioned) to quickly respond to business needs and potential risks

- Risky integration detection: Automated surfacing of data-sharing integrations and OAuth/API grants that provide AI tools access to sensitive corporate data

- Data training policy summaries: Condensed summaries of AI and SaaS vendors’ data training policies that surface how each vendor uses, retains, and handles data

- Playbooks to scale ongoing governance: Automated workflows simplify tracking Acceptable Use Policy (AUP) acknowledgements, revoking risky data-sharing permissions, orchestrating account removals, and more

These capabilities build on the AI security and governance functions that Nudge Security has offered since 2023, including Day One discovery of all AI apps, users, and integrations, visibility into AI dependencies in the SaaS supply chain, and security profiles for thousands of AI providers. Nudge Security helps customers advance AI projects while maintaining security controls and policy compliance.

“As part of Notion’s commitment to secure AI adoption, we’ve built a governance framework that requires visibility into the tools our teams explore. Nudge Security provides this visibility and gives our compliance and legal teams aggregated data on emerging AI tools, which we then evaluate against our established privacy, security, and compliance requirements,” said JJ Macias, IT Systems Engineering Manager at Notion.

AI risks extend across the SaaS ecosystem

The explosion of AI, particularly AI embedded throughout the SaaS ecosystem, has introduced security challenges as organizations now grapple with hundreds of AI-enabled applications, complex networks of integrations, and non-human identities with access to sensitive data across their environment.

AI usage data from Nudge Security shows:

- Over 1,500 unique AI tools discovered across a variety of organizations

- An average of 39 unique AI tools per organization

- More than 50% of SaaS apps have a major LLM provider in their data subprocessor list

- An average of 70 OAuth grants per employee, many of which enable data sharing

Nudge Security is an AI security solution that provides visibility and control across the SaaS ecosystem, not only AI tools. This approach reflects the reality that AI data security risks appear wherever AI interacts with SaaS: in AI features embedded in productivity apps, in MCP server integrations that create direct pipelines to AI models, and in OAuth grants that allow ongoing data access long after they are first authorized.

“The risk isn’t just in the AI tool itself – it’s in the access pathways employees create without considering the security implications,” said Jaime Blasco, CTO of Nudge Security. “A single OAuth grant can give an AI vendor continuous access to your organization’s most sensitive data. Nudge Security makes these integrations visible and manageable for the first time.”

Nudge Security has built a solution that engages the workforce as active participants in the governance process and delivers guardrails at the point of risk, when and where employees make decisions that impact the security posture of their organization.