EU’s Chat Control could put government monitoring inside robots

Cybersecurity debates around surveillance usually stay inside screens. A new academic study argues that this boundary no longer holds when communication laws extend into robots that speak, listen, and move among people.

Researchers Neziha Akalin and Alberto Giaretta examine the European Union’s proposed Chat Control regulation and its unintended impact on human–robot interaction.

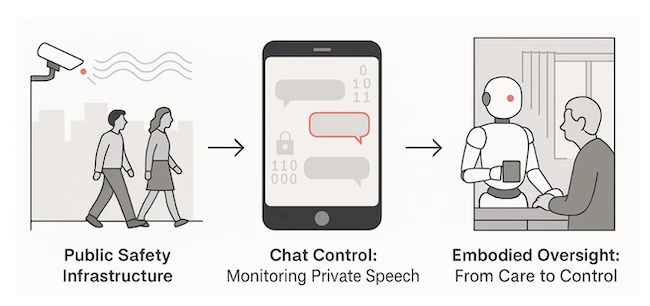

The continuum of surveillance, from watching public spaces, to listening in private communications, to acting within embodied environments.

A brief look at the Chat Control proposal

The EU Chat Control proposal aims to combat online child sexual abuse. Early versions allowed authorities to require communication providers to scan messages, including encrypted content. The framework covers text, images, and video. After heavy criticism, the Council revised the proposal in late 2025. Explicit scanning mandates were removed, and the system shifted toward risk assessments and mitigation duties.

The authors argue that this change preserves strong incentives to monitor. Providers remain responsible for identifying and reducing risk. Residual risk cannot reach zero because detection systems produce errors. That creates a continuing obligation to adjust controls, which encourages broad monitoring to demonstrate compliance.
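A back-of-the-envelope calculation helps show why detector errors keep residual risk above zero. The sketch below is only an illustration: the message volume, prevalence, and error rates are hypothetical assumptions, not figures from the paper or the proposal, and `scan_outcomes` and `precision` are names invented for this example.

```python
def scan_outcomes(harmful, benign, true_positive_rate, false_positive_rate):
    """Expected alert counts for a scanner with the given error rates."""
    true_alerts = harmful * true_positive_rate    # harmful messages flagged
    missed = harmful - true_alerts                # harmful messages not flagged
    false_alerts = benign * false_positive_rate   # benign messages flagged
    return true_alerts, false_alerts, missed


def precision(true_alerts, false_alerts):
    """Share of all alerts that point at genuinely harmful content."""
    return true_alerts / (true_alerts + false_alerts)


# Hypothetical workload: 1,000,000 messages, 100 of them harmful,
# scanned by a detector with 99% sensitivity and a 1% false-positive rate.
true_alerts, false_alerts, missed = scan_outcomes(
    harmful=100, benign=999_900,
    true_positive_rate=0.99, false_positive_rate=0.01,
)

print(f"true alerts:  {true_alerts:.0f}")
print(f"false alerts: {false_alerts:.0f}")
print(f"missed:       {missed:.0f}")
print(f"precision:    {precision(true_alerts, false_alerts):.2%}")
```

Under these assumed rates, roughly 99 percent of alerts are false alarms and some harmful content still goes undetected. A provider looking at numbers like these always has a reason to tune or widen its controls.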

More than 800 security and privacy experts have warned that such measures weaken encryption and function as backdoors. Prior research cited in the paper shows that client-side scanning expands surveillance while failing to stop abuse at scale.

Robots fall inside the legal definition

The paper’s central finding concerns how EU law defines interpersonal communication services. The definition includes any service that enables direct interactive exchange of information over a network. Robots designed to mediate communication fit this description.

Social robots, care robots, and telepresence robots transmit voice, video, gestures, and contextual signals between people. The study gives concrete examples. Classroom robots used by sick children enable remote participation and emotional expression. Care robots in homes and hospitals support conversations between patients, families, and clinicians.

Once these systems qualify as communication services, they enter the scope of Chat Control. Providers may feel compelled to assess risk and introduce detection mechanisms inside robots themselves. Monitoring moves from software platforms into embodied systems present in private spaces.

Monitoring changes the threat model

From a cybersecurity perspective, the authors describe this shift as consequential. Monitoring systems designed for safety become permanent components of robot architecture. Microphones, cameras, behavior logs, and AI models feed detection pipelines that store and analyze intimate data.

Each additional pipeline increases exposure. Attackers gain new points of entry, including firmware interfaces, cloud storage, and machine learning models. Legally required access paths cannot reliably distinguish authorized from hostile use. Keys leak. Interfaces fail. Models can be manipulated.

The study describes this dynamic as “safety through insecurity”. Systems introduced to protect users increase the likelihood of exploitation.

Data backdoors enable inference attacks

The paper details how surveillance data collected from robots can support advanced attacks. Model inversion attacks can reconstruct approximations of private training data. Membership inference attacks can reveal whether a person’s data contributed to a model, exposing private information.
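To make membership inference concrete, here is a deliberately simplified sketch. It is not the attack evaluated in the paper. `MemorizingModel` is a hypothetical stand-in for an overfit model that is most confident on the exact points it was trained on, and the attacker only queries confidence scores. The data, the threshold, and all names are assumptions for illustration.

```python
import math
import random


class MemorizingModel:
    """Toy stand-in for an overfit model: confidence peaks on training points."""

    def __init__(self, training_points):
        self._points = list(training_points)

    def confidence(self, x):
        # Confidence decays with distance to the nearest training point.
        nearest = min(abs(x - p) for p in self._points)
        return math.exp(-nearest)


def infer_membership(model, x, threshold=0.999):
    """Attacker's guess: near-perfect confidence suggests x was training data."""
    return model.confidence(x) >= threshold


rng = random.Random(0)  # fixed seed so the run is repeatable
members = [rng.uniform(0, 100) for _ in range(50)]      # used to build the model
non_members = [rng.uniform(0, 100) for _ in range(50)]  # never seen by the model
model = MemorizingModel(members)

member_hits = sum(infer_membership(model, x) for x in members)
false_hits = sum(infer_membership(model, x) for x in non_members)
print(f"members flagged:     {member_hits} of {len(members)}")
print(f"non-members flagged: {false_hits} of {len(non_members)}")
```

Every training record is flagged because the model's confidence on it is exactly 1.0. Real models leak far less cleanly, but the principle is the same: a gap in behavior between seen and unseen data reveals who was in the training set.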

Robots amplify this risk because they operate in emotionally and physically vulnerable contexts. Care robots record routines, reactions, and health-related behavior. Telepresence robots capture classrooms and family conversations. When these data streams feed centralized analysis, attackers gain leverage far beyond message interception.

Decentralized approaches like federated learning receive brief discussion. The authors note that these techniques reduce data aggregation while introducing new attack classes. Technical mitigation alone does not resolve the structural risks created by mandated monitoring.
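As a rough sketch of the idea behind federated learning, the example below runs federated averaging on a one-parameter model. Each hypothetical client (think of one robot) takes a training step on its own data and shares only the resulting weight. The data, learning rate, and function names are illustrative assumptions, not anything specified in the paper.

```python
def local_update(weight, data, lr=0.1):
    """One gradient step of a least-squares fit y ≈ weight * x on private data."""
    grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
    return weight - lr * grad


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by local dataset size."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Hypothetical private datasets; both follow y = 2x and never leave the client.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(1.0, 2.0), (3.0, 6.0), (0.5, 1.0)],
]

global_weight = 0.0
for _ in range(50):
    updates = [local_update(global_weight, data) for data in clients]
    global_weight = federated_average(updates, [len(d) for d in clients])

print(f"learned weight: {global_weight:.4f}")
```

The server never sees raw samples, which is the reduction in data aggregation. The shared updates are still derived from private data, so they become the new thing an attacker can observe or tamper with.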

Control backdoors reach physical systems

Beyond data exposure, the study highlights control risks. Robots rely on remote management for updates, diagnostics, and support. Hidden access mechanisms already exist in some commercial platforms. Regulatory pressure to monitor provides justification to normalize such access.

The paper references recent findings of hardcoded keys in commercial robots. Once attackers gain access, they can issue commands, manipulate sensors, or alter decision logic. For robots that interact physically with people, compromise carries direct safety implications.
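The sketch below illustrates why a hardcoded key is so damaging, using a hypothetical command-authentication scheme built on Python's standard `hmac` module. The key value, the command names, and the scheme itself are assumptions for illustration and do not describe any specific commercial robot.

```python
import hashlib
import hmac

# Hypothetical key baked into firmware and identical on every unit shipped.
HARDCODED_KEY = b"factory-default-key"


def sign_command(key, command):
    """Return a hex HMAC-SHA256 tag that authenticates a command."""
    return hmac.new(key, command, hashlib.sha256).hexdigest()


def robot_accepts(command, signature):
    """Robot-side check: accept any command carrying a valid tag."""
    expected = sign_command(HARDCODED_KEY, command)
    return hmac.compare_digest(expected, signature)


# The vendor signs a routine maintenance command.
vendor_sig = sign_command(HARDCODED_KEY, b"run_diagnostics")

# An attacker who extracted the key from one unit signs a hostile command.
attacker_sig = sign_command(HARDCODED_KEY, b"disable_obstacle_sensor")

# Someone without the key cannot produce a valid tag.
wrong_sig = sign_command(b"guess", b"disable_obstacle_sensor")

print(robot_accepts(b"run_diagnostics", vendor_sig))
print(robot_accepts(b"disable_obstacle_sensor", attacker_sig))
print(robot_accepts(b"disable_obstacle_sensor", wrong_sig))
```

The check itself is sound, yet it cannot tell the vendor from the attacker because both hold the same secret. Once the key is recovered from a single device, every device that shares it accepts the attacker's commands.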

AI-driven robots add another layer of risk. LLMs embedded in robots can be triggered through specific prompts or contexts. Research cited in the study shows that backdoor triggers can redirect behavior without visible signs. Language becomes a pathway to control.

Trust erodes in everyday settings

The authors stress that human–robot interaction depends on trust. Robots used in elder care, therapy, and education operate through perceived companionship and support. Continuous monitoring alters that relationship.

When every interaction feeds risk analysis, robots become observers and reporters by design. The study links this shift to reduced user autonomy and acceptance. People adapt behavior when surveillance becomes ambient, especially in intimate environments.

Rules should push for transparency, keep processing on the device whenever possible, and require strong oversight so privacy stays protected. Research in human–robot interaction also needs to keep pressure on these questions, because laws and technical choices shape how people experience and trust robots.