Cyera launches AI Guardian to secure all types of AI systems

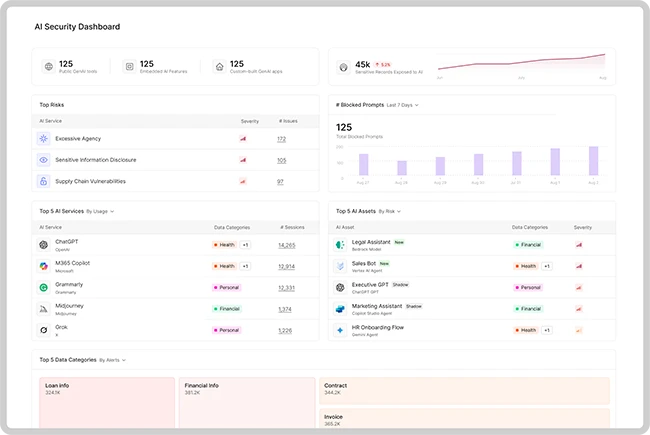

Cyera launched AI Guardian, a solution built to secure any type of AI. It expands Cyera’s platform to meet the needs of enterprises adopting AI at scale, anchored by two core products: AI-SPM, which provides a granular inventory of all AI assets, and AI Runtime Protection, which monitors and responds to AI data risks in real time.

The launch comes as enterprises scale AI initiatives while facing new security and operational risks. According to Forrester, enterprises are expanding their use of AI, with 61% using genAI, either alone or alongside predictive AI. In the last 12 months, 23% of enterprises have seen an increase in AI-based cyberattacks, and 20% have had innovation halted or slowed due to unforeseen AI risk.

“Data is the heart and soul of AI—secure it, and enterprises can keep pushing boundaries without losing control or risking exposure,” said Yotam Segev, CEO of Cyera. “That’s why we built AI Guardian on the same principles as our Data Security Platform – we’re going beyond surface level visibility and the basic question of ‘what AI is being used’ to uncovering who has access and what data is being used. With this level of protection every business can move AI forward with clarity, confidence, and control.”

AI-SPM and AI Runtime Protection work in tandem with Cyera’s DSPM and OmniDLP products, respectively, giving enterprises full visibility into their AI risks and providing:

- Broad coverage: discover and manage risk across all types of AI systems – whether public (e.g., ChatGPT), embedded in SaaS (e.g., Microsoft Copilot), or homegrown (e.g., LLMs built on Amazon Bedrock)

- Deep visibility: see not just which AI models are in use, but exactly what sensitive data they can access, and by which users, applications, or agents

- Real-time detection & response: act fast, with precise, low-noise insights; automatically detect and block prompt injection, data misuse, and unauthorized access as they happen

- Proactive compliance readiness: align with regulations and compliance frameworks, including the EU AI Act and US executive orders, with policy enforcement and auditability