Your photo could be all AI needs to clone your voice

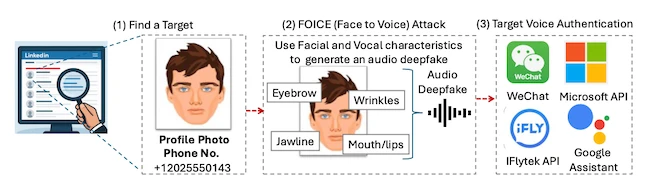

A photo of someone’s face may be all an attacker needs to create a convincing synthetic voice. A new study from Australia’s national science agency explores this possibility, testing how well deepfake detectors perform against FOICE (Face-to-Voice), an attack method that generates speech from photos.

Illustration of face to voice deepfake

From faces to voices

A new technique is changing how voice deepfakes are made. Instead of using text or a voice sample, it can generate speech from a photo by estimating how a person might sound based on their face. The system learns connections between appearance and vocal traits such as pitch and tone, then produces speech in that voice.

Because photos are much easier to find online than voice recordings, this approach makes impersonation easier to scale. In tests, it fooled WeChat Voiceprint, a voice-based login feature used for account authentication, about 30 percent of the time on the first attempt and nearly 100 percent after several tries.
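To see how a first-attempt success rate of about 30 percent could approach 100 percent after several tries, consider the rough Python sketch below. It assumes each attempt succeeds independently with the same probability, which the study as described here does not state. The function name `cumulative_success` is hypothetical and used only for illustration.

```python
def cumulative_success(p_single: float, attempts: int) -> float:
    """Chance that at least one of `attempts` tries succeeds.

    Assumption: tries are independent and share the same success
    probability. Real login systems may lock out or adapt after
    failed attempts, which would break this assumption.
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # Probability that every try fails, subtracted from 1.
    return 1.0 - (1.0 - p_single) ** attempts


if __name__ == "__main__":
    # 0.30 is the approximate first-attempt rate quoted in the article.
    for n in (1, 3, 5, 10):
        print(f"{n:>2} attempts: {cumulative_success(0.30, n):.1%}")
```

Under that independence assumption, ten attempts at 30 percent each would already give better than a 97 percent chance of at least one success, which is in line with the "nearly 100 percent" figure.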

Since the generated audio lacks the patterns that deepfake detection models are trained to catch, most of those systems failed to recognize it as fake.

Testing current detectors

To measure detector performance, the researchers built a dataset of genuine and synthetic speech and tested each system under three listening conditions: clean, noisy, and denoised audio. They included both FOICE-generated and text-to-speech samples to see how detectors handled familiar and unfamiliar methods.

Each detector was first tested in its original state to set a baseline, then retrained on FOICE-generated examples. This showed how targeted fine-tuning affected performance and whether it reduced accuracy on other types of fake audio.

Thousands of samples, balanced between real and synthetic voices, were used to mimic practical situations like phone calls and online meetings.
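An evaluation of this kind can be scored per listening condition, as in the minimal Python sketch below. It is not the researchers' code. The record layout, the function name `error_rates`, and the toy data are all assumptions made for illustration. The sketch separates the two error types a detector can make: flagging genuine speech as fake (false alarm) and letting a fake through (miss).

```python
from collections import defaultdict


def error_rates(records):
    """Summarize detector results per listening condition.

    `records` is an iterable of (condition, is_fake, predicted_fake)
    tuples. This layout is a hypothetical convenience, not a format
    taken from the study.
    """
    counts = defaultdict(
        lambda: {"real": 0, "fake": 0, "false_alarm": 0, "miss": 0}
    )
    for condition, is_fake, predicted_fake in records:
        c = counts[condition]
        if is_fake:
            c["fake"] += 1
            if not predicted_fake:
                c["miss"] += 1          # fake audio accepted as genuine
        else:
            c["real"] += 1
            if predicted_fake:
                c["false_alarm"] += 1   # genuine audio flagged as fake

    summary = {}
    for condition, c in counts.items():
        total = c["real"] + c["fake"]
        errors = c["false_alarm"] + c["miss"]
        summary[condition] = {
            "accuracy": (total - errors) / total,
            "false_alarm_rate": c["false_alarm"] / c["real"] if c["real"] else None,
            "miss_rate": c["miss"] / c["fake"] if c["fake"] else None,
        }
    return summary


# Made-up toy results, one real and one fake clip per condition.
TOY_RECORDS = [
    ("clean", True, True),
    ("clean", False, False),
    ("noisy", True, False),
    ("noisy", False, False),
    ("denoised", True, True),
    ("denoised", False, True),
]

if __name__ == "__main__":
    for condition, stats in error_rates(TOY_RECORDS).items():
        print(condition, stats)
```

Running the same scoring before and after fine-tuning, and on each synthesis method separately, is one way to tell whether a gain on one kind of fake comes at the cost of another.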

Detectors stumble on new AI voice attacks

The researchers tested several popular voice deepfake detectors to see how they handled audio created with FOICE. They first measured how each system performed without any additional training, then retrained each one with examples of FOICE-generated voices to see if that helped.

At baseline, all detectors struggled. The systems that work well on traditional text-to-speech or voice-conversion deepfakes failed to recognize audio made from photos. Some marked genuine recordings as fake, while others missed most fakes. The issue is that these tools were designed to spot traces left by older synthesis methods, and FOICE does not create those same patterns.

After retraining with FOICE samples, accuracy improved. Several models performed almost perfectly on those fakes. But when the same detectors were tested on another synthesis method, their performance dropped again. One model fell from nearly 40 percent accuracy to below 4 percent. Only one system stayed consistent across both tests.

The results point to a limitation of fine-tuning: training detectors to catch one kind of fake can make them worse at catching others. Fine-tuning works for quick adaptation but risks overfitting, which can leave systems too narrow and less flexible when new deepfake tools appear.

Evaluation scope and limitations

The authors emphasized that their tests don’t cover every possible attack scenario, but the trends point to a wider problem across voice authentication and detection. They concluded that the industry needs broader datasets, new training regimes, and models capable of identifying synthetic voices generated from non-traditional inputs.

They also called for expanded testing across multiple generators and real-world environments. Future studies should explore how detectors perform with different vocoders, device conditions, and video-based conditioning. The goal, they said, is to move beyond reactive fine-tuning and toward proactive defenses that can recognize novel synthesis patterns before they appear in the wild.