Impart enables safe, in-app enforcement against AI-powered bots

Impart Security has launched Programmable Bot Protection, a runtime approach to bot defense that brings detection and enforcement together within the application. Impart makes enforcement operational by letting teams see what would be blocked before they turn enforcement on.

Bot protection has long split detection and enforcement across two tools that were never designed to work together. Web Application Firewalls (WAFs) attempted bot detection at the edge but lacked behavioral intelligence. Dedicated bot vendors improved detection with browser fingerprinting but relied on the WAF for enforcement, leaving teams stuck in monitor mode even with better signals.

Meanwhile, AI-powered bots have rendered both approaches inadequate. They use real browsers, maintain legitimate-looking sessions, and are indistinguishable from real users in production traffic. The result is a multi-billion-dollar industry that can detect suspicious behavior but cannot safely enforce against it.

“Most companies pay for bot protection they never fully turn on. That’s not a technology problem, it’s a trust problem. We solved it by letting teams see exactly what would happen before anything is enforced. When you can prove a block is safe using your own production data, enforcement stops being scary and starts being operational,” said Jonathan DiVincenzo, CEO of Impart.

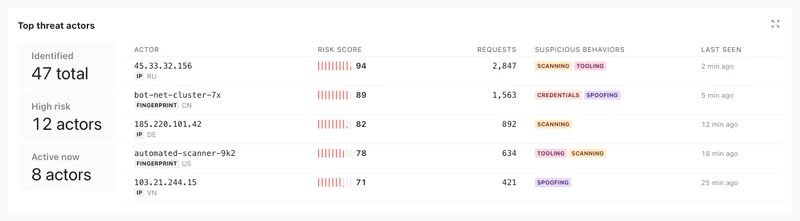

Programmable Bot Protection runs inline, inside the live request path, evaluating behavior rather than relying on headers, IP reputation, or device fingerprints. By correlating activity across sessions, identities, and time, Impart detects all 21 OWASP automated threat categories that evade perimeter-based controls, including credential stuffing, carding, inventory denial, and account-creation abuse.
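To illustrate what correlating activity across sessions, identities, and time can look like in practice, here is a minimal Python sketch of one credential-stuffing signal: a single session attempting many distinct accounts within a short window. This is not Impart's implementation or API. The `LoginCorrelator` class, its parameters, and the thresholds are all hypothetical, chosen only to make the idea concrete.

```python
from collections import defaultdict, deque


class LoginCorrelator:
    """Hypothetical sketch: flag a session that tries many distinct
    accounts inside a sliding time window (a credential-stuffing signal)."""

    def __init__(self, window_seconds=60.0, max_distinct_accounts=5):
        # Both thresholds are illustrative assumptions, not vendor defaults.
        self.window_seconds = window_seconds
        self.max_distinct_accounts = max_distinct_accounts
        # session_id -> deque of (timestamp, account) login attempts
        self._events = defaultdict(deque)

    def observe(self, session_id, account, timestamp):
        """Record a login attempt and return True if the session looks automated."""
        events = self._events[session_id]
        events.append((timestamp, account))
        # Drop attempts that have aged out of the sliding window.
        while events and timestamp - events[0][0] > self.window_seconds:
            events.popleft()
        distinct_accounts = {acct for _, acct in events}
        return len(distinct_accounts) > self.max_distinct_accounts
```

A user retrying one account never trips this signal, while a session cycling through a list of usernames does. A production system would combine many such signals rather than rely on a single threshold.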

Impart runs inside the application without adding latency or requiring agents. The platform closes the enforcement gap through three capabilities:

- Runtime behavioral detection that evaluates intent inside the application, not at the edge, identifying patterns AI-powered bots cannot fake because those patterns exist at the application layer

- Shadow mode validation to observe exactly what would be blocked using real production traffic, then enforce inline with a complete audit trail for every decision

- Programmable policies as code, version-controlled in Git, deployed through CI/CD, and rolled back in seconds, giving teams full ownership of enforcement logic without vendor tickets or black-box decisions

Before enforcement is applied to a single request, teams run Programmable Bot Protection in shadow mode against live production traffic. No request is altered or blocked, but everything that would be blocked is logged with full context. Teams validate accuracy against their own data, their own edge cases, and their own peak traffic patterns. When they turn enforcement on, it is a decision backed by evidence from their own production environment.
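The shadow-mode workflow described above can be sketched in a few lines of Python. This is an illustrative model only, not Impart's product API: `Mode`, `Decision`, and `PolicyEngine` are hypothetical names. The point it shows is that the same rule evaluation runs in both modes and every decision is recorded, but a request is only blocked once the mode is switched to enforce.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    SHADOW = "shadow"    # log what would be blocked, block nothing
    ENFORCE = "enforce"  # block matching requests


@dataclass
class Decision:
    request_id: str
    rule: str
    would_block: bool  # the rule matched this request
    blocked: bool      # the request was actually blocked


@dataclass
class PolicyEngine:
    """Hypothetical engine: identical evaluation in both modes, full audit trail."""
    mode: Mode = Mode.SHADOW
    audit_log: list = field(default_factory=list)

    def evaluate(self, request_id, rule, matched):
        # Only enforce mode turns a match into an actual block.
        blocked = matched and self.mode is Mode.ENFORCE
        decision = Decision(request_id, rule, would_block=matched, blocked=blocked)
        self.audit_log.append(decision)  # every decision is recorded
        return decision
```

Under this model, a team could review the `would_block` entries in `audit_log` collected in shadow mode and, once satisfied that they contain no legitimate traffic, set `mode` to `Mode.ENFORCE` without changing any rule logic.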

“The bot protection market accepted that enforcement in production was too risky. Impart proved it doesn’t have to be,” said Karan Mehandru, Managing Director at Madrona. “As AI-driven attacks accelerate, the teams that can safely enforce will separate from the teams that are still watching dashboards.”