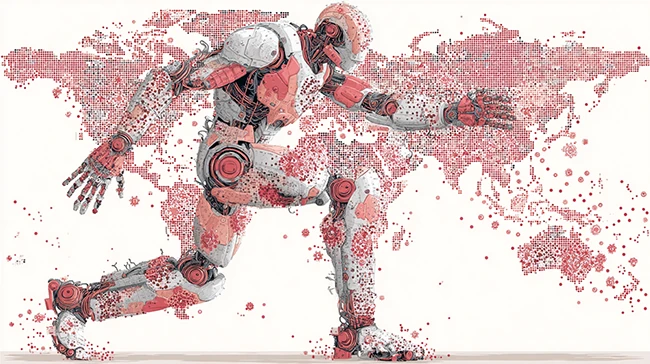

Deepfakes are rewriting the rules of geopolitics

Deception and media manipulation have always been part of warfare, but AI has taken them to a new level. Entrust reports that a deepfake attempt occurred every five minutes in 2024, while the European Parliament estimates that 8 million deepfakes will circulate across the EU this year.

These technologies can destabilize a country without a single shot being fired. People react faster to bad news and are more likely to share it, and they are poor at spotting fake information. The 2024 anti-immigrant riots in the UK showed how quickly false claims on social media can spin out of control and turn into violence.

Fake videos of leaders making false statements, doctored audio instructions, and manipulated images can shake governments or shape public opinion. Businesses aren’t safe either. False announcements or fake board statements can affect stock prices and investor confidence.

Some social media platforms have cut back on content moderation, making it even easier for this material to go viral.

The use of deepfakes in geopolitics

They say whoever controls information controls the narrative. In the past, TV and newspapers shaped public perception, but today social networks reach far more people. The real power of deepfakes lies in polluting the media space and sowing distrust. When people stop trusting what they see, their opinions become easier to shape and manipulate.

In recent years, authoritarian regimes have been interfering more in democratic elections and spreading false news. Russia, in particular, is frequently accused of attempting to influence elections worldwide. This has fueled widespread concern, as many fear deepfakes and voice clones could undermine the integrity of elections and even change the face of election campaigns.

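Just before Slovakia’s 2023 parliamentary election, a fake audio clip surfaced that purported to capture a candidate discussing electoral fraud. Although the candidate denied it, the clip went viral. Because it was released during the pre-election silence period, when campaigning and election coverage are restricted, it was hard to rebut in time and may have tipped the outcome in favor of another candidate.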

Alongside election interference, deepfakes are also being used in armed conflicts around the world. The conflicts in the Middle East and Ukraine, as well as the recent brief war between India and Pakistan, have produced numerous deepfakes, used by all sides involved.

At the beginning of Russia’s invasion of Ukraine, a deepfake video of President Volodymyr Zelensky appeared in which he seemed to order his troops to surrender.

This could become a template for future wars, which will be fought not only with bullets and bombs.

Impact on organizations and businesses

Competitors or adversaries can target companies that are strategically important, creating doubt about their products or services. A fake video of a high-level executive claiming a product isn’t safe can spread online, shaking customer trust and investor confidence. Stock prices can drop, partners may question contracts, and supply chains can be disrupted.

Eight out of ten executives are worried about how AI-driven false information could hurt their business.

Even if a fabricated video is debunked, the damage is often done. It takes time for people to accept the truth, and disinformation campaigns can keep pushing the idea that the video was real and that authorities are covering it up.

Global efforts to regulate deepfakes

Countries are starting to tackle the growing deepfake problem with new laws and regulations. The EU AI Act lays out rules for using AI safely and transparently, including a requirement that deepfakes be disclosed as AI-generated. At the national level, Denmark is amending its copyright law to restrict the creation and sharing of deepfakes, giving people control over their own image and voice.

In the United States, the Take It Down Act requires online platforms to remove non-consensual intimate images, including AI-generated deepfakes, within 48 hours of a victim’s request, and makes knowingly publishing such content a federal crime.

“Governments and international organizations play a crucial role in combating AI fraud through regulation, policy formulation, enforcement, and international cooperation,” said Andrius Popovas, Chief Risk Officer at Mano Bank.

Fighting deepfakes with AI

Paradoxically, AI itself may be the most effective tool against deepfakes. Advanced AI systems can analyze patterns, language, and context to help moderate content, check facts, and flag false information. But we also need to be aware of their limitations.

Detecting deepfakes is much harder than making them, because detectors need large datasets of real and fake content that take a lot of human work to label. Deepfakes of politicians and celebrities are easier to detect, since there is plenty of reference data on them, but ordinary people are harder to protect. Many detection systems also struggle with new kinds of deepfakes: they often perform well on content produced by known methods but fail when faced with unfamiliar generation techniques.