AI-generated images have a problem of credibility, not creativity

GenAI makes image creation simple, but it also raises hard problems around intellectual property, authenticity, and accountability. Researchers at Queen’s University in Canada surveyed watermarking as a way to tag AI-generated images so that their origin and integrity can be verified.

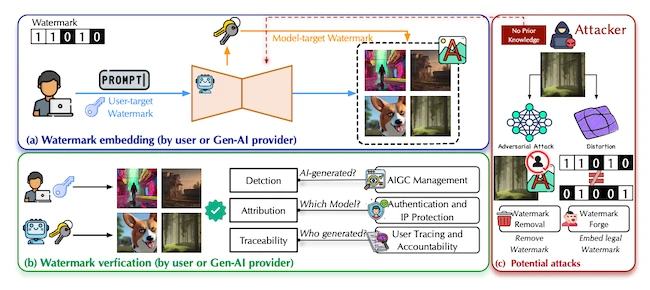

Watermarking scenario overview for GenAI

Building the framework

A watermarking system works as a complete security process with several essential parts: embedding, verification, attack channels, and detection. It starts when a coded message is placed inside an image, either during or after generation. That message can later be extracted and checked against a key to confirm where the image originated.

Some marks are added after an image is finished, while others are embedded during generation. When the mark is built into the model itself, it becomes part of the image creation process and has a better chance of surviving compression, cropping, or other minor edits that often destroy marks applied after the fact.

Each system relies on cryptographic keys, embedding procedures, and statistical checks. The watermark must be invisible to viewers but readable by authorized parties. It should survive ordinary image handling while remaining secret enough that no one without the key can reproduce or forge it.

The survey notes that viewing watermarking as a defined security process allows it to be tested and improved much like encryption or authentication systems. That structure gives researchers a common language to measure reliability and traceability.

Comparing the main techniques

Watermarking began with signal-processing methods that modified pixel values or frequency coefficients, using transforms such as the discrete cosine transform (DCT) or the discrete wavelet transform (DWT). These techniques were simple to apply, but they were designed for finished images and did not fit the way generative models synthesize pictures.
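The following is a minimal sketch of DCT-domain embedding on a single 8×8 block. It assumes a simple rule borrowed from early coefficient-comparison schemes: one bit is stored by forcing one mid-frequency coefficient above or below another. The coefficient positions, the `margin` value, and the function names are illustrative choices and do not come from the survey.

```python
import math

N = 8  # block size, as in JPEG-style DCT watermarking


def _alpha(k):
    """Orthonormal DCT scaling factor."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def _basis(pos, freq):
    return math.cos((2 * pos + 1) * freq * math.pi / (2 * N))


def dct2(block):
    """Orthonormal 2-D DCT-II of an N x N block."""
    return [[_alpha(u) * _alpha(v) * sum(block[x][y] * _basis(x, u) * _basis(y, v)
                                         for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse transform (orthonormal DCT-III), so idct2(dct2(b)) == b up to float error."""
    return [[sum(_alpha(u) * _alpha(v) * coeffs[u][v] * _basis(x, u) * _basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


# Two mid-frequency coefficients whose ordering encodes the bit (illustrative choice).
P1, P2 = (3, 4), (4, 3)


def embed_bit(block, bit, margin=10.0):
    """Return a new block whose coefficients at P1 and P2 encode `bit`."""
    c = dct2(block)
    mid = (c[P1[0]][P1[1]] + c[P2[0]][P2[1]]) / 2
    high, low = mid + margin / 2, mid - margin / 2
    # bit 1: P1 > P2; bit 0: P1 < P2. The sum of the pair is preserved.
    c[P1[0]][P1[1]], c[P2[0]][P2[1]] = (high, low) if bit else (low, high)
    return idct2(c)


def extract_bit(block):
    """Read the bit back by comparing the two coefficients."""
    c = dct2(block)
    return 1 if c[P1[0]][P1[1]] > c[P2[0]][P2[1]] else 0
```

Mid-frequency positions are a common compromise. Changes to low frequencies tend to be visible, and high frequencies are the first thing compression discards. A real system would also round and clip the result to 8-bit pixels, which adds error that the margin has to absorb.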

The rise of deep learning introduced new possibilities. Encoder–decoder networks such as HiDDeN, RivaGAN, and StegaStamp learned to hide and retrieve marks automatically. Training balanced invisibility against recovery accuracy. Some systems also added adversarial learning or simulated distortions so that the mark could withstand noise or blur.
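The trade-off between invisibility and recovery is usually expressed as a weighted training loss. The sketch below assumes mean squared error for image distortion and binary cross-entropy for message recovery, computed on flat lists rather than tensors. The weights `w_image` and `w_message` are hypothetical knobs. Real encoder–decoder systems add further terms, such as an adversarial loss, which are omitted here.

```python
import math


def image_distortion(cover, marked):
    """Mean squared error between cover and watermarked pixels (invisibility term)."""
    return sum((c - m) ** 2 for c, m in zip(cover, marked)) / len(cover)


def message_error(bits, probs, eps=1e-7):
    """Binary cross-entropy between embedded bits and decoder probabilities (recovery term)."""
    total = 0.0
    for b, p in zip(bits, probs):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total -= b * math.log(p) + (1 - b) * math.log(1 - p)
    return total / len(bits)


def watermark_loss(cover, marked, bits, probs, w_image=1.0, w_message=1.0):
    """Weighted sum that training would minimize (weights are assumptions)."""
    return w_image * image_distortion(cover, marked) + w_message * message_error(bits, probs)
```

Raising `w_image` pushes training toward less visible marks at the cost of recovery accuracy. Raising `w_message` does the opposite.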

Diffusion models such as Stable Diffusion and Midjourney brought another shift. Researchers started embedding marks directly inside these systems, producing two main approaches: fine-tuning-based and initial noise-based.

Fine-tuning methods, such as Stable Signature and WOUAF, adjust parts of a diffusion model (for example, its image decoder) so that every picture it produces carries an identifying code. The code can point to the model creator or to a particular user. This protects intellectual property, but it requires additional training of large models and consumes substantial computing resources.
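When a fine-tuned model stamps a code into every image, attribution reduces to matching the decoded bits against a registry of issued codes. This sketch makes three assumptions. It uses 48-bit codes. It derives each user's code from a hash purely for illustration, whereas a real deployment would more likely assign and store random codes. It tolerates a few bit errors by choosing the nearest code in Hamming distance. `user_code`, `attribute`, and the registry are hypothetical names.

```python
import hashlib

CODE_BITS = 48  # assumed code length, for illustration only


def user_code(user_id, bits=CODE_BITS):
    """Hypothetical helper: derive a fixed bit code for a user from SHA-256."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(bits)]


def hamming(a, b):
    """Number of positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def attribute(decoded, registry, max_errors):
    """Return the user whose code is nearest to `decoded`, or None if none is within max_errors."""
    best_user, best_dist = None, max_errors + 1
    for user, code in registry.items():
        d = hamming(decoded, code)
        if d < best_dist:
            best_user, best_dist = user, d
    return best_user
```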

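Initial noise-based methods alter the random noise that diffusion models use as a starting point. Every image is generated from that noise, so a pattern placed in it carries through the entire generation process. TreeRing, RingID, and PRC are leading examples. TreeRing embeds concentric ring patterns in the frequency (Fourier) domain of the noise. PRC instead uses a pseudorandom cryptographic code whose output cannot be distinguished from ordinary random noise without the key. These approaches are faster to apply because they need no model retraining, and they can carry more information, although they introduce new weaknesses. Detection also typically requires inverting the generation process to estimate the starting noise from the finished image.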
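The core TreeRing idea can be illustrated on a toy grid. This sketch assumes a single 16×16 channel of Gaussian noise, a naive Fourier transform, and a key made of one value per ring. It skips the diffusion model entirely and measures the pattern directly on the noise. A real detector would first have to recover that noise from the finished image. All names and parameters are illustrative.

```python
import cmath
import math
import random

N = 16      # toy noise grid; real latents are larger, multi-channel tensors (assumption)
RADIUS = 4  # hypothetical radius of the keyed low-frequency disc


def dft2(grid):
    """Naive 2-D discrete Fourier transform (adequate for a 16 x 16 toy grid)."""
    return [[sum(grid[x][y] * cmath.exp(-2j * math.pi * (u * x + v * y) / N)
                 for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idft2(freq):
    """Inverse of dft2."""
    return [[sum(freq[u][v] * cmath.exp(2j * math.pi * (u * x + v * y) / N)
                 for u in range(N) for v in range(N)) / (N * N)
             for y in range(N)] for x in range(N)]


def ring_index(u, v):
    """Ring number for frequency (u, v), or None if it lies outside the keyed disc."""
    fu = u if u <= N // 2 else u - N  # map array index to signed frequency
    fv = v if v <= N // 2 else v - N
    r = math.hypot(fu, fv)
    return round(r) if r <= RADIUS else None


def make_key(seed):
    """One real value per ring, at the scale (std N) of unit-noise Fourier coefficients."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, N) for _ in range(RADIUS + 1)]


def embed_rings(noise, key):
    """Overwrite the keyed rings in the noise spectrum, then return to the spatial domain."""
    freq = dft2(noise)
    for u in range(N):
        for v in range(N):
            k = ring_index(u, v)
            if k is not None:
                freq[u][v] = complex(key[k])
    # The ring pattern is symmetric under (u, v) -> (-u, -v), so the inverse is real
    # up to floating-point error; the negligible imaginary part is dropped.
    return [[z.real for z in row] for row in idft2(freq)]


def ring_distance(noise, key):
    """Mean absolute gap between the noise spectrum and the key inside the disc."""
    freq = dft2(noise)
    gaps = []
    for u in range(N):
        for v in range(N):
            k = ring_index(u, v)
            if k is not None:
                gaps.append(abs(freq[u][v] - key[k]))
    return sum(gaps) / len(gaps)
```

A small `ring_distance` indicates that the keyed rings are present. Fresh noise scores far higher, and a detection threshold exploits that gap.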

Testing the systems

Researchers use three main criteria to evaluate watermarking: visual quality, capacity, and detectability.

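Visual quality checks that the mark does not degrade the picture. Common metrics include the Structural Similarity Index (SSIM), which compares a watermarked image with its unmarked original, and the Fréchet Inception Distance (FID), which compares the statistics of large sets of marked and unmarked images. A sound watermark should remain invisible and should not change the appearance or content of the image.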
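SSIM combines luminance, contrast, and structure comparisons. For brevity, the sketch below computes it over the whole image as a single window. It assumes flattened 8-bit grayscale pixels and the standard constants K1 = 0.01 and K2 = 0.03. Standard implementations instead average SSIM over small sliding windows. FID is not sketched here because it depends on features from a pretrained Inception network.

```python
from statistics import fmean

# Stabilizing constants from the SSIM definition for 8-bit images (L = 255).
C1 = (0.01 * 255) ** 2
C2 = (0.03 * 255) ** 2


def global_ssim(x, y):
    """SSIM over the whole image as one window (a simplification of windowed SSIM)."""
    mx, my = fmean(x), fmean(y)
    vx = fmean([(a - mx) * (a - mx) for a in x])
    vy = fmean([(b - my) * (b - my) for b in y])
    cov = fmean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return ((2 * mx * my + C1) * (2 * cov + C2)) / ((mx * mx + my * my + C1) * (vx + vy + C2))
```

Identical images score 1.0, and a faint watermark should keep the score very close to 1.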

Capacity measures how much data a mark can hold. Older techniques could hide only a few bits, while methods such as PRC can embed up to 2,500 bits. That is enough for identification codes, timestamps, or policy tags. However, capacity trades off against the other criteria: the more information stored, the greater the chance that the mark becomes noticeable or is damaged by edits.
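The sketch below shows how identification codes, time data, and policy tags might share a watermark payload. It packs a creator ID, a timestamp, and policy flags into a fixed bit string and decodes them again. The 72-bit layout is an assumption for illustration and does not reflect any specific scheme's format. Real payloads would usually add error-correction bits on top.

```python
import struct

# Hypothetical layout: 4-byte creator ID, 4-byte Unix timestamp (seconds), and
# 1-byte policy flags, big-endian with no padding: 9 bytes = 72 bits.
LAYOUT = ">IIB"
PAYLOAD_BITS = struct.calcsize(LAYOUT) * 8


def pack_payload(creator_id, timestamp, policy_flags):
    """Serialize the fields and expand each byte into 8 bits, most significant first."""
    raw = struct.pack(LAYOUT, creator_id, timestamp, policy_flags)
    return [(byte >> (7 - i)) & 1 for byte in raw for i in range(8)]


def unpack_payload(bits):
    """Reassemble bytes from a recovered bit list and decode the fields."""
    if len(bits) != PAYLOAD_BITS:
        raise ValueError(f"expected {PAYLOAD_BITS} bits, got {len(bits)}")
    raw = bytes(sum(bit << (7 - j) for j, bit in enumerate(bits[i:i + 8]))
                for i in range(0, len(bits), 8))
    return struct.unpack(LAYOUT, raw)
```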

Detectability refers to how reliably a watermark can be recovered after changes or attacks. Systems must avoid both false positives (flagging unmarked images) and false negatives (missing marked ones). Even a small error rate causes serious problems at scale: a false-positive rate of 0.1% applied to one billion images wrongly flags one million of them. Detection thresholds must therefore be set carefully to prevent misclassification.
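For multi-bit schemes, one common way to set the threshold is a binomial test. Suppose an unmarked image yields random bits. Then each decoded bit matches the expected code with probability 1/2, and the chance of k or more matches out of n is a binomial tail. This sketch assumes that idealized model (real decoders are not perfectly random on clean images). It finds the smallest match count that keeps the false-positive rate under a target.

```python
from math import comb


def false_positive_rate(n_bits, threshold):
    """P(at least `threshold` of `n_bits` match) when each bit matches with probability 1/2."""
    return sum(comb(n_bits, k) for k in range(threshold, n_bits + 1)) / 2 ** n_bits


def min_threshold(n_bits, target_fpr):
    """Smallest match count whose false-positive rate is at most target_fpr."""
    for t in range(n_bits + 1):
        if false_positive_rate(n_bits, t) <= target_fpr:
            return t
    return n_bits + 1  # target is below 2**-n_bits: no threshold can meet it
```

Multiplying `false_positive_rate(n_bits, t)` by the size of an image library gives the expected number of clean images that would be flagged.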

Exposing the weak points

Many watermarking schemes remain fragile under deliberate attack. Attackers can crop, compress, or regenerate an image through another model to remove the hidden pattern. Others can forge marks to claim ownership or to make content appear to come from a trusted source.

The authors divide these threats into two groups: resilience and security. Resilience measures whether a mark survives unintentional distortions such as compression or random noise. Security covers deliberate actions such as forging marks or stealing keys. If a mark can be copied to other images or reused by an unauthorized party, it no longer proves anything about an image's origin.
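Resilience is typically measured by distorting marked images and checking whether detection still succeeds. The sketch below uses a classic spread-spectrum mark on a one-dimensional stand-in for pixel data: a key-derived ±1 pattern is added to the signal and detected by correlation. Gaussian noise and coarse quantization serve as hypothetical proxies for real distortions. The strength, threshold, and function names are assumptions chosen for this toy setup.

```python
import random

THRESHOLD = 1.5  # decision threshold tuned for this toy setup (assumption)


def keyed_pattern(key, n):
    """Pseudorandom +/-1 pattern derived from an integer key."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed_spread(signal, key, strength=3.0):
    """Add the keyed pattern at a fixed strength."""
    return [s + strength * w for s, w in zip(signal, keyed_pattern(key, len(signal)))]


def correlation_score(signal, key):
    """Correlate the mean-removed signal with the pattern: near `strength` if marked, near 0 if not."""
    pattern = keyed_pattern(key, len(signal))
    mean = sum(signal) / len(signal)
    return sum((s - mean) * w for s, w in zip(signal, pattern)) / len(signal)


def add_noise(signal, sigma, seed=0):
    """Unintentional distortion: additive Gaussian noise."""
    rng = random.Random(seed)
    return [s + rng.gauss(0.0, sigma) for s in signal]


def quantize(signal, step=8.0):
    """Crude stand-in for lossy compression: snap values to a coarse grid."""
    return [step * round(s / step) for s in signal]
```

Checking the score under a wrong key also touches on the security side. Without the right key, the correlation score stays near zero.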

New attack strategies exploit how diffusion models work. Regeneration attacks add noise to a watermarked image and then reconstruct it with a generative model, producing a nearly identical image without the mark. Detector-aware attacks apply small, targeted pixel changes that fool verification tools while leaving the picture visually unchanged. These tactics mirror adversarial techniques seen in other areas of machine learning security.

Defensive ideas include encrypting watermark keys, varying where marks are placed, and training models to recognize and preserve watermarks during generation. These measures aim to keep the mark intact even when attackers adapt their methods.
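One way to vary where marks are placed is to derive embedding positions from a secret key and a per-image identifier, so that an attacker cannot predict them. This sketch assumes HMAC-SHA256 to derive a seed and Python's `random.Random` to sample positions. `keyed_positions` is a hypothetical helper. A production system would derive positions with a cryptographically secure generator rather than the Mersenne Twister used here.

```python
import hashlib
import hmac
import random


def keyed_positions(secret, image_id, n_positions, k):
    """Pick k distinct embedding positions out of n_positions, derived from a secret key."""
    # HMAC-SHA256 binds the choice to both the secret and this particular image.
    seed = hmac.new(secret, image_id.encode("utf-8"), hashlib.sha256).digest()
    # Illustrative only: the Mersenne Twister is not a cryptographic generator.
    rng = random.Random(seed)
    return sorted(rng.sample(range(n_positions), k))
```

The same secret and image ID always reproduce the same positions for the verifier. A different key or a different image yields a different placement.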

Global momentum builds for watermarked AI content

Watermarking is drawing interest from both governments and private companies. China already requires marks on AI-generated material. The EU’s AI Act includes transparency rules that reference machine-readable markings.

In the US, federal guidance from the White House and the National Institute of Standards and Technology supports voluntary use. Major firms are experimenting as well. Google’s SynthID embeds invisible marks during image creation, while OpenAI promotes the C2PA standard for signed provenance metadata that can be read across tools.