Faster LLM tool routing comes with new security considerations

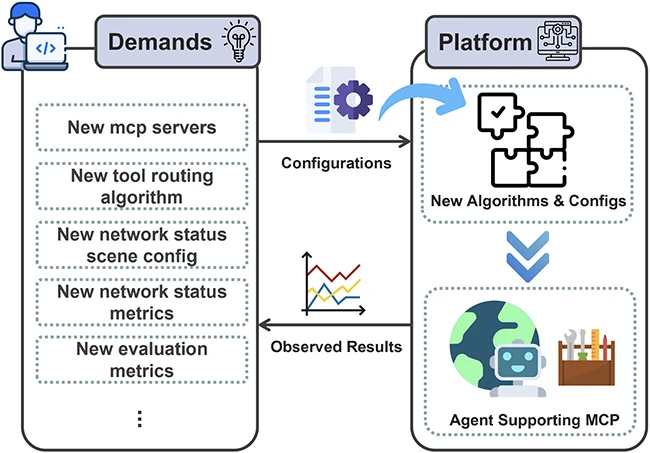

Large language models depend on outside tools to perform real-world tasks, but those connections often add latency or fail outright. A new study from the University of Hong Kong proposes a way to fix that. The research team developed a platform called NetMCP that adds network awareness to the Model Context Protocol (MCP), the protocol that lets LLMs connect to external tools and data sources.

The research focuses on improving how LLMs choose which external servers or tools to use. It introduces a new routing algorithm that accounts for semantic relevance and network performance. The goal is to make LLMs faster, more reliable, and better suited for large-scale environments where latency and outages are common.

Moving beyond semantic matching

Current MCP systems typically route tool requests based only on the semantic similarity between a user query and each tool's description. That works in principle, but it ignores the actual state of the network. The most relevant tool might sit on a server that is slow or unreachable, which causes delays or failed calls.
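To make the limitation concrete, here is a minimal Python sketch of semantic-only selection. The tool names, the three-dimensional vectors standing in for real text embeddings, and the `online` flag are all hypothetical. The router picks the closest description even though that server is offline, because it never looks at network state.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def route_semantic_only(query_vec: list, tools: list) -> dict:
    """Pick the tool whose description vector is closest to the query.
    The 'online' field is never consulted."""
    return max(tools, key=lambda t: cosine(query_vec, t["embedding"]))


# Hypothetical tools; the vectors stand in for real text embeddings.
tools = [
    {"name": "search_a", "embedding": [0.9, 0.1, 0.0], "online": False},
    {"name": "search_b", "embedding": [0.8, 0.3, 0.1], "online": True},
]
query = [1.0, 0.2, 0.0]
chosen = route_semantic_only(query, tools)
print(chosen["name"], chosen["online"])  # search_a False
```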

The research team points out that this limitation is most acute in production environments, where dozens or hundreds of MCP servers run under varying network loads. Their proposed solution integrates network awareness into the routing process so that LLMs can consider both what a tool does and how well its server is performing in real time.

The design of the NetMCP platform

NetMCP serves as a testbed for this idea. It provides a controlled environment with five simulated network states, ranging from near-ideal conditions to high latency, frequent outages, and fluctuating connections. This lets researchers test routing algorithms under realistic network behavior instead of assuming perfect connectivity.

Colin Constable, CTO at Atsign, said this type of performance-driven design can come with a trade-off. “Platforms like NetMCP, by incorporating network metrics such as latency and load into LLM agent tool routing, fundamentally expand the attack surface,” he explained. “This architectural decision introduces a critical trade-off: pursuing performance optimization by trusting unverified network telemetry sacrifices security rigor.”

The SONAR algorithm

At the center of the platform is a new algorithm called Semantic-Oriented and Network-Aware Routing, or SONAR. It combines semantic matching with continuous monitoring of network health. Each MCP server is scored based on two factors: how relevant its tools are to the user’s request and how stable the server’s network connection is.
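The article does not give SONAR's exact scoring formula, so the following is only a sketch under an assumed form: a linear blend of a semantic relevance score and a network health score, both normalized to [0, 1]. The server names, scores, and the default weight of 0.5 are hypothetical. It shows how a slightly less relevant but stable server can outrank the best semantic match.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    server: str
    semantic: float  # relevance of the server's tools to the query, in [0, 1]
    health: float    # network stability score, in [0, 1]


def combined_score(c: Candidate, w_semantic: float = 0.5) -> float:
    """Assumed linear blend of semantic relevance and network health."""
    return w_semantic * c.semantic + (1.0 - w_semantic) * c.health


def route(candidates: list, w_semantic: float = 0.5) -> Candidate:
    """Return the candidate with the highest combined score."""
    return max(candidates, key=lambda c: combined_score(c, w_semantic))


candidates = [
    Candidate("server_a", semantic=0.95, health=0.10),  # most relevant, unstable
    Candidate("server_b", semantic=0.85, health=0.90),  # slightly less relevant, stable
]
print(route(candidates).server)  # server_b
```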

The algorithm tracks several network quality metrics, including latency, availability, and jitter. It also uses historical data to predict how servers are likely to behave. If a server’s latency climbs above a certain threshold, it is treated as offline. The algorithm then avoids routing new tasks to that server until conditions improve.
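The per-server bookkeeping described above could look roughly like the hypothetical tracker below. It is not NetMCP's code: the exponentially weighted moving average (EWMA) used as the latency predictor, the 1,000 ms offline threshold, the smoothing factor, and the window size are all assumptions chosen for illustration.

```python
from collections import deque
from typing import Optional


class ServerHealth:
    """Rolling network-health tracker for one MCP server (hypothetical design)."""

    def __init__(self, offline_threshold_ms: float = 1000.0, alpha: float = 0.3, window: int = 20):
        self.offline_threshold_ms = offline_threshold_ms
        self.alpha = alpha  # EWMA smoothing factor: higher reacts faster to new samples
        self.predicted_latency_ms: Optional[float] = None
        self.samples = deque(maxlen=window)  # recent probes; None marks a failed probe

    def record(self, latency_ms: Optional[float]) -> None:
        """Store one probe result and update the latency prediction."""
        self.samples.append(latency_ms)
        if latency_ms is None:
            return
        if self.predicted_latency_ms is None:
            self.predicted_latency_ms = latency_ms
        else:
            self.predicted_latency_ms = (
                self.alpha * latency_ms + (1 - self.alpha) * self.predicted_latency_ms
            )

    def availability(self) -> float:
        """Fraction of recent probes that succeeded."""
        if not self.samples:
            return 0.0
        return sum(1 for s in self.samples if s is not None) / len(self.samples)

    def jitter_ms(self) -> float:
        """Mean absolute change between consecutive successful probes."""
        ok = [s for s in self.samples if s is not None]
        if len(ok) < 2:
            return 0.0
        return sum(abs(b - a) for a, b in zip(ok, ok[1:])) / (len(ok) - 1)

    def is_offline(self) -> bool:
        """Offline if no probe has succeeded yet or predicted latency exceeds the threshold."""
        return self.predicted_latency_ms is None or self.predicted_latency_ms > self.offline_threshold_ms


health = ServerHealth(offline_threshold_ms=1000.0)
for probe in [120.0, 140.0, None, 2500.0, 2600.0]:
    health.record(probe)
print(health.is_offline(), health.availability())  # True 0.8
```

Because the prediction is smoothed, a single slow probe does not immediately knock a server offline; it takes sustained high latency, as in the last two probes here.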

By balancing these metrics, SONAR can adapt to changing network conditions while still selecting tools that best match the task. The paper describes three operational modes: quality-priority, latency-sensitive, and balanced. These modes allow different weightings between semantic accuracy and network performance, depending on the application.
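One plausible way to express the three modes is as presets for the weight on semantic relevance, with the remainder going to network health. The sketch below assumes that design; the mode weights (0.8, 0.5, 0.2), server names, and scores are hypothetical, not values from the paper.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    server: str
    semantic: float  # relevance to the query, in [0, 1]
    health: float    # network health, in [0, 1]


# Hypothetical weights on semantic relevance; the remainder goes to network health.
MODE_WEIGHTS = {
    "quality-priority": 0.8,
    "balanced": 0.5,
    "latency-sensitive": 0.2,
}


def pick(candidates: list, mode: str) -> str:
    """Return the server with the best weighted score under the given mode."""
    w = MODE_WEIGHTS[mode]
    best = max(candidates, key=lambda c: w * c.semantic + (1 - w) * c.health)
    return best.server


candidates = [
    Candidate("precise_but_slow", semantic=0.95, health=0.40),
    Candidate("good_and_fast", semantic=0.80, health=0.95),
]
for mode in MODE_WEIGHTS:
    print(mode, pick(candidates, mode))
```

With these numbers, quality-priority picks `precise_but_slow`, while the balanced and latency-sensitive modes both pick `good_and_fast`.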

Constable noted that this balance also creates new security complexities. “The SONAR algorithm’s requirement for both semantic similarity and network health creates a redundant success pathway for attackers,” he said. “An attacker can achieve tool hijacking by compounding two simultaneous attacks: semantic manipulation through malicious input and network metric spoofing that tricks the system into selecting a compromised endpoint.”

Building a realistic test platform

NetMCP was designed as a modular system to support experimentation. It includes five components: MCP servers, a network status environment, an agent that handles queries, a routing algorithm module, and an evaluation module. The platform can run both live and simulated tests.

In live mode, the system connects to actual MCP servers such as Exa, DuckDuckGo, and Brave. In simulation mode, it reproduces the same conditions without external dependencies. This setup allows researchers to run repeatable tests and isolate the impact of network behavior on performance.

The network environment generator can create detailed latency profiles for each server. It can simulate fluctuating connections using sinusoidal patterns or random outages with controlled duration and probability. These test conditions allow for side-by-side comparisons of different routing strategies.
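A generator of this kind can be sketched in a few lines. The function below is a hypothetical illustration rather than NetMCP's implementation: latency oscillates sinusoidally around a base value, and outages of a fixed length start with a given probability. A seeded random number generator keeps runs repeatable, which matters for side-by-side comparisons.

```python
import math
import random
from typing import List, Optional


def latency_profile(
    steps: int,
    base_ms: float,
    amplitude_ms: float,
    period: int,
    outage_prob: float,
    outage_len: int,
    seed: int = 0,
) -> List[Optional[float]]:
    """Per-step latency for one simulated server; None means the server is down.

    Latency oscillates sinusoidally around base_ms. At each step where no outage is
    in progress, a new outage of outage_len steps starts with probability outage_prob.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    profile: List[Optional[float]] = []
    outage_left = 0
    for t in range(steps):
        if outage_left == 0 and rng.random() < outage_prob:
            outage_left = outage_len
        if outage_left > 0:
            profile.append(None)
            outage_left -= 1
        else:
            profile.append(base_ms + amplitude_ms * math.sin(2 * math.pi * t / period))
    return profile


fluctuating = latency_profile(steps=60, base_ms=200, amplitude_ms=150, period=20,
                              outage_prob=0.0, outage_len=0)
flaky = latency_profile(steps=60, base_ms=200, amplitude_ms=150, period=20,
                        outage_prob=0.05, outage_len=5, seed=42)
```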

Results under different network conditions

The researchers compared SONAR against three existing approaches: a baseline retrieval-augmented generation (RAG) method, a reranked variant called RerankRAG that adds LLM scoring, and a prediction-enhanced variant called PRAG.

Under ideal network conditions, all four algorithms achieved similar accuracy. However, RerankRAG introduced delays of over 20 seconds per query, while SONAR and PRAG stayed below two seconds.

The advantage of SONAR became more visible when the network was unstable. In hybrid and fluctuating scenarios, PRAG’s failure rate reached about 90 percent, while SONAR avoided all failures. Average latency dropped from around 900 milliseconds to about 22 milliseconds, a significant improvement in responsiveness.

Even when every server in the system experienced periodic fluctuations, SONAR maintained a task success rate of 93 percent and reduced average latency by 74 percent compared to PRAG. These results suggest that network-aware routing can make a measurable difference in LLM performance, although they were obtained on a test platform rather than in production.

Security and resilience concerns

While the technical gains are significant, Constable cautioned that the design also opens potential avenues for exploitation, because adversaries could spoof network health metrics to influence routing behavior. “Attackers can manipulate the routing engine, misdirect the agent’s query, and potentially exfiltrate sensitive data to an attacker-controlled endpoint,” he said. “For denial-of-service attacks, an attacker could spoof severe congestion for a legitimate tool, forcing the agent to divert traffic to a vulnerable alternative.”
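The congestion-spoofing scenario Constable describes can be illustrated with a toy router. This sketch assumes the same hypothetical linear blend of semantic relevance and reported health used for illustration elsewhere; the server names and scores are invented. A single forged health value is enough to flip the decision toward the attacker's endpoint, even though nothing about the tools themselves changed.

```python
def route(candidates: list, w_semantic: float = 0.5) -> str:
    """Hypothetical router: linear blend of semantic relevance and reported health."""
    best = max(candidates,
               key=lambda c: w_semantic * c["semantic"] + (1 - w_semantic) * c["health"])
    return best["server"]


honest = [
    {"server": "legit_tool", "semantic": 0.90, "health": 0.95},
    {"server": "attacker_tool", "semantic": 0.70, "health": 0.90},
]

# The attacker forges telemetry reporting severe congestion on the legitimate server.
spoofed = [dict(c) for c in honest]
spoofed[0]["health"] = 0.05

print(route(honest))   # legit_tool
print(route(spoofed))  # attacker_tool
```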

He suggested that organizations experimenting with MCP-based systems adopt Zero Trust AI principles and enforce cryptographic provenance for any network telemetry used in routing decisions. “Without verification, network health data becomes a point of control for attackers,” Constable said.
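Constable does not name a specific mechanism. One simple form of provenance is a message authentication code: each telemetry report is tagged with an HMAC-SHA256 over a canonical serialization, and the router discards reports whose tag fails to verify or whose timestamp is stale. The sketch below assumes a pre-shared key and a 30-second freshness window, both hypothetical; a deployment that cannot share secrets would more likely use asymmetric signatures instead.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def _canonical(report: dict) -> bytes:
    # Deterministic serialization so signer and verifier hash identical bytes.
    return json.dumps(report, sort_keys=True, separators=(",", ":")).encode()


def sign_telemetry(report: dict, key: bytes) -> dict:
    """Attach an HMAC-SHA256 tag to a telemetry report."""
    tag = hmac.new(key, _canonical(report), hashlib.sha256).hexdigest()
    return {"report": report, "tag": tag}


def verify_telemetry(envelope: dict, key: bytes, max_age_s: float = 30.0,
                     now: Optional[float] = None) -> Optional[dict]:
    """Return the report only if its tag is valid and its timestamp is fresh."""
    report, tag = envelope.get("report"), envelope.get("tag")
    if not isinstance(report, dict) or not isinstance(tag, str):
        return None
    expected = hmac.new(key, _canonical(report), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):
        return None  # altered in transit or signed with a different key
    now = time.time() if now is None else now
    ts = report.get("timestamp")
    if not isinstance(ts, (int, float)) or abs(now - ts) > max_age_s:
        return None  # missing, stale, or future-dated timestamp (possible replay)
    return report


key = b"demo-key-not-for-production"
report = {"server": "search_b", "latency_ms": 42.0, "timestamp": 1000.0}
envelope = sign_telemetry(report, key)
forged = {"report": {**report, "latency_ms": 5000.0}, "tag": envelope["tag"]}
```

A router built this way would simply ignore `forged`, so a spoofed congestion report could not influence the routing score.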

Next steps for the project

The team plans to expand NetMCP to support additional LLMs and explore reinforcement learning as a way to improve decision-making in the routing process. Future tests will include distributed deployments across different geographic regions to validate how well the system performs outside the lab.

If the approach scales as expected, it could be a step forward in how large models connect to external systems, reducing latency and increasing reliability for enterprise applications. At the same time, relying on network telemetry means developers will need to weigh the performance benefits against the new security challenges that come with it.