GenAI data violations more than double

Security teams track activity that moves well beyond traditional SaaS platforms, with employees interacting daily with generative AI tools, personal cloud services, and automated systems that exchange data without direct human input. These patterns shape how sensitive information moves across corporate environments and where security controls apply.

The Cloud and Threat Report 2026 from Netskope examines this shifting activity through telemetry collected from enterprise cloud traffic over the past year. The report documents changes in how users access applications, share data, and encounter threats across web and cloud channels. Its findings outline where data exposure, phishing activity, and automated processes intersect in day-to-day operations, offering security practitioners a view of how cloud risk shows up in practical terms.

Unauthorized cloud services and personal applications

Researchers highlight the ongoing risk from personal cloud service usage and unsanctioned applications. Users within many organizations interact with cloud services outside of sanctioned enterprise platforms. This activity includes use of personal storage tools and other consumer-focused cloud software that lacks enterprise governance. These interactions produce data policy violations that are challenging for security teams to detect without comprehensive monitoring.

The analysis emphasizes that teams must map where sensitive information travels, including through personal app usage. Security professionals are advised to implement controls that log and manage user activity across all cloud services. Tracking data movement and applying consistent policy across managed and unmanaged services are identified as key steps to reducing exposure risks.

Phishing and persistent threat tactics

Phishing remains among the top threat vectors for attempts to compromise credentials or introduce payloads into enterprise systems. The report reviews data showing that phishing campaigns remain frequent and continue to target cloud-based credentials. Adversaries use email and messaging channels to deliver links that direct users to malicious sites or to capture login data for widely used productivity suites.

Alongside phishing, malware continues to arrive through channels that use trusted cloud services. Attackers embed harmful files within cloud storage links or compromise legitimate cloud accounts to distribute malicious software. Analysis in the report shows that defenders must treat cloud platforms as both delivery mechanisms for legitimate data and potential avenues for threat propagation.

The report suggests that defenders apply threat detection methods that include analysis of user interaction patterns, file types, and destinations. Correlating unusual activity with known threat signatures can help reduce successful phishing or malware campaigns.

Agentic AI and emerging risk categories

A notable portion of the report covers agentic AI. This category includes systems and tools that take actions based on defined goals with limited human direction. Enterprise experimentation with agentic AI has increased. These tools interact with APIs and systems without direct human input for each action. This behavior introduces risk because automated processes may transfer or expose data in ways that evade traditional human-centric controls.

Security teams are advised to incorporate agentic AI monitoring into their risk assessments. This includes mapping the tasks these systems perform and ensuring they operate within approved governance frameworks. The report notes that unattended AI activity must be visible in logs and policy engines to ensure adherence to data protection standards.

Recommendations for enterprise defenders

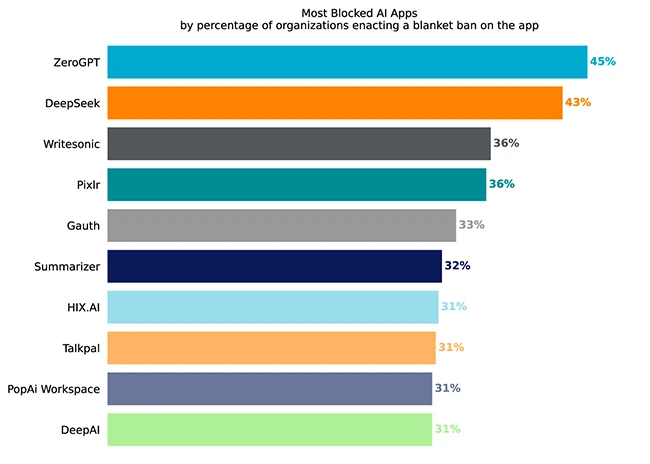

Netskope outlines a series of recommendations for security practitioners. The first item involves gaining visibility into unsanctioned applications and genAI tools. Teams should catalogue applications in use and assess their interaction with corporate data. Software that scans traffic and enforces data policies across cloud services is described as a fundamental enabler of risk reduction.
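As a rough illustration of the cataloguing step, the sketch below summarizes traffic records by application and flags which ones sit outside an approved list. It is not taken from the report or from any Netskope product: the record fields (`app`, `user`, `bytes_uploaded`), the `SANCTIONED_APPS` set, and the `catalogue_apps` function are all hypothetical names chosen for this example.

```python
from collections import defaultdict

# Hypothetical allowlist of approved applications (assumption for this sketch).
SANCTIONED_APPS = {"corp-drive"}


def catalogue_apps(records):
    """Summarize per-application usage from an iterable of traffic records.

    Each record is assumed to be a dict with the keys
    "app", "user", and "bytes_uploaded".
    """
    summary = defaultdict(lambda: {"users": set(), "bytes_uploaded": 0})
    for record in records:
        entry = summary[record["app"]]
        entry["users"].add(record["user"])
        entry["bytes_uploaded"] += record["bytes_uploaded"]

    # Collapse the user sets into counts and mark sanctioned status.
    return {
        app: {
            "sanctioned": app in SANCTIONED_APPS,
            "user_count": len(entry["users"]),
            "bytes_uploaded": entry["bytes_uploaded"],
        }
        for app, entry in summary.items()
    }


if __name__ == "__main__":
    sample = [
        {"app": "corp-drive", "user": "alice", "bytes_uploaded": 1200},
        {"app": "personal-storage", "user": "alice", "bytes_uploaded": 5000},
        {"app": "personal-storage", "user": "bob", "bytes_uploaded": 300},
    ]
    for app, stats in catalogue_apps(sample).items():
        print(app, stats)
```

In practice the records would come from whatever proxy or gateway logs an organization already collects; the point is only that an inventory of apps, users, and upload volume gives a starting list for review.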

Second, the report encourages adoption of data loss prevention methods that cover cloud and AI services. This includes context-aware policies that trigger alerts when sensitive content is posted or uploaded to external platforms. Logging and alerting across all web and cloud transactions are treated as prerequisites for timely response.
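To make the idea of a context-aware policy concrete, here is a minimal sketch that raises an alert only when two conditions hold together: the content matches a sensitive pattern, and the destination is not an approved platform. Everything in it is an assumption for illustration, not the report's method or a real DLP engine: the two regular expressions, the `SANCTIONED_DOMAINS` set, the `Upload` type, and the `evaluate_upload` function are hypothetical, and production detectors are far more sophisticated.

```python
import re
from dataclasses import dataclass

# Illustrative detectors only (assumptions); real DLP tools use richer methods.
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}

# Hypothetical set of destinations approved for sensitive data.
SANCTIONED_DOMAINS = {"sharepoint.example.com"}


@dataclass
class Upload:
    user: str
    destination: str
    content: str


def evaluate_upload(upload):
    """Return alert messages for sensitive content sent to unapproved destinations."""
    matches = [
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(upload.content)
    ]
    # Context check: the same content is allowed on an approved platform.
    if not matches or upload.destination in SANCTIONED_DOMAINS:
        return []
    return [f"{upload.user} sent {name} to {upload.destination}" for name in matches]


if __name__ == "__main__":
    prompt = Upload("alice", "chat.genai.example", "Customer SSN is 123-45-6789")
    for alert in evaluate_upload(prompt):
        print(alert)
```

The design choice worth noting is that content and destination are evaluated together, so the same text produces no alert on an approved platform but does when pasted into an external genAI tool.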

Third, defenders are urged to enhance phishing defenses. The report points to combined use of user training, URL analysis, and credential monitoring to lower the success rate of social engineering campaigns. These measures provide layers of assurance that reduce the chance of compromised credentials and subsequent cloud account misuse.

“Enterprise security teams exist in a constant state of change and new risks as organisations evolve and adversaries innovate,” said Ray Canzanese, Director of Netskope Threat Labs. “However, genAI adoption has shifted the goal posts. It represents a risk profile that has taken many teams by surprise in its scope and complexity, so much so that it feels like they are struggling to keep pace and losing sight of some security basics. Security teams need to expand their security posture to be ‘AI-Aware’, evolving policy and expanding the scope of existing tools like DLP, to foster a balance between innovation and security at all levels.”