Agentic AI edges closer to everyday production use

Many security and operations teams now spend less time asking whether agentic AI belongs in production and more time working out how to run it safely at scale. A new Dynatrace research report looks at how large organizations are moving agentic AI from pilots into live environments and where those efforts are stalling.

The report shows agentic AI already embedded in core operational functions, including IT operations, cybersecurity, data processing, and customer support. Seventy percent of respondents say they use AI agents in IT operations and system monitoring, and nearly half run agentic AI across both internal and external use cases.

Budgets reflect that momentum. Most respondents expect spending on agentic AI to increase over the next year, with many organizations already investing between $2 million and $5 million annually. Funding levels track closely with use cases tied to reliability and operational performance.

From pilots to limited production

Agentic AI adoption remains uneven, though progress is visible. Half of surveyed organizations report agentic AI projects running in production for limited use cases, and another 44% say projects are broadly adopted within select departments. Most teams operate between two and ten active agentic AI projects.

IT operations, cybersecurity, and data processing lead in production readiness. About half of projects in these areas are either live or in the process of being operationalized.

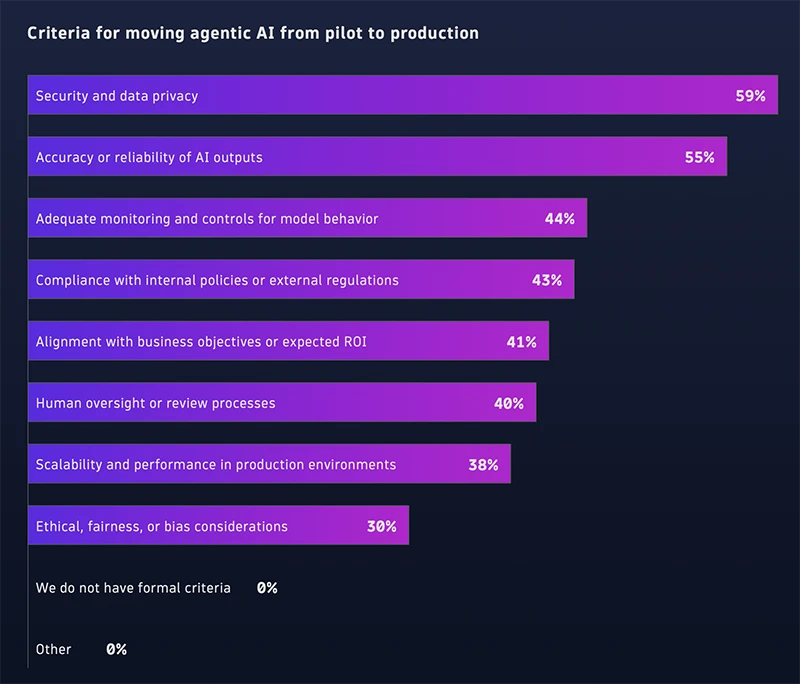

Criteria for moving projects forward center on technical performance. Security and data privacy rank highest, followed by accuracy and reliability of AI outputs. Monitoring and control mechanisms also play a central role, with many teams treating observability as a prerequisite for broader rollout.

Observability gaps slow progress

Technical barriers remain common. Most respondents cite security, privacy, or compliance concerns as blockers. A similar share reports difficulty managing and monitoring agents at scale. Limited visibility into agent behavior and difficulty tracing the downstream effects of autonomous actions appear frequently across regions and industries.

These issues become more pronounced as systems grow more interconnected. Agentic AI systems often coordinate across multiple tools, models, and data sources, which increases the need for real-time insight into decisions and execution paths. Without that visibility, teams struggle to diagnose unexpected behavior or link technical signals to business outcomes.

The report highlights observability as a foundational control layer. Nearly 70% of respondents already use observability tools during agentic AI implementation, and more than half rely on them during development and operational phases. Common uses include monitoring training data quality, detecting anomalies in real time, validating outputs, and ensuring compliance.

Humans remain part of the loop

Despite rising levels of autonomy, human oversight remains standard practice. More than two-thirds of agentic AI decisions are currently verified by a person. Data quality checks, human review of outputs, and monitoring for drift rank as the most widely used validation methods.

Only a small share of organizations build fully autonomous agents without supervision. Most teams develop a mix of autonomous and human-supervised agents, depending on the task and risk profile. Business-oriented applications tend to include higher levels of human involvement than infrastructure-focused use cases.
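As a rough illustration of mixing autonomous and human-supervised agents by risk profile, the sketch below routes each proposed agent action either to automatic execution or to a human reviewer. It is a minimal sketch and not drawn from the report: the `Risk` levels, the `ProposedAction` type, the `route` function, and the default threshold are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    """Hypothetical risk tiers; a real deployment would define its own."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class ProposedAction:
    """An action an agent wants to take (illustrative structure only)."""
    agent: str
    description: str
    risk: Risk


def route(action: ProposedAction, threshold: Risk = Risk.MEDIUM) -> str:
    """Send actions at or above the threshold to a person; run the rest automatically."""
    if action.risk.value >= threshold.value:
        return "human_review"
    return "auto_execute"


if __name__ == "__main__":
    actions = [
        ProposedAction("ops-agent", "restart a stateless cache node", Risk.LOW),
        ProposedAction("support-agent", "issue a customer refund", Risk.HIGH),
    ]
    for action in actions:
        print(f"{action.agent}: {action.description} -> {route(action)}")
```

Lowering the threshold moves more actions into human review, which is one way to model the higher human involvement reported for business-oriented applications.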

Measuring success through reliability

When organizations assess agentic AI outcomes, reliability and resilience stand out. Sixty percent of respondents say technical performance is their top success metric. Operational efficiency, developer productivity, and customer satisfaction also rank highly.

Monitoring methods remain mixed. About half of respondents rely on logs, metrics, and traces, and nearly half still review agent-to-agent communication flows manually. Automated anomaly detection and dashboards appear frequently, though many teams continue to combine automated and manual approaches.
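To make automated anomaly detection over a metric concrete, here is a minimal sketch that flags points deviating sharply from a rolling baseline. The report does not describe any specific technique; the rolling z-score approach, the `find_anomalies` function, the window size, the threshold, and the sample latency values are all assumptions made for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value is far from the mean of the preceding window.

    A point is flagged when its z-score against the previous `window`
    samples exceeds `threshold`. Both parameters are illustrative defaults.
    """
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: treat any change at all as an anomaly.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical per-call latencies (ms) for one agent's tool invocations.
    latencies_ms = [100, 102, 99, 101, 100, 103, 480, 101]
    print(find_anomalies(latencies_ms))
```

A check like this would typically sit alongside manual review rather than replace it, consistent with the combined approach many respondents describe.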

Respondents describe success in terms of systems that maintain performance under stress and recover quickly from faults. Given the speed at which errors can propagate across interconnected agents, early detection and rapid response remain central goals.

Scaling with tighter controls

The report frames the next phase of agentic AI adoption around governance and control. Teams point to the need for shared factual signals, standardized metrics, and consistent guardrails that guide autonomous actions. Observability functions as the mechanism that ties these elements together across the AI lifecycle.

“Organizations are not slowing adoption because they question the value of AI, but because scaling autonomous systems safely requires confidence that those systems will behave reliably and as intended in real-world conditions,” said Alois Reitbauer, Chief Technology Strategist at Dynatrace.

Agentic AI deployments expand the operational attack surface and increase dependence on monitoring, validation, and oversight. As more projects reach production, trust becomes an operational requirement supported by tooling, process, and human judgment working in concert.