AI agents are here, now comes the hard part for CISOs

AI agents are being deployed inside enterprises today to handle tasks across security operations. This shift creates new opportunities for security teams but also introduces new risks.

Google Cloud’s new report, The ROI of AI 2025, shows that 52% of organizations using generative AI have moved to agentic AI. These agents are more than chatbots: they can make decisions, execute tasks, and interact with other systems under human oversight. For CISOs, this means security now includes managing the behavior and outputs of autonomous systems that directly affect business processes.

There are security benefits, but proving ROI is harder

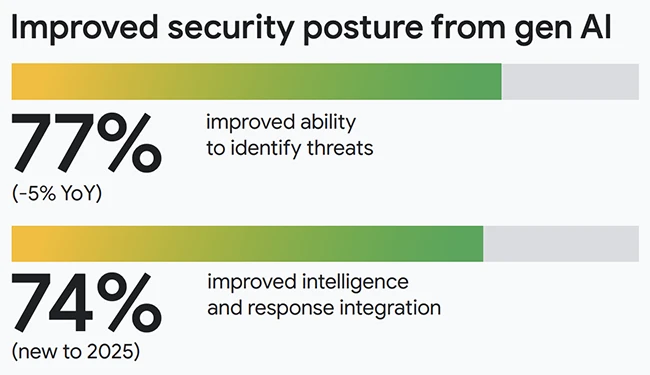

The report found that 49% of executives say generative AI has improved their security posture. Respondents reported benefits such as a 77% improvement in threat identification and a 61% faster time to resolve incidents. Some organizations also saw a 53% drop in security tickets after implementing AI-driven processes.

Anton Chuvakin, Senior Staff Consultant in Google Cloud’s Office of the CISO, told Help Net Security that AI’s most immediate effect is on speed. By automating repetitive tasks, AI shrinks the time between an alert and resolution without sacrificing quality. He explained that CISOs can track this improvement with metrics like Mean Time to Detect (MTTD), Mean Time to Investigate (MTTI), and Mean Time to Respond (MTTR).
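As a rough illustration of how these three metrics could be tracked, the sketch below averages the gaps between incident timestamps. The `Incident` fields and the exact interval definitions (for example, measuring MTTR from detection to resolution) are assumptions made for this example; definitions vary between teams and are not specified in the report.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Incident:
    # Hypothetical record layout; field names are illustrative only.
    occurred: datetime      # when the malicious activity began (often an estimate)
    detected: datetime      # when the first alert fired
    investigated: datetime  # when triage/investigation concluded
    resolved: datetime      # when response actions were completed


def mean_minutes(deltas):
    """Average a series of timedeltas and return the result in minutes."""
    return mean(d.total_seconds() for d in deltas) / 60


def soc_metrics(incidents):
    """Compute MTTD, MTTI, and MTTR in minutes (assumed definitions)."""
    return {
        "MTTD": mean_minutes(i.detected - i.occurred for i in incidents),
        "MTTI": mean_minutes(i.investigated - i.detected for i in incidents),
        "MTTR": mean_minutes(i.resolved - i.detected for i in incidents),
    }
```

Comparing these values for a period before and after an AI rollout, using the same definitions in both periods, is one way to make the speed claim measurable.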

“AI-powered systems can automatically enrich alerts with contextual data, build timelines, and correlate events from different sources, providing a complete picture for analysts in seconds instead of hours,” he said.

Chuvakin also pointed to other metrics such as escalation rate, alert-to-analyst ratio, and coverage of previously ignored alerts. These measures help CISOs understand whether AI is freeing up their teams for higher-value activities like proactive threat hunting and detection rule refinement.
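A minimal sketch of how these workload metrics might be computed from simple counts follows. The function name, its parameters, and the formulas are assumptions for illustration (for instance, escalation rate is taken here as escalated alerts divided by reviewed alerts); the article does not define them.

```python
def triage_metrics(total_alerts, reviewed_alerts, escalated_alerts, analysts):
    """Return workload metrics for one reporting period (assumed formulas)."""
    if total_alerts <= 0 or analysts <= 0:
        raise ValueError("total_alerts and analysts must be positive")
    return {
        # Share of reviewed alerts that were passed to a higher tier.
        "escalation_rate": (
            escalated_alerts / reviewed_alerts if reviewed_alerts else 0.0
        ),
        # Average number of alerts each analyst is responsible for.
        "alerts_per_analyst": total_alerts / analysts,
        # Share of all alerts that received any review; this rises when
        # previously ignored alerts start being handled.
        "coverage": reviewed_alerts / total_alerts,
    }
```

For example, `triage_metrics(1000, 800, 40, 5)` describes a period in which five analysts faced 1,000 alerts, 800 were reviewed, and 40 were escalated.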

These metrics can demonstrate returns, but they require consistent tracking and a focus on quality. Faster response times and reduced breach risk are helpful benchmarks, but they do not capture how AI affects resilience, compliance, or long-term risk reduction.

Governance challenges slow adoption

More than one-third of executives ranked data privacy and security as their top priority when choosing large language model providers. This signals that while interest in AI is high, organizations are cautious about how they deploy it.

Marina Kaganovich, Executive Trust Lead in Google Cloud’s Office of the CISO, said governance is the foundation for safe AI deployment. “It’s a continuous, layered process that requires a deep understanding of the business context, regulatory landscape, technical implementation and operational workflows. In light of the agent’s capacity for taking autonomous action, anticipating potential points of failure and designing mitigants is arguably the hardest part.”

Kaganovich also stressed the importance of strong cyber hygiene, including encryption, zero trust architecture, IAM controls, and data loss prevention measures. Screening and filtering model prompts and responses can help prevent prompt injection attacks, sensitive data leaks, and harmful content. Logging is another critical step, providing a detailed record of AI agent activities for continuous monitoring and accountability.
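To make the screening and logging ideas concrete, here is a deliberately simple sketch using only Python's standard library. The pattern lists, the `agent_audit` logger name, and both function names are hypothetical; keyword and regex matching is shown only as an illustration, and it would not be sufficient on its own against real prompt injection or data leakage.

```python
import logging
import re

# Audit logger for agent activity; the name is an assumption for this example.
logger = logging.getLogger("agent_audit")

# Illustrative patterns only; real deployments need far broader coverage.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def screen_prompt(prompt):
    """Return True if the prompt may be sent to the model, False if blocked."""
    for pattern in INJECTION_PATTERNS:
        if pattern.search(prompt):
            logger.warning("prompt blocked: matched %r", pattern.pattern)
            return False
    logger.info("prompt allowed")
    return True


def redact_response(text):
    """Mask sensitive values in a model response and log each redaction."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            logger.warning("redacted %d %s value(s) from response", count, label)
    return text
```

Routing every decision through a single logger gives monitoring tools one stream of agent activity to review, which is the accountability role the logging step is meant to serve.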

“Even the most robust technical and operational controls are insufficient without a security-aware culture,” she said. Gaining hands-on experience with AI agents and educating teams with real-world scenarios can help staff understand the unique risks these systems bring.

Executive sponsorship matters more than technology alone

The research found that 78% of organizations with C-suite sponsorship are already seeing positive ROI from generative AI. By comparison, companies without strong executive backing lag behind on results.

For CISOs, this highlights the need to bring senior leaders into security discussions early. AI-driven initiatives affect every part of the business. Without alignment, security teams may be left reacting to risks instead of shaping strategy. Building a shared roadmap with business, IT, and legal teams helps ensure security is part of the conversation.

Next steps for security leaders

As AI agents take on a bigger role in daily operations, CISOs will need to step up on governance and risk. Here are some actions to consider now:

- Identify high-value security use cases for AI, such as threat detection or automated incident response.

- Build trust with policies and ongoing communication about how AI systems are used and monitored.

- Establish a formal AI rulebook to protect data and intellectual property and to maintain compliance.

- Invest in training so teams understand the opportunities and risks of working with AI.