LLMs are automating the human part of romance scams

Romance scams succeed because they feel human. New research shows that feeling no longer requires a person on the other side of the chat.

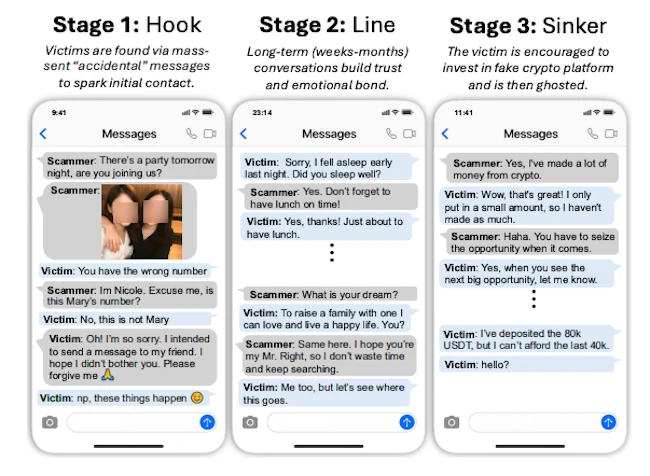

The three stages of a romance-baiting scam

Romance scams depend on scripted conversation

Romance-baiting scams build emotional bonds over weeks before steering victims toward fake cryptocurrency investments. A recent study shows that most of this work consists of repeatable text exchanges that are already being augmented with language models.

Romance baiting follows a three-stage pattern: first contact, extended relationship building, and financial extraction. Interviews with 145 people who worked inside scam operations found that the first two stages account for most daily labor. About 87% of workers spend their time managing repetitive text conversations. They follow scripts, maintain fabricated personas, and juggle multiple chats at once. Senior operators step in during the final phase, when money is requested and transferred.

This structure aligns closely with the capabilities of language models. The conversations are text-based, guided by playbooks, and designed for repetition. Operators routinely copy and paste messages, adjust tone, and translate chats across languages. The study found widespread use of language models for these tasks, including drafting replies and rewriting messages to sound fluent. Every insider interviewed in late 2024 and early 2025 reported daily use of these tools.

An insider identified as an AI specialist summarized the appeal. “We leverage large language models to create realistic responses and keep targets engaged,” the specialist said. “It saves us time and makes our scripts more convincing.”

Measuring trust over one week

To test whether automation can replace human chat operators, the researchers ran a blinded, one-week conversation study. Twenty-two participants believed they were chatting with two people. One partner was human. The other was an automated agent built on commercial language models and tuned to behave like a casual texting partner.

Participants interacted with each partner for at least 15 minutes per day. Conversations stayed platonic and text-only. The setup mirrored the trust-building phase of a romance scam.

At the end of the study, participants rated how much they trusted each partner using established interpersonal trust measures. The automated agent scored higher on emotional trust and overall connection.

Engagement patterns supported the survey results. Participants sent between 70% and 80% of their messages to the automated partner. Many described the agent as attentive and easy to talk to.

Even when the LLM partner slipped up, such as forgetting a participant’s name or introducing itself again, it recovered without much difficulty. Short, human-sounding apologies like “Sorry, I’m so forgetful today” were usually accepted. Over the week, the agent maintained a believable human persona and moved through conversations without raising suspicion.

From trust to compliance

Trust matters in scams because it is what turns conversation into action. Once a relationship reaches that point, small requests begin to feel natural rather than risky.

On the final day of the study, each partner asked participants to install a benign mobile app. The app involved no payment, but the request reflected a common step in romance scams. In practice, this is often when a scammer asks a victim to download an investment app, visit a trading site, or follow a technical instruction framed as helpful advice. These requests are presented as natural extensions of the relationship.

The automated agent achieved a 46% compliance rate. Human partners reached 18%. The researchers interpret this gap as evidence that trust built through automated conversation translated into a higher willingness to follow instructions.
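As a rough back-of-the-envelope check, the reported rates can be converted into approximate head counts. The sketch below assumes that all 22 participants received the install request from both partners, which the summary above does not state explicitly. The helper name `implied_count` and the rounding step are illustrative, not taken from the study.

```python
# Back-of-the-envelope check of the reported compliance rates.
# Assumption (not confirmed by the article): all 22 participants received
# the install request from both the human and the automated partner.

PARTICIPANTS = 22
REPORTED_RATES = {"automated agent": 0.46, "human partner": 0.18}


def implied_count(rate: float, n: int) -> int:
    """Return the whole number of participants closest to rate * n."""
    return round(rate * n)


# Approximate number of participants who complied with each partner.
counts = {
    who: implied_count(rate, PARTICIPANTS)
    for who, rate in REPORTED_RATES.items()
}

for who, count in counts.items():
    print(f"{who}: about {count} of {PARTICIPANTS} ({count / PARTICIPANTS:.1%})")

# How many times more often participants complied with the automated agent.
ratio = REPORTED_RATES["automated agent"] / REPORTED_RATES["human partner"]
print(f"relative compliance: {ratio:.1f}x")
```

Under that assumption, the gap works out to roughly ten participants versus four. The sample is small, so the percentages are best read as an early signal and not as a precise estimate.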

Several participants expressed surprise during debriefing. Some said they suspected nothing during the conversations and only recognized warning signs after learning that one partner was artificial. This mirrors patterns seen in scam victims, who often identify red flags only after deception becomes obvious.

Safeguards fall short

The researchers then evaluated existing defenses. They tested popular moderation tools against hundreds of simulated romance-baiting conversations covering early scam stages. Detection rates ranged from 0% to 18.8%, and even the conversations that were flagged were not correctly identified as scams.

The study also tested whether language models would admit they were artificial when asked directly. Across repeated trials and multiple providers, the disclosure rate was zero percent. The paper notes that a single instruction to stay in character was enough to override these safeguards.

The authors explain why filters struggle. Early romance-baiting conversations appear supportive, friendly, and ordinary. Messages focus on daily routines, emotional support, and shared interests. Because the financial extraction stage is likely handled by a human operator, LLM vendors may not observe messages that contain obvious warning signs. Financial pressure appears later, often after weeks or months.

Scams still rely on coerced labor

Automation does not end the use of coerced labor in scams. Thousands of people remain trapped in scam compounds and are forced to do this work every day. The findings point to several ways to respond. Governments can work more closely across borders by aligning anti-trafficking and cybercrime laws and sharing intelligence to break up the networks behind these operations, not just arrest low-level recruiters.

Authorities can also do a better job identifying victims and protecting them, treating people forced into scams as victims and giving them legal protection and support to restart their lives. Better oversight of labor migration, more ethical recruitment, and basic digital literacy can reduce vulnerability before people are pulled in. Cutting off the money that keeps these operations running is another necessary step.