From experiment to production, AI settles into embedded software development

AI-generated code is already running inside devices that control power grids, medical equipment, vehicles, and industrial plants.

AI moves from experiment to production

AI tools have become standard in embedded development workflows. More than 80% of respondents to a new RunSafe Security survey say they currently use AI to assist with tasks such as code generation, testing, or documentation. The rest, just under 20%, say they are actively evaluating AI, and no respondents report avoiding it entirely.

The study shows varying depth of use. About half describe their AI integration as moderate, while more than a quarter report extensive use. Only a small fraction say they have no AI usage at all. Taken together, the figures suggest a shift from trial projects to routine reliance.

Testing leads early AI use

Teams are selective about where AI fits into the development lifecycle. Testing and validation rank as the most common use cases, cited by 28% of respondents. Code generation follows at 19%, with deployment automation and documentation close behind. Security scanning appears lower on the list at 10%.

The survey points to cross-functional use. Product teams use AI to explore requirements, engineers integrate AI-suggested code into firmware, and security teams apply AI to scan software more quickly. These patterns show AI contributing directly to systems that control physical processes.

Production deployments accelerate

AI-generated code is already running in operational environments. Some 83% of respondents say they have deployed AI-generated code into production systems, either broadly or in limited cases. Nearly half report using AI-generated code across multiple systems.

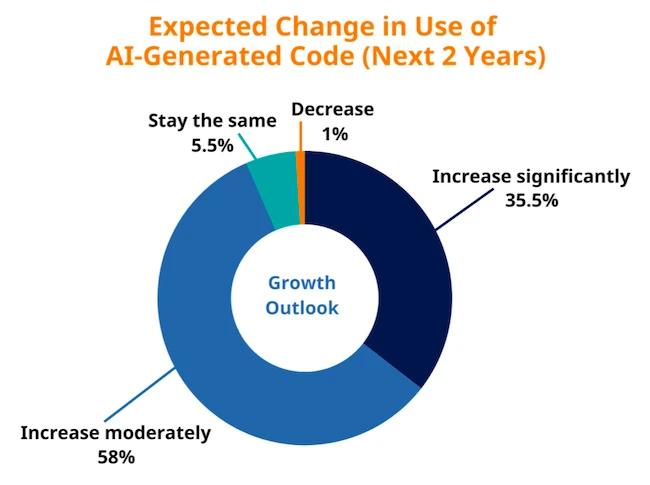

Future growth appears locked in. Some 93% of respondents expect their use of AI-generated code to increase over the next two years, with over a third predicting significant growth. Only a small minority expect usage to remain steady or decline.

Security concerns center on AI-generated code

Security tops the list of worries tied to AI use. More than half of respondents (53%) cited security as their primary concern with AI-generated code. Debugging and maintainability followed, along with regulatory uncertainty and reuse of insecure patterns.

When asked to rate cybersecurity risk, 73% described the risk from AI-generated code as moderate or higher. Most placed it in the moderate range. This reflects a view that the risk is meaningful and persistent within current development practices.

Confidence in detection capabilities appears high. Some 96% of respondents expressed confidence in their ability to find vulnerabilities in AI-generated code using existing tools.

At the same time, one third of organizations reported experiencing a cyber incident involving embedded software in the past year. These incidents were not attributed directly to AI, but they occurred in environments shaped by faster development cycles and rising code complexity.

Runtime defenses become a central focus

Some teams view runtime security as essential for handling AI-generated code. Runtime monitoring and exploit mitigation tools are widely used, reflecting a shift toward continuous protection after deployment.

Memory safety stands out as a persistent issue. Memory-related flaws account for most embedded software vulnerabilities. While some teams use memory-safe languages, traditional languages such as C and C++ remain common. AI systems trained on existing embedded code are likely to reproduce similar patterns, increasing reliance on runtime protections.
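To make the memory-safety point concrete, here is a minimal C sketch of the kind of check that is easy to leave out of a firmware parser, whether the code is written by hand or suggested by an AI tool. It is an illustration only and does not come from the survey: the frame format, the `frame_t` type, the `frame_parse` function, and the 32-byte limit are all hypothetical. The parser reads a length byte from untrusted input and validates it against both the destination buffer and the bytes actually received before copying.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical payload limit for this sketch. */
#define FRAME_MAX_PAYLOAD 32u

/* In this made-up format the length field on the wire is a single byte. */
static_assert(FRAME_MAX_PAYLOAD <= UINT8_MAX,
              "payload limit must fit in the one-byte length field");

typedef struct {
    uint8_t len;
    uint8_t payload[FRAME_MAX_PAYLOAD];
} frame_t;

/*
 * Parse a frame laid out as [len][payload ...] from an untrusted buffer.
 * Returns 0 on success and -1 if the input is malformed.
 *
 * The unsafe pattern this guards against is copying buf[0] bytes without
 * checking it, i.e. memcpy(out->payload, &buf[1], buf[0]).
 */
int frame_parse(frame_t *out, const uint8_t *buf, size_t buf_len)
{
    if (out == NULL || buf == NULL || buf_len < 1u) {
        return -1;
    }

    uint8_t claimed = buf[0];

    /* Reject lengths that would write past the end of out->payload. */
    if (claimed > FRAME_MAX_PAYLOAD) {
        return -1;
    }

    /* Reject lengths that would read past the end of the input buffer. */
    if ((size_t)claimed > buf_len - 1u) {
        return -1;
    }

    out->len = claimed;
    memcpy(out->payload, &buf[1], claimed);
    return 0;
}
```

Both checks are needed: the first prevents an out-of-bounds write into the frame, the second prevents an out-of-bounds read from the input. Runtime protections of the kind respondents describe are aimed at cases where checks like these are missing in code that has already shipped.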

Respondents consistently rated runtime protection as highly important for AI-generated code. Teams see runtime controls as a way to limit the impact of vulnerabilities that reach production systems.

Security practices rely on multiple layers

No single security method dominates. Teams combine dynamic testing, runtime monitoring, static analysis, manual code review, and external audits. This layered approach reflects recognition that AI increases code volume beyond what manual processes can cover alone.

Manual patching remains common in many environments. In large deployments, this slows response times and extends exposure. Runtime exploit mitigation tools help reduce risk during these gaps by limiting exploit paths while patches are prepared.

Another concern involves shared vulnerability intelligence. AI-generated code tends to be more customized, which reduces reuse of common libraries and patterns. This makes it harder for fixes discovered in one system to apply broadly across others.

Regulations remain fragmented

Regulatory pressure varies by sector. Many teams rely on internal standards, especially where external guidance does not address AI-generated code. Automotive teams most often follow established automotive cybersecurity standards. Industrial and energy sectors reference a mix of frameworks and government guidance.

This patchwork reflects the fact that many standards were written before AI-assisted development became common. Security teams are filling the gaps with internal rules tailored to their environments.

Spending follows risk awareness

Security investment plans show strong momentum. Most organizations expect to increase spending on embedded software security over the next two years.

When asked where improvements would help most, respondents pointed to automated code analysis, AI-assisted threat modeling, and runtime exploit mitigation. These priorities align with the challenges described throughout the report. AI accelerates development, increases code volume, and introduces new patterns. Security teams respond by seeking automation and controls that operate continuously.

“AI will transform embedded systems development with teams deploying AI-generated code at scale across critical infrastructure, and we see this trend accelerating,” said Joseph M. Saunders, CEO of RunSafe Security. “