VMware Data Recovery 1.2 released

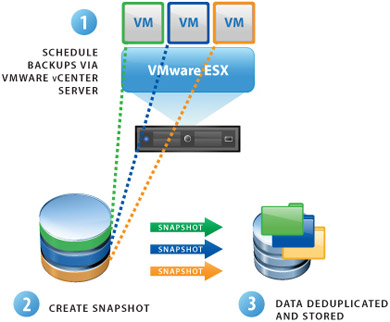

VMware Data Recovery protects against data loss in your virtual environment by enabling backups to disk as well as fast and complete recovery.

Resolved issues

The following issues have been resolved since the last release of Data Recovery.

High DRS Or vMotion Activity on Protected Virtual Machines Causes Unnecessarily High CPU Utilization

When a backup appliance protected virtual machines that were heavily affected by vMotion or DRS, an unnecessarily large number of Data Recovery objects accumulated in memory, causing high CPU utilization. This occurred because Data Recovery interpreted the results of vMotion or DRS operations as an increase in the number of objects, rather than as a move of existing objects. This issue has been resolved.
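The difference between counting a move as a new object and counting it as a relocation can be sketched as follows. This is a minimal illustration, not Data Recovery's implementation: the `InventoryEvent` type, the identifiers, and both counting functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryEvent:
    vm_id: str  # hypothetical stable identifier for a virtual machine
    host: str   # host the virtual machine is reported on


def count_by_location(events):
    """Flawed approach: every (vm, host) pair is tracked as a separate object."""
    return len({(e.vm_id, e.host) for e in events})


def count_by_identity(events):
    """Keyed on the stable ID, so a move updates an entry instead of adding one."""
    tracked = {}
    for e in events:
        tracked[e.vm_id] = e.host  # same object, new location
    return len(tracked)


events = [
    InventoryEvent("vm-101", "esx-a"),
    InventoryEvent("vm-101", "esx-b"),  # moved by vMotion
    InventoryEvent("vm-102", "esx-a"),
    InventoryEvent("vm-101", "esx-c"),  # moved again by DRS
]

print(count_by_location(events))  # 4 tracked objects for 2 virtual machines
print(count_by_identity(events))  # 2 tracked objects
```

With frequent migrations, the first count grows with every move while the second stays equal to the number of virtual machines.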

If vCenter Server Becomes Unavailable, Data Recovery Permanently Loses Connectivity

If a vCenter Server was rebooted or lost network connectivity while Data Recovery was conducting backups, Data Recovery failed to re-establish connectivity with the vCenter Server until after the currently running backup jobs completed. During this time, all new backup operations failed and the vSphere Client plug-in could not connect to the engine. Data Recovery now attempts to reconnect to the vCenter Server at regular intervals, including while backups are in progress, which minimizes potential failures.
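Reconnecting at regular intervals is a common retry pattern. The sketch below shows the general idea only. The `reconnect` function, its parameters, and the `connect` callable are hypothetical and do not represent the Data Recovery or vSphere API.

```python
import time


def reconnect(connect, interval_seconds=30.0, max_attempts=5, sleep=time.sleep):
    """Call connect() until it succeeds, waiting a fixed interval between tries.

    connect is any callable that returns a session object or raises
    ConnectionError. The last failure is re-raised once attempts run out.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return connect()
        except ConnectionError:
            if attempt == max_attempts:
                raise
            sleep(interval_seconds)  # wait before the next attempt
```

Running such a loop independently of the backup jobs, for example on a background thread, is what would allow connectivity to return before the running jobs finish.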

Data Recovery Backups May Fail To Make Progress At The Start Of The Task

During an incremental backup of a virtual machine, Data Recovery would fail to make progress and the backup appliance would show 100% CPU utilization. This condition persisted until the appliance was restarted. Even after the backup appliance was restarted, no further progress was made on the incremental backup. This was the result of how the backup appliance used information about the last backup to create a new backup. This issue has been resolved.

The Backup Appliance Crashes When Backing Up Certain Disk Configurations

Virtual machines can have disks whose sizes are not whole multiples of one megabyte. For example, it is possible to create a disk that is 100.5 MB. Disks created using the vSphere Client are always a multiple of one MB. Some virtual machines that included disks whose size was not a multiple of one MB caused the backup appliance to crash. These disk sizes are now handled properly.
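To illustrate why such sizes need care, the sketch below walks a disk in 1 MB chunks and handles a shorter final chunk. It is an assumption-laden example: the `chunk_ranges` helper and the 1 MB chunk size are hypothetical and say nothing about how the backup appliance actually reads disks.

```python
MB = 1024 * 1024


def chunk_ranges(disk_size_bytes, chunk_size=MB):
    """Yield (offset, length) pairs covering the disk, including a short tail."""
    offset = 0
    while offset < disk_size_bytes:
        # The final chunk may be smaller than chunk_size.
        length = min(chunk_size, disk_size_bytes - offset)
        yield offset, length
        offset += length


# A 100.5 MB disk: 100 full chunks plus one half-megabyte tail.
disk_size = 100 * MB + MB // 2
ranges = list(chunk_ranges(disk_size))
print(len(ranges))   # 101
print(ranges[-1])    # (104857600, 524288)
```

Code that computes the chunk count with integer division alone would stop at 100 chunks and miss the final half megabyte, or read past the end of the disk if it assumed every chunk was full.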

Data Recovery Does Not Properly Track Individual Disks For Backup

Data Recovery supports backing up a subset of disks in a virtual machine. Because of the way individual disks were tracked for backup, problems occurred if a snapshot changed the name of a disk. In such a case, Data Recovery did not match the disk with the one selected in the backup job, showed the disk as selected, and did not back up the disk. This issue no longer occurs.

Data Recovery Fails To Check Disk Hot Add Compatibility And To Clean Up After Failures

Data Recovery attempts to hot add the disks of the virtual machine being backed up to the backup appliance. In some environments, the block size of the datastore hosting the client disks was larger than the block size of the appliance's datastore. In that case, if a virtual machine's disk was larger than the size supported by the appliance, the hot add failed. This failure could occur after some disks had already been hot added successfully, and Data Recovery did not hot remove those disks. Data Recovery now checks datastore block sizes and disk sizes to ensure hot add operations will complete successfully before attempting the hot add.
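A check-before-acting approach of this kind can be sketched as follows. Everything here is illustrative: the function names are hypothetical, and the block-size table is an assumption based on commonly cited approximate VMFS-3 file size limits, not a value taken from these release notes.

```python
GB = 1024 ** 3

# Assumption: approximate maximum file size per datastore block size (in MB).
MAX_FILE_SIZE_BY_BLOCK_MB = {1: 256 * GB, 2: 512 * GB, 4: 1024 * GB, 8: 2048 * GB}


def disks_blocking_hot_add(disk_sizes, appliance_block_size_mb):
    """Return the names of disks too large for the appliance's datastore."""
    limit = MAX_FILE_SIZE_BY_BLOCK_MB[appliance_block_size_mb]
    return [name for name, size in disk_sizes.items() if size > limit]


def hot_add_all(disk_sizes, appliance_block_size_mb, hot_add):
    """Validate every disk first, so no disk is attached if any would fail."""
    blocked = disks_blocking_hot_add(disk_sizes, appliance_block_size_mb)
    if blocked:
        raise ValueError("cannot hot add: " + ", ".join(blocked))
    for name in disk_sizes:
        hot_add(name)  # hypothetical callable that attaches one disk
```

Validating all disks up front avoids the partial state described above, where some disks are attached and then left behind when a later one fails.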

Data Recovery Does Not Support Longer CIFS Passwords

Data Recovery 1.1 did not support CIFS passwords longer than 16 characters. With this release, Data Recovery supports CIFS passwords of up to 64 characters.

The Backup Appliance Crashes If Disks Become Full

In some cases, Data Recovery crashed if destination disks became full during backup. This no longer occurs.

Last Execution Shows Incorrect Time Stamp For Completed Jobs

The backup tab displays information about backups, including the last time each backup job was completed. The date and time shown for the last completion of a job were incorrect. This has been fixed.

Data Recovery vSphere Client Plug-In Failed to Connect to vCenter Server

Attempts by the vSphere Client plug-in to connect to the backup appliance failed if the vCenter Server's inventory contained a large number of virtual machines. This typically occurred when more than 1000 virtual machines were present in the inventory. This issue has been fixed.

Adding Virtual Disks Causes Problems With Snapshots And Subsequent Backups

If a virtual machine had an existing snapshot and a new virtual disk was added to the virtual machine, the next backup succeeded, but a snapshot was left behind. This snapshot caused subsequent backups to fail. This issue has been resolved.

Backups Failed to Complete As Expected

In certain situations, backups would fail to complete as expected, and no subsequent backups would occur. This issue has been resolved.

Restore Wizard Does Not Enforce Valid Datastore Selections

Using the restore wizard, it was possible to select a datastore that was not a valid location for restoring a virtual machine. Wizards have been modified so that only valid selections are offered.

File Level Restore (FLR) In Admin Mode Mishandles Some Password Characters

FLR in admin mode failed if the password contained ‘/’. This occurred because FLR was using the ‘/’ character as a delimiter. As a result, passwords that included ‘/’ resulted in incomplete passwords being sent to vCenter Server for authentication. FLR now handles ‘/’ in passwords correctly.
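The effect of a delimiter colliding with password content can be shown with a small example. The `user/password` string format and both parsing functions below are hypothetical. The actual format FLR used internally is not described here.

```python
def parse_naive(credential):
    """Flawed: splits on every '/', so the password is cut at its first '/'."""
    parts = credential.split("/")
    return parts[0], parts[1]


def parse_safe(credential):
    """Splits only on the first '/', keeping the rest of the password intact."""
    user, password = credential.split("/", 1)
    return user, password


credential = "admin/pa/ss"
print(parse_naive(credential))  # ('admin', 'pa')
print(parse_safe(credential))   # ('admin', 'pa/ss')
```

Limiting the split still assumes the user name contains no ‘/’. Passing the user name and password as separate fields avoids the delimiter problem entirely.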

FLR Fails To Mount Virtual Disks Due To Connection Delimiter

In some cases, mounting a virtual disk through the FLR client failed due to a connection delimiter. This has been fixed.

Windows FLR Fails To Mount Multiple Disks With The Same Name

It is possible to back up a virtual machine with multiple disks with the same name. In such a case, each disk is associated with a vmdk located on a different datastore. When attempting to mount such disks through the Windows FLR client, only one of the virtual disks was mounted. This has been fixed and all disks will now be mounted.
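One plausible way for same-named disks to collapse into a single mount is a lookup keyed on the disk name alone. The release notes do not state the actual cause, so the sketch below is only an illustration, with hypothetical datastore names, paths, and functions.

```python
# Two disks share a file name but live on different datastores.
disks = [
    ("datastore1", "vm01/vm01.vmdk"),
    ("datastore2", "vm01/vm01.vmdk"),
]


def index_by_name(disks):
    """Flawed: a later disk with the same name overwrites the earlier one."""
    return {name: (datastore, name) for datastore, name in disks}


def index_by_datastore_and_name(disks):
    """Keyed on (datastore, name), so same-named disks remain distinct."""
    return {(datastore, name): (datastore, name) for datastore, name in disks}


print(len(index_by_name(disks)))                # 1
print(len(index_by_datastore_and_name(disks)))  # 2
```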