Open-source flow monitoring with SENSOR: Benefits and trade-offs

Flow monitoring tools are useful for tracking traffic patterns, planning capacity, and spotting threats. But many off-the-shelf solutions come with steep licensing costs and hardware demands, especially if you want to process every packet. A research team at the University of Tübingen has built an alternative: an open-source, cost-effective, and distributed platform for collecting unsampled IPFIX data.

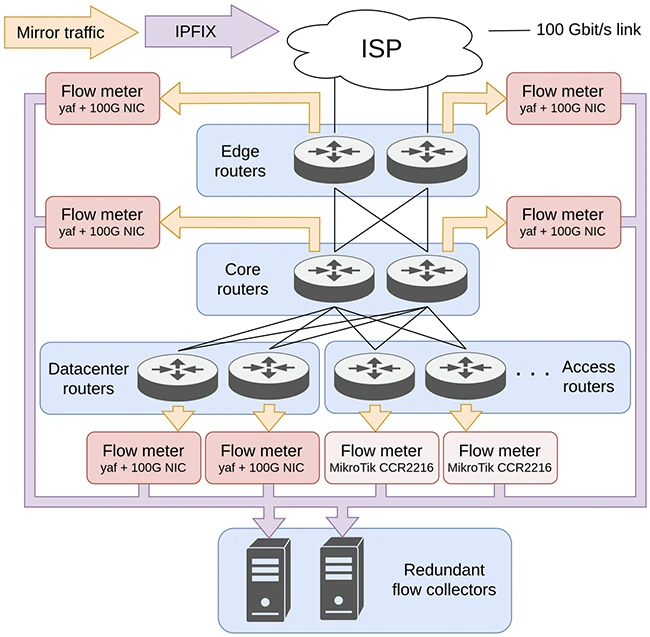

Their system, called SENSOR, uses open-source software and vendor-agnostic components to monitor traffic at multiple points in the university’s network. This setup captures internal flows that would otherwise go unnoticed if monitoring were only done at the perimeter.

The approach avoids the need for expensive routers with built-in flow exporters and instead uses mirror ports and general-purpose servers running software-based flow meters.

Flow monitoring platform at the University of Tübingen

How it works

At the core of the platform are two types of flow meters: high-performance servers running the open-source tool yaf, and MikroTik routers using a custom configuration to generate IPFIX from mirrored traffic.

For backbone and datacenter traffic, the team uses servers with 100 Gbit/s NICs and yaf configured to use the PF_RING library for packet processing. yaf supports plugins for deep packet inspection, application labeling, and even DHCP-based OS identification.

For access-level monitoring, the team turned to MikroTik routers. These devices don’t normally support passive flow monitoring from mirror ports. But the researchers found a workaround. By creating a bridge and forcing traffic through RouterOS’s firewall stack, they were able to get MikroTik’s “traffic flow” feature to generate usable IPFIX data, even though the packets weren’t originally forwarded by the device. This lets them use a relatively low-cost router with a powerful CPU to collect flow data without modifying the rest of the network.

Collecting and processing flows

On the collection side, the platform uses several tools in parallel: nfacctd to replicate flows, nfdump and SiLK for analysis and storage, and GoFlow2 for format conversion. GoFlow2 standardizes flows into JSON or Protobuf and sends them to Apache Kafka, which lets downstream components process them as events. The team also uses flowpipeline to build analysis workflows, including anonymization and metrics extraction.
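As a rough illustration of what a downstream consumer might do with JSON flow records, the sketch below parses newline-delimited records and sums bytes per source address. The field names (`src_addr`, `bytes`) and the sample records are assumptions made for illustration; the real schema depends on the GoFlow2 version and configuration, and in SENSOR the records would arrive through Kafka rather than from an in-memory list.

```python
import json
from collections import Counter

def bytes_per_source(lines):
    """Sum byte counts per source address from JSON flow records (one per line)."""
    totals = Counter()
    for line in lines:
        try:
            flow = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed records instead of aborting the run
        if not isinstance(flow, dict) or "src_addr" not in flow:
            continue  # not a flow record in the assumed shape
        totals[flow["src_addr"]] += int(flow.get("bytes", 0))
    return totals

# Hypothetical sample records using documentation address ranges.
sample_lines = [
    '{"src_addr": "192.0.2.10", "dst_addr": "198.51.100.5", "proto": "TCP", "bytes": 1500}',
    '{"src_addr": "192.0.2.10", "dst_addr": "198.51.100.7", "proto": "UDP", "bytes": 300}',
    '{"src_addr": "192.0.2.20", "dst_addr": "198.51.100.5", "proto": "TCP", "bytes": 900}',
    'not json',
]

# Print sources from heaviest to lightest.
for src, total in bytes_per_source(sample_lines).most_common():
    print(src, total)
```

Because each record is handled independently, the same function could be fed from a Kafka consumer loop or a file without changes.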

By mixing and matching collectors, the system can support different use cases and allow for custom pipelines. Each component is replaceable and modular, which helps tailor the platform to performance needs or analysis goals.
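The idea of swappable stages can be sketched in a few lines. This is not how flowpipeline is configured or implemented; it is a minimal Python illustration, with hypothetical stage names and assumed field names (`src_addr`, `dst_addr`, `proto`), of chaining a filter stage and a prefix-truncation anonymization stage.

```python
import ipaddress

def anonymize(flows, v4_prefix=24, v6_prefix=48):
    """Truncate addresses to a network prefix so individual hosts are hidden."""
    for flow in flows:
        out = dict(flow)  # copy so the caller's record is not modified
        for key in ("src_addr", "dst_addr"):
            if key in out:
                ip = ipaddress.ip_address(out[key])
                prefix = v4_prefix if ip.version == 4 else v6_prefix
                net = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
                out[key] = str(net.network_address)
        yield out

def only_protocol(proto):
    """Build a stage that keeps only flows of the given protocol."""
    def stage(flows):
        for flow in flows:
            if flow.get("proto") == proto:
                yield flow
    return stage

def run_pipeline(flows, *stages):
    """Feed flows through each stage in order and collect the result."""
    for stage in stages:
        flows = stage(flows)
    return list(flows)

flows = [
    {"src_addr": "192.0.2.77", "dst_addr": "2001:db8:1:2::5", "proto": "UDP"},
    {"src_addr": "192.0.2.78", "dst_addr": "198.51.100.9", "proto": "TCP"},
]
result = run_pipeline(flows, only_protocol("UDP"), anonymize)
print(result)
```

Reordering or replacing a stage only changes the argument list of `run_pipeline`, which is the property the modular design aims for.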

Why it matters

Most flow monitoring setups rely on embedded flow meters that are locked to a vendor and require powerful, expensive devices. SENSOR shows it’s possible to build a flexible and scalable alternative using only open tools and commodity hardware. It also allows operators to monitor internal traffic more comprehensively, not just what crosses the network border.

Boris Lukashev, CTO at Semper Victus & InferSight, told Help Net Security that the ability to collect unsampled flows with open tools is valuable, but context matters.

“Sampling works well for network engineering but is less effective for security operations. Vendor approaches vary, and if someone exfiltrates a few bytes over a segment sampled at 100:1, you might miss it entirely. Some vendors still capture it even when ‘off-sample’ because it’s stored in the device’s internal flow tables.”
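To put a number on that risk, the sketch below estimates how likely a small flow is to be missed entirely. It assumes each packet is sampled independently with probability 1/N, which is a simplification: real devices may sample deterministically (every Nth packet) or, as the quote notes, track flows in other ways.

```python
def miss_probability(packets_in_flow, sampling_rate):
    """Chance that none of a flow's packets is sampled at 1-in-`sampling_rate`.

    Assumes independent random sampling per packet (an idealization).
    """
    if packets_in_flow < 0 or sampling_rate < 1:
        raise ValueError("need packets_in_flow >= 0 and sampling_rate >= 1")
    return (1 - 1 / sampling_rate) ** packets_in_flow

# Flows of a few, a few dozen, and a few hundred packets at 100:1 sampling.
for n in (3, 50, 500):
    print(f"{n:>4} packets: missed with probability {miss_probability(n, 100):.3f}")
```

Under this model, a three-packet exfiltration attempt at 100:1 sampling goes unseen about 97% of the time, while unsampled collection (a rate of 1) never misses it.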

Lukashev says the paper makes a fair point, but the pipeline the researchers built feels heavier than necessary unless you need advanced enrichment with deterministic latency.

“Full line-rate NIDS taps are typically beyond the scope of most network engineering teams that support security, and they require architectural planning before purchase to be effective. Flow collection is much simpler as long as it starts at the actual packet processing point, whether that’s the dataplane, ring 0, or wherever the work happens,” Lukashev explained.

The system is already deployed at the University of Tübingen, and the team plans to expand their work by benchmarking the performance limits of the flow meters and packet processing libraries involved. Understanding where those limits lie will help ensure that monitoring stays unsampled even at higher traffic volumes.

Scaling beyond small networks

David Montoya, Global Business Development Manager OT/IoT at Paessler, calls SENSOR “a great example of how to use open-source technologies to overcome a lack of enterprise-grade equipment while still granting access to in-depth traffic analysis.” But he also cautions that it is “based on technologies that are not the first choice for a large network or an ISP.”

While MikroTik routers play a key role in SENSOR, Montoya points out that these devices are aimed at small and medium-sized businesses rather than large enterprises. They fill routing gaps effectively and affordably, but he sees limited use for them at scale compared to equipment from Cisco, Juniper, Huawei, or Nokia.

He notes that enterprise-class routers from the major vendors already ship with embedded IPFIX, NetFlow v9, or jFlow, along with the processing power to handle traffic sampling. These can connect directly to collectors and integrate with other monitoring protocols such as SNMP for volume tracking, RMON for data corruption detection, and QoS tools for measuring jitter and packet loss. Together, these provide a broader operational view.

The limitation with SENSOR, he says, is that while it offers detailed flow visibility, it doesn’t unify these other protocols in one interface. “Something like this is fine for small networks,” Montoya explains, “but it certainly complicates troubleshooting and oversight on larger networks.”

Montoya also sees potential for SENSOR to expand beyond historical analysis by adding real-time alerting. “The paper doesn’t describe whether the flow collectors can trigger alarms for anomalies like rapidly spiking UDP traffic, which could indicate a DDoS attack in progress. Adding real-time triggers like this would be a valuable enhancement that makes SENSOR more operationally useful for network teams.”
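One simple form such a trigger could take is a threshold against a rolling baseline. The sketch below is not part of SENSOR; the class name, window size, multiplier, and minimum volume are all hypothetical values chosen for illustration. It flags an interval when UDP bytes exceed a multiple of the recent average.

```python
from collections import deque

class UdpSpikeDetector:
    """Flag intervals whose UDP byte count far exceeds the recent average."""

    def __init__(self, window=3, factor=4.0, min_bytes=1000):
        self.history = deque(maxlen=window)  # recent non-spike intervals
        self.factor = factor                 # how many times the baseline counts as a spike
        self.min_bytes = min_bytes           # ignore spikes in negligible traffic

    def observe(self, udp_bytes):
        """Record one interval's UDP bytes; return True if it looks like a spike."""
        is_spike = False
        if len(self.history) == self.history.maxlen:
            baseline = sum(self.history) / len(self.history)
            is_spike = (udp_bytes >= self.min_bytes
                        and udp_bytes > self.factor * baseline)
        if not is_spike:
            # Keep spikes out of the baseline so an ongoing attack
            # does not quickly become the new "normal".
            self.history.append(udp_bytes)
        return is_spike

detector = UdpSpikeDetector()
per_interval_udp_bytes = [100, 120, 110, 5000, 115]  # made-up interval totals
alerts = [detector.observe(b) for b in per_interval_udp_bytes]
print(alerts)
```

A production detector would need per-destination baselines and tuning against real traffic, but the event-stream design already in place would give it a natural point to attach.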

Hardware and performance caveats

Leo Valentić, CEO at RESILIX, highlights hardware-related constraints in SENSOR’s design. “The main limitation is that the MikroTik bridge workaround sends mirrored traffic through the CPU instead of dedicated switching hardware. This limits performance, makes the setup less robust, and creates a bottleneck in high-throughput environments that can lead to dropped packets or incomplete flow data, which undermines the goal of unsampled monitoring.”

Valentić notes there is also a risk of drops or inconsistencies in flow exports, since the device is not designed to handle passive mirrored traffic this way and can behave unpredictably under load.

“Finally, the approach is fragile. It relies on precise bridge and firewall configurations to push traffic through the RouterOS stack, which makes it sensitive to updates, misconfigurations, or hardware changes. In production environments where reliability is critical, this would be hard to justify,” he concluded.

For now, SENSOR is best seen as a capable, budget-friendly option for historical analysis and targeted monitoring, with room to grow if real-time alerting and greater scalability are added.
