OpenAI’s gpt-oss-safeguard enables developers to build safer AI

OpenAI is releasing a research preview of gpt-oss-safeguard, a set of open-weight reasoning models for safety classification. The models come in two sizes: gpt-oss-safeguard-120b and gpt-oss-safeguard-20b. Both are fine-tuned versions of the gpt-oss open models and are available under the Apache 2.0 license, allowing anyone to use, modify, and deploy them freely.

OpenAI developed the models in collaboration with Discord, SafetyKit, and Robust Open Online Safety Tools (ROOST).

What these models do

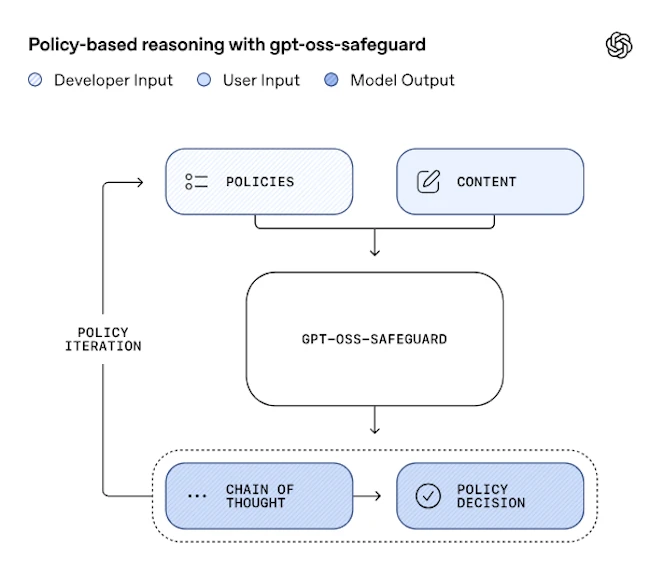

gpt-oss-safeguard uses reasoning to apply a developer-provided policy at inference time, classifying user messages, completions, or chats based on that policy. Developers can choose and change the policy as needed, keeping results aligned with specific use cases. The model also produces a transparent chain of thought, allowing developers to see how it reached each decision.

Because the policy is supplied during inference instead of during training, teams can update policies quickly. This approach, first built for OpenAI’s internal use, is more flexible than training a fixed classifier on large labeled datasets.
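Because the policy is an input rather than something baked into the weights, a classification request can be assembled from two strings. The sketch below assumes a chat-style message layout in which the policy goes in the system message and the content to classify goes in the user message. That layout, the `build_messages` helper, and the example spam policies are illustrative assumptions, not a documented interface.

```python
from typing import Dict, List

# Hypothetical example policy; real policies would be far more detailed.
SPAM_POLICY_V1 = (
    "Classify the content as VIOLATING (1) or NON-VIOLATING (0).\n"
    "VIOLATING: unsolicited promotion, repeated links, or requests to pay off-platform.\n"
    "Answer with a single digit."
)

def build_messages(policy: str, content: str) -> List[Dict[str, str]]:
    """Pair a policy with the content to classify (assumed chat-message layout)."""
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": content},
    ]

# Updating the policy means editing a string, not retraining a model.
SPAM_POLICY_V2 = SPAM_POLICY_V1 + "\nAlso VIOLATING: links to unverified crypto giveaways."

msgs_v1 = build_messages(SPAM_POLICY_V1, "Win free coins at example.com!")
msgs_v2 = build_messages(SPAM_POLICY_V2, "Win free coins at example.com!")
```

The same content can be re-classified under the new policy immediately; how the messages are sent to a served model depends on the deployment and is outside what the text describes.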

How it works

The model takes two inputs: a policy and the content to classify. It outputs a policy decision and its reasoning. Developers choose how to use those decisions in their safety pipelines. This setup is useful when:

- Potential harms are new or changing and policies must adapt quickly.

- The domain is complex and difficult for smaller classifiers to capture.

- There aren’t enough samples to train reliable classifiers for each risk.

- Latency matters less than getting accurate, explainable labels.
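How the decision and reasoning are consumed is up to the developer. The following sketch assumes the policy instructs the model to return a JSON object with a `violation` field (0 or 1) and an optional `rationale`, and that the chain of thought is available separately as a string. The field names, the `Decision` type, and the `route` actions are hypothetical choices for illustration.

```python
import json
from dataclasses import dataclass

@dataclass
class Decision:
    violation: bool
    rationale: str
    reasoning: str  # chain of thought, kept for audit and human review

def parse_decision(answer: str, reasoning: str = "") -> Decision:
    """Parse the model's final answer under the assumed JSON output format."""
    data = json.loads(answer)
    violation = int(data["violation"])
    if violation not in (0, 1):
        raise ValueError(f"unexpected violation value: {violation!r}")
    return Decision(
        violation=bool(violation),
        rationale=str(data.get("rationale", "")),
        reasoning=reasoning,
    )

def route(decision: Decision) -> str:
    """Hypothetical pipeline rule: the developer decides what each label triggers."""
    if decision.violation:
        return "hold_for_review"
    return "allow"

d = parse_decision(
    '{"violation": 1, "rationale": "off-platform payment request"}',
    reasoning="The message asks the user to pay outside the platform.",
)
print(route(d))  # hold_for_review
```

Keeping the reasoning alongside the label lets reviewers check why an item was held, which is where the explainability mentioned above pays off.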

The preview release is intended to gather feedback from the research and safety community and to help improve model performance.

System-level safety and the role of classifiers

OpenAI uses a defense-in-depth approach to safety. Models are trained to respond safely, and additional layers detect and handle potentially unsafe inputs and outputs according to policy. Safety classifiers, which separate safe from unsafe content in a given domain, remain a key part of OpenAI’s safety systems and of many others.

Traditional classifiers learn from thousands of labeled examples based on predefined policies. They perform well at low latency and low cost, but collecting labeled data takes time, and updating a policy usually means retraining the classifier.

gpt-oss-safeguard takes a different approach: it reasons directly over whatever policy it is given, including new or externally written ones, so it can adapt as guidelines change. Beyond safety, it can also label content in other policy-defined ways relevant to different products and platforms.

OpenAI’s models learn to reason about safety

OpenAI’s main reasoning models now learn safety policies directly and reason about what is safe, an approach called deliberative alignment. It builds on earlier safety training and helps keep reasoning models safe as their capabilities grow. Reasoning also strengthens defense in depth because it is more adaptable than fixed training, though it requires more compute and time.

gpt-oss-safeguard is based on an internal tool called Safety Reasoner. OpenAI trained it with reinforcement fine-tuning on policy-labeling tasks, rewarding consistent judgments and teaching the model to explain them.

Safety Reasoner enables OpenAI to update safety policies in production faster than retraining a classifier would allow. It supports iterative deployment: new models often launch with stricter policies and more compute for careful application, and the policies are then adjusted as real-world understanding improves.
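The launch-strict-then-adjust cycle can be pictured as versioned policies with one active version at a time. The sketch below is a hypothetical in-memory registry, not how Safety Reasoner is actually operated. The `reasoning_effort` field stands in for the "more compute" knob and assumes the serving stack exposes levels such as "low", "medium", and "high".

```python
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass(frozen=True)
class PolicyVersion:
    version: str
    text: str
    reasoning_effort: str  # assumed knob, e.g. "low", "medium", "high"

class PolicyRegistry:
    """Hypothetical store of policy versions with a single active version."""

    def __init__(self) -> None:
        self._versions: Dict[str, PolicyVersion] = {}
        self._active: Optional[str] = None

    def publish(self, pv: PolicyVersion, activate: bool = True) -> None:
        # Publishing a new policy is a data change; no model is retrained.
        self._versions[pv.version] = pv
        if activate:
            self._active = pv.version

    def rollback(self, version: str) -> None:
        if version not in self._versions:
            raise KeyError(version)
        self._active = version

    @property
    def active(self) -> PolicyVersion:
        if self._active is None:
            raise LookupError("no active policy")
        return self._versions[self._active]

registry = PolicyRegistry()
# At launch: broad wording and more reasoning compute per item.
registry.publish(PolicyVersion("1.0-launch", "Flag anything that could plausibly ...", "high"))
# Later: narrower wording once real-world traffic is better understood.
registry.publish(PolicyVersion("1.1", "Flag only content that ...", "medium"))
print(registry.active.version)  # 1.1
```

Keeping earlier versions around makes it cheap to roll back if a relaxed policy turns out to be too permissive.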

Limitations in performance and deployment

OpenAI highlights two main limitations for gpt-oss-safeguard:

- Classifiers trained on large, high-quality datasets of tens of thousands of labeled examples can still outperform it on complex or nuanced risks. For use cases that demand maximum precision, a dedicated classifier may still be the better choice.

- Reasoning takes more compute and time, which makes it harder to use at large scale or in real-time applications.
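One way to contain that cost, offered here as an assumption rather than something the text describes, is a cascade: a cheap classifier scores every item, and only the uncertain ones are escalated to the reasoning model. Both scoring functions below are hypothetical stand-ins so the sketch runs without any model, and the 0.1 and 0.9 thresholds are arbitrary.

```python
from typing import Callable, List, Tuple

def cascade(
    items: List[str],
    fast_score: Callable[[str], float],    # cheap classifier: estimated P(violation)
    slow_classify: Callable[[str], bool],  # expensive policy-reasoning call
    low: float = 0.1,
    high: float = 0.9,
) -> Tuple[List[bool], int]:
    """Return one label per item and how many items were escalated."""
    labels: List[bool] = []
    escalated = 0
    for item in items:
        p = fast_score(item)
        if p <= low:
            labels.append(False)  # confidently safe: skip the slow model
        elif p >= high:
            labels.append(True)   # confidently violating: skip the slow model
        else:
            escalated += 1
            labels.append(slow_classify(item))  # uncertain: spend reasoning compute
    return labels, escalated

# Toy stand-ins for illustration only.
def toy_fast(text: str) -> float:
    if "free coins" in text:
        return 0.95
    if "maybe" in text:
        return 0.5
    return 0.02

def toy_slow(text: str) -> bool:
    return "pay me" in text

labels, n = cascade(["hello", "free coins!", "maybe pay me later", "maybe not"], toy_fast, toy_slow)
print(labels, n)  # [False, True, True, False] 2
```

The trade-off is that items the fast classifier mislabels with high confidence never reach the reasoning model, so the thresholds control both cost and how much of the reasoning model's accuracy is actually used.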