AI code looks fine until the review starts

Software teams have spent the past year sorting through a rising volume of pull requests generated with help from AI coding tools. New research puts numbers behind what many reviewers have been seeing in their day-to-day work.

The research comes from CodeRabbit and examines how AI co-authored code compares with human-written code across hundreds of open source projects. The findings track issue volume, severity, and the kinds of problems that appear most often. The data shows recurring risks tied to logic, correctness, readability, and security that matter directly to security and reliability teams.

“These findings reinforce what many engineering teams have sensed throughout 2025,” said David Loker, Director of AI, CodeRabbit. “AI coding tools increase output, but they also introduce predictable, measurable weaknesses that organizations must actively mitigate.”

A larger pile of issues per pull request

The study analyzed 470 GitHub pull requests reviewed using automated tooling. Of those, 320 were labeled as AI co-authored and 150 were treated as human authored. Findings were normalized to issues per 100 pull requests to allow consistent comparisons.

AI-assisted pull requests generated about 1.7 times as many issues overall. On average, AI pull requests contained 10.83 findings compared with 6.45 in human submissions. The distribution also showed wider swings. At the 90th percentile, AI pull requests reached 26 issues per change, more than double the human baseline.

This translated into heavier review loads and more time spent triaging changes before merge. Higher issue counts appeared repeatedly across the dataset, rather than clustering in a small number of outliers.
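The normalization and the headline ratio described above are simple arithmetic, and a minimal Python sketch makes them easy to check. The averages 10.83 and 6.45 are the figures reported in the study; the function name `issues_per_100` and the raw counts in its usage line are hypothetical, chosen only for illustration.

```python
def issues_per_100(total_findings: int, pull_requests: int) -> float:
    """Normalize a raw finding count to findings per 100 pull requests."""
    if pull_requests <= 0:
        raise ValueError("pull_requests must be positive")
    return 100 * total_findings / pull_requests


# Average findings per pull request, as reported in the study.
AI_MEAN = 10.83
HUMAN_MEAN = 6.45

# Ratio behind the "about 1.7 times" headline figure.
ratio = AI_MEAN / HUMAN_MEAN
print(f"AI-to-human ratio: {ratio:.2f}")

# Hypothetical raw counts, only to show how the normalization works.
print(issues_per_100(30, 150))
```

Normalizing matters here because the two cohorts differ in size (320 versus 150 pull requests), so raw totals would not be directly comparable.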

Severity rises along with volume

Severity increased alongside total findings. When normalized per 100 pull requests, critical issues rose from 240 in human-authored code to 341 in AI co-authored code, an increase of about 40%. Major issues climbed from 257 to 447, an increase of roughly 70%.

Minor and trivial issues also increased, adding to reviewer workload. More findings combined with higher severity meant that AI-assisted changes required closer inspection to prevent defects from reaching production systems.
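The percentage increases for critical and major issues can be recomputed from the per-100 counts quoted above. This is a small sketch rather than the study's own method; the helper name `percent_increase` is an assumption made for illustration.

```python
def percent_increase(baseline: float, observed: float) -> float:
    """Relative increase of observed over baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline * 100


# Findings per 100 pull requests, as quoted in the article.
critical = percent_increase(240, 341)
major = percent_increase(257, 447)

print(f"critical: +{critical:.1f}%")
print(f"major:    +{major:.1f}%")
```

The results come out near 42% and 74%, which the text rounds to about 40% and roughly 70%.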

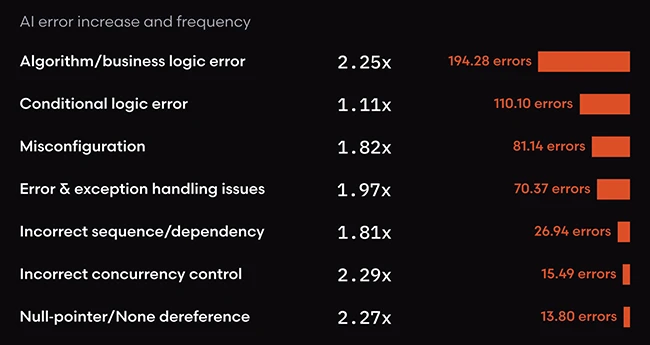

Logic and correctness dominate the gap

Logic and correctness issues formed the largest category difference. These included business logic errors, misconfigurations, missing error handling, and unsafe control flow. AI co-authored pull requests produced about 75% more logic and correctness findings overall.

Algorithm and business logic errors appeared more than twice as often in AI-generated changes. Error and exception handling gaps nearly doubled. Issues tied to incorrect sequencing, missing dependencies, and concurrency misuse also showed close to twofold increases.

Null pointer and similar dereference risks followed the same pattern. These findings connect directly to incident risk, especially in services with complex control paths or shared state.
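To make the dereference and missing-error-handling categories concrete, here is a minimal Python sketch of the kind of pattern a reviewer might flag. It is not taken from the study's dataset; the functions `find_user`, `display_name_unsafe`, and `display_name` are hypothetical.

```python
from typing import Optional


def find_user(users: dict, user_id: str) -> Optional[dict]:
    """Return the user record, or None when the id is unknown."""
    return users.get(user_id)


def display_name_unsafe(users: dict, user_id: str) -> str:
    # Risky: find_user may return None, and subscripting None
    # raises TypeError at runtime.
    return find_user(users, user_id)["name"]


def display_name(users: dict, user_id: str, default: str = "unknown") -> str:
    # Safer: handle the missing-record case explicitly before use.
    user = find_user(users, user_id)
    if user is None:
        return default
    return user.get("name", default)
```

The unsafe version works on the happy path, which is why this class of defect tends to surface only in review or in production.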

Readability and maintainability slow teams down

The largest relative increases appeared in code quality categories that affect review speed and long-term maintenance. Readability issues occurred more than three times as often in AI co-authored pull requests. Formatting problems appeared at nearly triple the human rate. Naming inconsistencies showed close to a twofold increase.

These issues rarely caused immediate outages, though they increased cognitive load for maintainers and reviewers. Over time, they contributed to harder audits, slower onboarding, and greater friction during security reviews.

Security findings rise across common weakness types

Security-related findings also increased consistently in AI-assisted code. Overall, security issues were about 1.5 times as frequent. Improper password handling stood out, with AI pull requests showing nearly double the frequency. These findings included hardcoded credentials, unsafe hashing choices, and improvised authentication logic.

Insecure object references appeared almost twice as often. Injection-style weaknesses, including cross-site scripting, also rose. Insecure deserialization findings increased by roughly 80%.

These weaknesses align with familiar categories for security teams. Their higher frequency in AI-generated code increases exposure when reviews fail to catch them.
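Two of the password-handling patterns named above, hardcoded credentials and unsafe hashing choices, have well-known safer counterparts. The sketch below uses only Python's standard library (`hashlib.pbkdf2_hmac`, `hmac.compare_digest`, `os.urandom`). The iteration count, the function names, and the environment variable `APP_DB_PASSWORD` are assumptions for illustration, not recommendations drawn from the study.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Patterns to avoid (shown only as comments):
#   DB_PASSWORD = "hunter2"                      # hardcoded credential
#   hashlib.md5(password.encode()).hexdigest()   # fast, unsalted hash

# Assumed work factor; tune it to your own hardware and policy.
ITERATIONS = 600_000


def hash_password(password: str, salt: Optional[bytes] = None,
                  iterations: int = ITERATIONS) -> Tuple[bytes, bytes]:
    """Return (salt, digest) using salted PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes,
                    iterations: int = ITERATIONS) -> bool:
    """Recompute the digest and compare it in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)


def load_db_password() -> str:
    """Read the credential from the environment instead of source code."""
    value = os.environ.get("APP_DB_PASSWORD")  # hypothetical variable name
    if not value:
        raise RuntimeError("APP_DB_PASSWORD is not set")
    return value
```

In practice a team would more likely rely on a vetted authentication library than hand-written helpers like these, which is the point behind the "improvised authentication logic" finding.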

Performance issues appear less often but spike sharply

Performance issues appeared less often overall, though AI-generated code showed a sharp increase in certain patterns. Excessive input/output (I/O) operations occurred nearly eight times more often in AI co-authored pull requests. Examples included repeated file reads and unnecessary network calls.

These patterns can degrade system behavior under load and complicate capacity planning.
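The repeated-file-read pattern mentioned above can be illustrated with a short Python sketch. It is a hypothetical example, not code from the study: the settings-file format and every function name are assumptions, and `functools.lru_cache` is just one of several ways to avoid re-reading the same file.

```python
from functools import lru_cache
from pathlib import Path


def parse_settings(text: str) -> dict:
    """Parse simple key=value lines into a dictionary."""
    pairs = (line.split("=", 1) for line in text.splitlines() if "=" in line)
    return {key.strip(): value.strip() for key, value in pairs}


def get_setting_slow(path: str, key: str) -> str:
    # Pattern to avoid: one full file read and parse per lookup.
    return parse_settings(Path(path).read_text())[key]


@lru_cache(maxsize=None)
def load_settings(path: str) -> dict:
    # The file is read once per distinct path; later calls hit the cache.
    return parse_settings(Path(path).read_text())


def get_setting(path: str, key: str) -> str:
    return load_settings(path)[key]
```

The trade-off is staleness: the cached version will not notice edits to the file until `load_settings.cache_clear()` is called, and callers share one dictionary, so they should treat it as read-only.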

Areas where humans still stumble

A small number of categories showed higher rates in human-authored code. Spelling errors appeared more often in human pull requests, likely reflecting heavier use of comments and documentation. Testability issues also appeared slightly more often in human submissions.

These differences did not change the broader trend across correctness, security, and maintainability categories.