Privacy teams feel the strain as AI, breaches, and budgets collide

Privacy programs are under strain as organizations manage breach risk, new technology, and limited resources. A global study from ISACA shows that AI is gaining ground in privacy work, with use shaped by governance, funding, and how consistently privacy is built into systems.

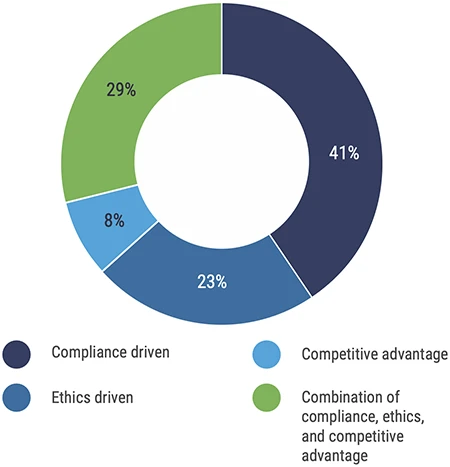

Board view of privacy programs (Source: ISACA)

AI use follows maturity, not urgency

Only a small number of organizations currently use AI for privacy tasks such as data discovery, risk assessment, and monitoring. Many others plan to explore it over the next year, often as part of broader efforts around automation and risk management. The study suggests that AI adoption tends to appear in organizations where privacy programs already have leadership backing, defined roles, and established processes.

Where boards prioritize privacy, AI use appears more frequently and follows defined direction. Larger enterprises, particularly those with broader risk and compliance functions, also report higher uptake. In smaller organizations, or those where privacy has limited visibility at the leadership level, AI adoption remains tentative.

Teams that apply privacy principles throughout system development report higher use of AI for privacy tasks. In these environments, AI supports ongoing work rather than introducing new approaches. The findings point to AI as a tool that builds on existing discipline, with adoption shaped by program maturity rather than pressure to automate.

Privacy by design remains uneven

Privacy and security teams say privacy by design is part of their development process at least some of the time, but consistent use is less common. A smaller share of teams report applying privacy by design across all projects, and that share has declined compared with earlier surveys. The findings point to uneven adoption across organizations and development teams.

Respondents working in organizations where privacy has active board backing report more consistent use of privacy by design. Budget stability shows a similar pattern, with better-funded teams reporting stronger integration of privacy into design and engineering work.

The study also suggests that privacy by design on its own does not prevent breaches. Organizations that experienced breaches report levels of design practice similar to those that did not. The data places privacy by design mainly in a governance and compliance role, with limited connection to incident prevention.

Breaches remain part of the landscape

Privacy breaches continue across industries and regions. Many privacy and security teams report dealing with a breach in the past year, and expectations for future incidents are mixed. Some organizations expect continued exposure, while others anticipate little change, reflecting uneven risk conditions across sectors.

Governance shapes how teams view that risk. Professionals in organizations where privacy lacks board priority report higher expectations of a breach in the coming year. Gaps between privacy strategy and broader business goals also appear alongside higher breach expectations, suggesting that structural alignment influences outlook as much as technical controls.

Confidence remains common, even among organizations that have experienced breaches: respondents who have handled an incident still report confidence in their ability to protect sensitive data. The findings suggest that this confidence often reflects formal controls and documented policies, with limited evidence that those controls have been tested under sustained pressure.

Training gaps contribute to risk

Training emerges as a common weakness in privacy programs. Survey participants frequently cite inadequate or outdated training as a source of privacy failures. Organizations offer privacy awareness training, though how often it is updated and how its effectiveness is measured vary widely.

Organizations that align privacy goals with broader business objectives tend to track training effectiveness more closely. These teams use multiple indicators to assess whether staff understand privacy obligations and incident reporting requirements.

Where alignment is weaker, training oversight declines. The study links this gap to higher stress levels among privacy staff and increased operational risk.

Pressure continues for privacy teams

Respondents report rising stress tied to fast-changing technology, compliance demands, and staffing constraints. Teams that experienced recent breaches report greater difficulty retaining skilled privacy professionals, adding to operational strain.

The research shows that AI does not relieve these pressures on its own: organizations using AI still report stress related to budgets and staffing. AI delivers better results when introduced into programs with strong leadership support and stable processes.

“The pressing challenges that privacy professionals face in an increasingly complex data privacy threat landscape and regulatory environment underscore how critical it is for organizations to dedicate the necessary resources to support privacy teams in their vital work,” says Safia Kazi, ISACA principal research analyst – privacy.