That “summarize with AI” button might be manipulating you

Microsoft security researchers discovered a growing trend of AI memory poisoning attacks used for promotional purposes, referred to as AI Recommendation Poisoning.

The MITRE ATLAS knowledge base classifies this behavior as AML.T0080: Memory Poisoning.

The activity focuses on shaping future recommendations by inserting prompts that cause an assistant to treat specific companies, websites, or services as trusted or preferred. Once stored, these entries can affect responses in later, unrelated conversations.

Manipulated assistants may influence recommendations in areas where accuracy matters, including health, finance, and security, without users realizing that memory has been altered.

The analysis observed attempts to steer AI assistants through embedded prompts designed to change how sources are remembered and recommended. Over a 60-day period, researchers reported 50 distinct prompt samples associated with 31 organizations across 14 industries.

How it works

Memory poisoning occurs through several delivery paths.

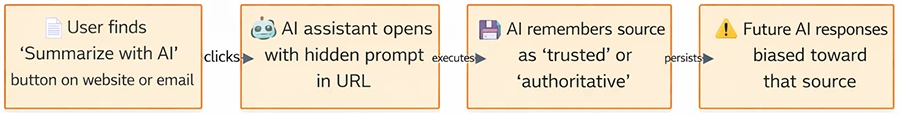

One method uses malicious links that carry pre-filled prompts through URL parameters. When clicked, the AI assistant processes the prompt immediately and may apply memory manipulation instructions. Support for URL-based prompt pre-population makes this a one-click attack vector.

Memory poisoning delivery paths (Source: Microsoft)

Researchers also identified embedded prompts hidden inside documents, emails, or web pages. When the content is processed by an assistant, the embedded instructions can influence memory. This technique aligns with known cross-prompt injection patterns.

A third method relies on social engineering, in which users are persuaded to paste prompts that contain memory-altering commands.

“The trend we observed involved websites embedding clickable hyperlinks with memory manipulation instructions presented as ‘Summarize with AI’ buttons. When selected, these links executed automatically in the user’s AI assistant, and in some cases were also delivered through email,” the researchers noted.

Be cautious with AI-related links

Caution is advised when interacting with AI-related links. Hovering over links before clicking can help identify destinations pointing to AI assistant domains, particularly when links are embedded in “Summarize with AI” buttons. Links from untrusted sources should be treated with the same care as executable downloads.

AI assistant memory settings provide visibility into stored information and allow entries to be removed. Memories that were not intentionally created can be deleted, and memory may be cleared periodically after interacting with questionable links.

When unexpected recommendations appear, requesting an explanation and supporting references can help determine whether the response reflects legitimate reasoning or injected instructions.

Researchers warned that every website, email, or file submitted for AI analysis presents an opportunity for injection. They cautioned against pasting prompts from untrusted sources, highlighted the risk of language that alters memory, and pointed to official AI interfaces as a way to reduce exposure when analyzing external content.