When trusted AI connections turn hostile

Researchers have revealed a new security blind spot in how LLM applications connect to external systems. Their study shows that malicious Model Context Protocol (MCP) servers can quietly take control of hosts, manipulate LLM behavior, and deceive users, all while staying undetected by existing tools.

MCP servers, which act as connectors that let AI systems access files, tools, and online data, can be turned into active threats. They are easy to create, hard to detect, and effective against even the most advanced AI models.

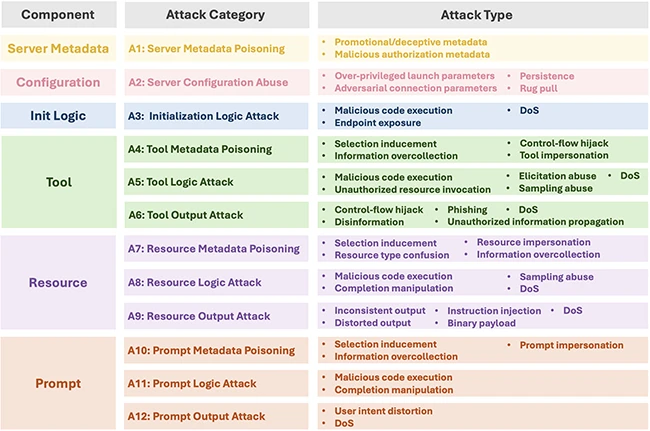

MCP server attack taxonomy (Source: Publicly available research paper)

A simple design with hidden danger

MCP is designed to make AI systems more useful by allowing them to connect with outside services in a plug-and-play way. It powers features such as file access, email integration, and web retrieval. By August 2025, more than 16,000 MCP servers were publicly available online, many built by independent developers and shared on open platforms.

That openness is also the weak point. The researchers found that there is no standardized vetting process for these servers. Anyone can publish a new one, which gives attackers a chance to hide malicious code inside seemingly useful tools. Once an AI host connects to a compromised MCP server, the attacker can influence both the system and the user without breaking any technical rules.

Steve Croce, Field CTO at Anaconda, told Help Net Security that the risk extends far beyond the LLM and the host. “Outside of the LLM and the host running your MCP server, the next big vector is the resource your MCP is connecting to,” he explains. “MCPs are a concerning vector as they have broad access to protected resources, can pass through sensitive data, and are accessible externally by design.”

Croce adds that attackers can chain MCP exploits with older, well-known methods because “the mitigations for those attacks have not fully been applied to MCPs.” He points to examples involving SQL injection or prompt injection that could lead to remote code execution, and notes that traditional supply chain attacks could target MCPs that execute generated code. He references recent vulnerabilities in GitHub MCP, mcp-remote, and Anthropic MCP Inspector as proof that the danger is spreading.

How a server turns against its host

The study identified twelve categories of attacks that target different components of an MCP server. Each component, from its configuration to its prompts, can be used to inject malicious behavior. The team built working examples of each attack to see how they performed in real-world conditions.

The results were consistent across multiple hosts and models. In most cases, the malicious servers succeeded. Attacks that exploited configuration settings, initialization logic, and tool behavior achieved a 100 percent success rate. Even simple tactics such as misleading metadata or fake prompts often tricked LLMs into trusting harmful servers.

The team also showed how these attacks could persist over time. For example, a malicious server could gain automatic execution every time a host starts, or it could update itself silently with new code. Because many MCP hosts run servers automatically after the first configuration, users might never realize they are running compromised code.

Scanners struggle to keep up

To test defenses, the researchers evaluated two open-source scanners that claim to detect unsafe MCP servers. Out of 120 malicious servers the team generated, one scanner identified only four, while the other performed better but still missed many. The more advanced scanner caught most servers that used obvious malicious code or configuration tricks, but failed to spot deceptive text, fake metadata, or subtle manipulation inside prompts and outputs.

Weibo Zhao, a co-author of the study, says detection requires a broader approach. “The malicious behavior of an MCP server manifests in its components such as source code or configuration blocks,” Zhao explains. “Successful detection should involve a holistic inspection of all six components mentioned in the paper. The inspection should not treat each component in isolation.” He adds that one useful approach would be to “cross-check whether the functionality described in a server’s metadata is consistent with what its tool code actually performs.”

Zhao notes that “to the best of our knowledge, there is currently no security tool or auditing system that covers all attacks outlined in our taxonomy,” underscoring how much work remains to secure this layer of the AI ecosystem.

The expanding attack surface for AI systems

The report emphasizes that MCP servers are now part of many AI-driven products and workflows. Their flexibility makes them powerful but also risky. Once an MCP server is installed, it often runs with the same permissions as the host application. A single malicious component could access local files, send data externally, or mislead the model into harmful actions.

The research shows that these attacks do not rely on deep system exploits or sophisticated hacking. They use the same mechanisms that make MCP work, which makes them harder to distinguish from normal behavior. In some tests, the attacks even bypassed the safety training of leading models.

What needs to change

Croce believes organizations can start improving defenses by borrowing from existing frameworks. “From a frameworks perspective, considering both the OWASP Top Ten for web applications and LLMs is a good starting point to understand what you need to protect against,” he says. “To govern and protect MCP deployments today, make sure every link in that chain is protected. That means protecting and verifying the supply chain of your coding artifacts, MCPs, and LLMs that you use.”

He adds that teams should “monitor and sanitize communications to and from the LLM, MCP, and resource, and follow minimum access policies between the LLM and MCP, MCP and resource, and the rest of your infrastructure.”

The researchers agree that MCP security cannot depend on LLM safety training alone. They call for shared responsibility across the ecosystem, including protocol designers, host developers, server platforms, and users. Their proposed safeguards include pre-release auditing of server code, configuration validation in hosts, stronger runtime checks, and improved transparency for users.

Learn more:

- When loading a model means loading an attacker

- Rethinking AI security architectures beyond Earth

- Building a stronger SOC through AI augmentation