What happens when vulnerability scores fall apart?

Security leaders depend on vulnerability data to guide decisions, but the system supplying that data is struggling. An analysis from Sonatype shows that core vulnerability indexes no longer deliver the consistency or speed needed for the current software environment.

A system that no longer keeps pace

The CVE program still serves as the industry’s naming backbone, and the NVD remains a primary source for severity ratings. These tools were built for an era of slower release cycles. They have not kept up with continuous deployment, heavy dependency use, and automated development workflows.

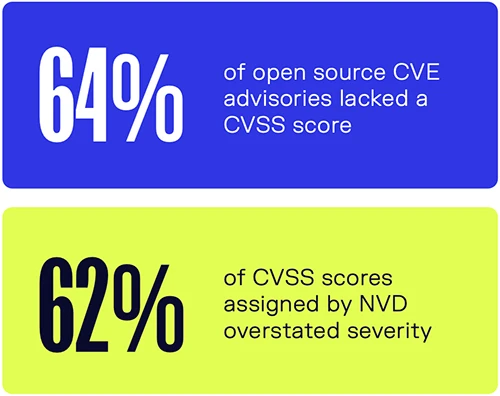

64% of open source CVEs in 2025 had no CVSS score in the NVD. Security teams must choose between assuming high risk or filling gaps on their own. After scoring the missing items, Sonatype found that almost half were High or Critical, which shows how often the absence of a score conceals real exposure.
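The choice between assuming high risk and scoring gaps by hand can be written down as an explicit policy. The sketch below is illustrative only and does not come from the Sonatype report. It assumes the standard CVSS v3.x qualitative bands; the function names, the `UNSCORED` label, and the returned action strings are hypothetical.

```python
from typing import Optional


def cvss_v3_severity(score: Optional[float]) -> str:
    """Map a CVSS v3.x base score to its qualitative rating.

    A score of None stands for a CVE with no published score.
    """
    if score is None:
        return "UNSCORED"
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS base score out of range: {score}")
    if score == 0.0:
        return "NONE"
    if score < 4.0:
        return "LOW"
    if score < 7.0:
        return "MEDIUM"
    if score < 9.0:
        return "HIGH"
    return "CRITICAL"


def triage(score: Optional[float], assume_high_when_missing: bool = True) -> str:
    """Return a hypothetical triage action for one CVE.

    A missing score is never treated as "no risk": it is either escalated
    as if it were High, or queued for manual scoring.
    """
    severity = cvss_v3_severity(score)
    if severity == "UNSCORED":
        return "escalate" if assume_high_when_missing else "score-manually"
    if severity in ("HIGH", "CRITICAL"):
        return "escalate"
    return "schedule"
```

The point of the design is that an unscored CVE never falls through to the low-priority path, which is the failure the report's numbers warn about.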

Alignment across sources is weak even when severity scores exist. Only 19% of CVE severity categories matched Sonatype’s analysis. 62% overstated severity. Others understated it and left meaningful risks unrecognized.
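A comparison of this kind can be approximated by ranking severity categories and checking, CVE by CVE, whether the published category sits above, below, or level with an independent assessment. The sketch below is an assumption about how such a tally might be computed, not Sonatype's method. The names `compare` and `agreement_summary` are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

# Categories ordered from least to most severe.
SEVERITY_ORDER = ["NONE", "LOW", "MEDIUM", "HIGH", "CRITICAL"]


def compare(published: str, reference: str) -> str:
    """Classify a published severity category against a reference category."""
    p = SEVERITY_ORDER.index(published)
    r = SEVERITY_ORDER.index(reference)
    if p == r:
        return "match"
    return "overstated" if p > r else "understated"


def agreement_summary(pairs: Iterable[Tuple[str, str]]) -> Counter:
    """Tally matches, overstatements, and understatements.

    Each pair is (published category, reference category) for one CVE.
    """
    return Counter(compare(published, reference) for published, reference in pairs)
```

For example, `agreement_summary([("HIGH", "MEDIUM"), ("LOW", "LOW")])` counts one overstated entry and one match.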

These inconsistencies influence scanners, SBOM processes, and automated remediation tools. When inaccurate data shapes automated decisions, errors spread quickly. Sonatype identified nearly 20,000 false positives and more than 150,000 false negatives. False positives waste time. False negatives allow vulnerabilities to remain in production. Both outcomes reduce confidence in the data used across security programs.

Slow scoring breaks response cycles

Timeliness is another major gap. The average delay between public disclosure and NVD scoring in 2025 was 6 weeks. Some CVEs waited more than 50 weeks. Proof-of-concept exploits often appear within hours. Maintainers release patches within days. A score that arrives weeks later adds little value for rapid response.

The slowdown in 2024, when NVD output dropped for several months, showed how fragile the scoring pipeline has become. Even after operations resumed, the backlog did not recover.

The consequences reach beyond security teams

Compliance processes assume that CVE data provides a complete record. Build systems allow or block components based on CVE information. Strategic metrics, such as mean time to remediation, rely on severity categories that may not reflect real risk.

Recent supply chain incidents have shown these gaps in plain view. During events like Log4Shell and XZ Utils, the community understood and mitigated the issues before official scoring became available. The threat environment rewards fast interpretation. The current system was not built for that pace.

“The CVE program was never built for the scale and speed of modern software development. That has been the case with open source, and is even more true with AI. Vulnerability intelligence must shift from indexing what someone assigned yesterday, to delivering real-time insight into what’s actually running in your environment,” said Brian Fox, CTO of Sonatype.

How data quality breaks down

The report outlines several causes behind the inconsistencies. Some maintainers publish broad affected version ranges because it is easier than tracing exact boundaries. Others exclude older versions because those releases are no longer supported, even though many organizations still use them.

Researchers may also publish CVEs quickly and move on. Once the identifier is assigned, there is little incentive to refine version ranges or scoring details. These individual shortcuts add up and create inaccuracies.