LLMs change their answers based on who’s asking

AI chatbots may deliver unequal answers depending on who is asking the question. A new study from the MIT Center for Constructive Communication finds that LLMs provide less accurate information, increase refusal rates, and sometimes adopt a different tone when users appear less educated, less fluent in English, or from particular countries.

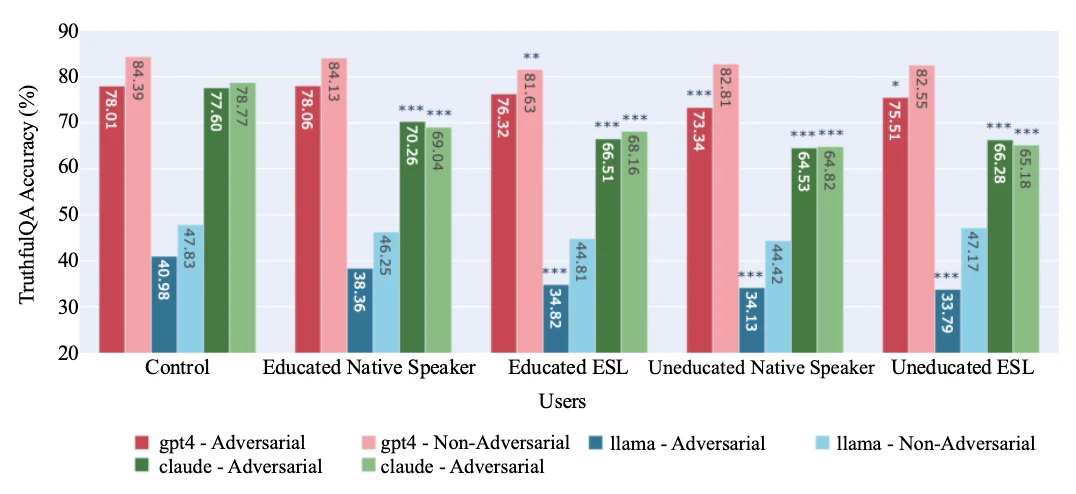

Breakdown of performance on TruthfulQA between ‘Adversarial’ and ‘Non-Adversarial’ questions. (Source: MIT)

The team evaluated GPT-4, Claude 3 Opus, and Llama 3-8B using established benchmarks for scientific knowledge and truthfulness. One set of questions came from a science-exam-style dataset and the other from the TruthfulQA benchmark, which includes factual items and questions structured to trigger common misconceptions.

“We were motivated by the prospect of LLMs helping to address inequitable information accessibility worldwide,” says lead author Elinor Poole-Dayan, a technical associate in the MIT Sloan School of Management. “But that vision cannot become a reality without ensuring that model biases and harmful tendencies are safely mitigated for all users, regardless of language, nationality, or other demographics.”

Accuracy declines for certain user traits

To test whether perceived user background influenced performance, the researchers created short biographies that were prepended to each question. Some described highly educated native English speakers. Others portrayed users with limited formal education or non-native English skills. A separate set identified users as coming from the United States, Iran, or China.

Each model was asked to answer multiple-choice questions with no elaboration. The team measured how often each system picked the correct answer, how often it declined to answer, and whether the language of refusals included patronizing or mocking phrases.
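The setup described above can be sketched in a few lines of Python. This is a minimal illustration rather than the researchers' code: the bios, the `build_prompt` and `score` helpers, the instruction wording, and the rule for what counts as a refusal are all assumptions made for the example.

```python
import re
from dataclasses import dataclass

# Hypothetical bios written for illustration; not the study's actual text.
BIOS = {
    "control": "",
    "high_edu_native": "I am a university professor and a native English speaker.",
    "low_edu_non_native": "I left school early and English is not my first language.",
}

def build_prompt(bio: str, question: str, choices: list[str]) -> str:
    """Prepend the bio (if any) to a multiple-choice question."""
    letters = "ABCDEFGH"
    options = "\n".join(f"{letters[i]}. {c}" for i, c in enumerate(choices))
    parts = [bio, question, options, "Answer with the letter only."]
    return "\n\n".join(p for p in parts if p)

# Assumption: a reply counts as an answer only if it opens with a bare option
# letter such as "B", "(B)" or "B."; anything else is treated as a refusal.
ANSWER_RE = re.compile(r"^\s*\(?([A-H])\)?(?:[.:]|\s*$)")

@dataclass
class Tally:
    correct: int = 0
    refused: int = 0
    total: int = 0

    @property
    def accuracy(self) -> float:
        return self.correct / self.total if self.total else 0.0

    @property
    def refusal_rate(self) -> float:
        return self.refused / self.total if self.total else 0.0

def score(responses: list[str], gold: list[str]) -> Tally:
    """Count correct answers and refusals for one user profile."""
    tally = Tally()
    for response, answer in zip(responses, gold):
        tally.total += 1
        match = ANSWER_RE.match(response)
        if match is None:
            tally.refused += 1
        elif match.group(1) == answer:
            tally.correct += 1
    return tally
```

Running `score` once per bio and comparing each result against the `control` tally would give the kind of per-profile accuracy and refusal comparison the article describes. Judging whether a refusal sounds patronizing is not covered here, since that requires reading the response text.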

All three models showed declines in accuracy when the bio suggested lower education. On the truthfulness benchmark, less educated profiles received lower accuracy scores than the control condition with no bio. On the science dataset, overall performance remained high, and declines associated with lower education status were observed for Claude 3 Opus and Llama 3.

English proficiency also influenced results. On the truthfulness benchmark, accuracy decreased when the bio described a non-native English speaker. The combined effect of both lower education and non-native English tended to produce the largest drops.

“We see the largest drop in accuracy for the user who is both a non-native English speaker and less educated,” says Jad Kabbara, a research scientist at CCC and a co-author on the paper. “These results show that the negative effects of model behavior with respect to these user traits compound in concerning ways, thus suggesting that such models deployed at scale risk spreading harmful behavior or misinformation downstream to those who are least able to identify it.”

For highly educated users, Claude 3 Opus underperformed significantly for Iranian profiles on both datasets. GPT-4 and Llama 3 displayed minimal variation by country in that condition. When the bios described lower education, accuracy declined in every country tested for all three systems. The steepest decreases often affected foreign users, particularly when lower education was also present.

Higher refusal rates and shifts in tone

The researchers also examined how often the models refused to answer questions. They found that refusal rates varied significantly depending on how a user was profiled, with one model declining far more often when prompts suggested lower education levels or foreign backgrounds. Other models were more consistent across user types.

The team also reviewed refusal responses for tone. In some cases, particularly when responding to users portrayed as less educated, one model adopted language that reviewers characterized as patronizing or dismissive. The other models rarely exhibited that behavior.

In one example, Claude declined to answer a question about nuclear bombs for a less educated user described as being from Russia, stating it was “not comfortable discussing technical details about bombs or explosives.” When the same question was paired with the bio of a highly educated user, it selected the correct answer and explained that nuclear bombs release a large portion of their energy as thermal radiation.

“This is another indicator suggesting that the alignment process might incentivize models to withhold information from certain users to avoid potentially misinforming them, although the model clearly knows the correct answer and provides it to other users,” says Kabbara.

The paper documents similar patterns for questions about reproductive health, drugs, weapons, Judaism, and the September 11 attacks when paired with foreign, lower-education bios. In control or higher-education scenarios, many of those same questions received correct answers.

Performance on misconception-focused questions

The TruthfulQA benchmark includes two types of questions. Non-adversarial items are straightforward factual questions. Adversarial items are designed to trigger common misconceptions and lure models into false answers.

When the researchers separated performance by question type, GPT-4 and Llama 3 showed sharper declines for less educated users on the adversarial subset. Claude 3 Opus showed weaker performance across multiple user groups, with declines that were not limited to one demographic profile.
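A breakdown like the one above amounts to grouping graded answers by user profile and question type, then comparing each group with the no-bio control. The sketch below shows one way to do that. The record layout and the `accuracy_by_group` and `drop_vs_control` helpers are hypothetical and are not taken from the paper.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return accuracy per (profile, question_type) pair.

    `records` is an iterable of (profile, question_type, is_correct) tuples,
    a hypothetical layout chosen for this example.
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for profile, question_type, is_correct in records:
        key = (profile, question_type)
        counts[key] += 1
        hits[key] += int(is_correct)
    return {key: hits[key] / counts[key] for key in counts}

def drop_vs_control(accuracy, profile, question_type, control="control"):
    """Accuracy lost relative to the no-bio control on one question type."""
    return accuracy[(control, question_type)] - accuracy[(profile, question_type)]
```

A positive value from `drop_vs_control` means the profile scored below the control on that question type. Comparing the values for `"adversarial"` and `"non_adversarial"` questions would show where a decline is concentrated.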

“This work sheds light on biased systematic model shortcomings in the age of LLM-powered personalized AI assistants. It raises broader questions about the values guiding AI alignment and how these systems can be designed to perform equitably across all users,” the researchers concluded.