Leveraging security analytics to investigate and hunt modern threats

In this interview, Gary Golomb, co-founder at Awake Security, talks about how machine learning can help develop a scalable enterprise cybersecurity plan, what technologies can make a security analyst’s job easier, and the essential building blocks of a modern SOC, among other topics.

We’ve been hearing a lot about machine learning and the ways it can empower the infosec industry. CISOs are wondering: in reality, how can machine learning help develop a scalable enterprise cybersecurity plan?

There are things that AI or ML are good for in an enterprise security plan and things they are not good for. Unfortunately, I think a lot of the marketing around machine learning and AI in security has focused on how they can be a solution to the skills crisis. The theory goes something along the lines of:

“With AI or ML, you don’t need people anymore.”

The reality is a bit different. I often see this fail in practice because of the “Left-Over Principle,” where the simple tasks are the ones that get automated, leaving only the complex ones for humans (see my prior discussion on this topic on Help Net Security).

Just as importantly, a surprising number of AI systems take a fair amount of care and feeding to work, which often includes training periods and identifying what is business-justified and what is not. Specifically, a fundamental requirement for using AI is the availability of labeled data that covers the full range of use cases and variations you intend to identify. Of course, the subtext is that human subject matter experts (usually the same people who were previously writing rules, signatures, etc.) still need to label the data in the first place. And as you might expect, this step is subject to the same types of human error as the non-AI methods. Even if you ignore that, the practical problem is access to enough samples that cover a meaningful range of real-world threat cases, as well as the oddball behaviors that look bad but are business-justified.

But to me, there is one other issue, more insidious and subtle, that applies to AI in the real world. It’s what I call discernibility, and this is where we start dissecting issues around the importance of ground-truth “rules.” CISOs and security teams would do well to consider the data source chosen for AI analysis and, most importantly, the features of that data source. This is critical because characteristics of the dataset itself are crucial for AI to function properly.

My recommendation to CISOs is to think about ML in the context of how it can augment their existing teams rather than replace them. There is a whole branch called augmented intelligence that we should be focused on, but that’s a discussion in itself. In the meantime, here are some questions organizations should consider to help determine the best use cases to apply ML or AI:

- How will the data set change over time?

- Is it possible for the data format or structure itself to change?

- More importantly, how can the values within those structures change over time? Can meanings of values change over time?

- How many sources control these possible changes, or could introduce change in other ways?

- What is the rate of change for each of the characteristics above?

- How closely will the training data match enterprise data over time?
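
One way to put a number on the last question, how closely training data matches enterprise data over time, is a distribution-drift score. The sketch below uses the population stability index (PSI), a common drift metric swapped in here purely for illustration. The feature (bytes per flow), the bin edges, the sample values, and the 0.25 "drift" threshold are all assumptions, not part of any particular product.

```python
import math
from bisect import bisect_right

def histogram(values, edges):
    """Fraction of values falling into each bin defined by the sorted `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [c / total for c in counts]

def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between a training sample and a current sample."""
    p = histogram(expected, edges)
    q = histogram(actual, edges)
    score = 0.0
    for pe, qa in zip(p, q):
        pe, qa = max(pe, eps), max(qa, eps)  # avoid log(0) for empty bins
        score += (qa - pe) * math.log(qa / pe)
    return score

# Hypothetical bytes-per-flow samples: what the model was trained on vs. today.
edges = [100, 1_000, 10_000, 100_000]
training = [200, 500, 800, 1500, 3000, 5000, 20000, 50000]
current = [150_000, 200_000, 300_000, 20000, 50000, 5000, 3000, 1500]

score = psi(training, current, edges)
print(f"PSI = {score:.3f}", "drift" if score > 0.25 else "stable")  # 0.25: assumed rule of thumb
```

Tracking a score like this per feature, on a schedule, gives a rough answer to "what is the rate of change?" as well.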

Through this lens, you start to see why log-based ML solutions tend to be useful for only a very limited set of threat cases, leading to a proliferation of tools, one for each specific case. Of the network, endpoints, and logs, logs have the least detailed data and by far the least data overall.

On the other hand, consider network traffic. The characteristics (features) of traffic used in AI-based detection models usually include some combination of protocol and destination domain characteristics, where available. To make this more concrete, let’s consider a common type of reference case: malicious redirect chains and even command-and-control (C2) activity.

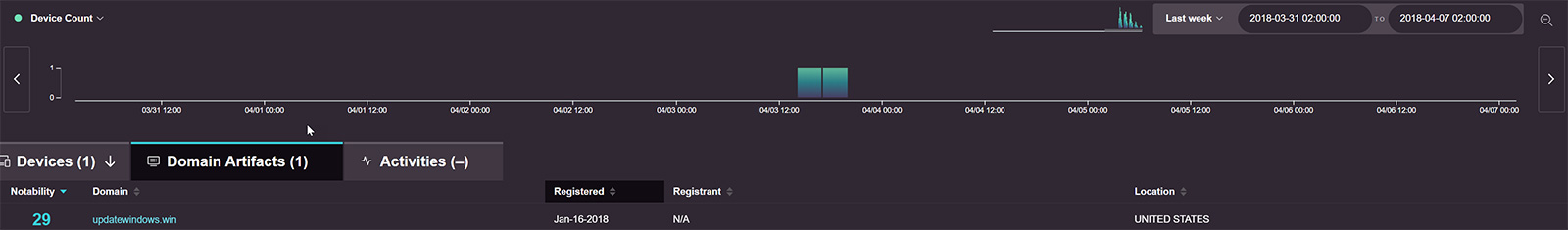

Here we see a common example of that type of activity:

In this case, Awake Security’s augmented intelligence enables the security team to look for attacker tactics, techniques, and procedures (TTPs). Specifically, this example detected devices communicating with servers advertising themselves as sites affiliated with companies like Microsoft, Google, or Facebook, while the traffic was actually going to a destination not registered by those companies. As an attacker, you can try to look like Google or Facebook, but at the end of the day, you can’t look exactly like Google or Facebook. Based on this logic, we see a long list of suspicious and malicious activities found in a real network just a couple of days before the analysis. Not to mention the highlighted domain, which had been registered only about 10 days earlier!
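
The logic described here can be approximated by a simple rule: flag a session whose advertised server name claims a well-known brand while the destination domain's registrant is not that brand's owner, and raise the severity when the domain is very new. This is a crude, hypothetical sketch, not Awake's detection logic. The brand table, the `Session` record, the assumption that registrant data is already available (for example from WHOIS/RDAP), the sample values, and the 30-day cutoff are all illustrative.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical mapping of brand keywords to the organization expected to own them.
BRAND_OWNERS = {
    "microsoft": "Microsoft Corporation",
    "google": "Google LLC",
    "facebook": "Meta Platforms, Inc.",
}

@dataclass
class Session:
    device: str
    server_name: str      # name the server advertises (e.g. TLS SNI or certificate subject)
    dest_domain: str      # registrable domain actually contacted
    registrant_org: str   # registrant organization (assumed to be looked up already)
    registered_on: date

def impersonation_findings(sessions, today, new_domain_days=30):
    """Return (device, domain, brand, age_days, severity) for brand look-alikes."""
    findings = []
    for s in sessions:
        name = s.server_name.lower()
        for keyword, owner in BRAND_OWNERS.items():
            if keyword in name and s.registrant_org != owner:
                age = (today - s.registered_on).days
                severity = "high" if age <= new_domain_days else "medium"
                findings.append((s.device, s.dest_domain, keyword, age, severity))
                break  # one finding per session is enough
    return findings

sessions = [  # illustrative values only
    Session("laptop-17", "login.microsoft.com", "microsoft.com",
            "Microsoft Corporation", date(2000, 1, 1)),
    Session("kiosk-03", "microsoft-update-cdn.com", "microsoft-update-cdn.com",
            "Privacy Protect LLC", date(2024, 3, 1)),
]
for finding in impersonation_findings(sessions, today=date(2024, 3, 11)):
    print(finding)
```

Substring matching on brand names is deliberately simplistic; a real rule would need a vetted list of each brand's legitimate domains and certificate issuers.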

How long does it take for a security analyst in an average enterprise to collect relevant information about a suspicious event in order to be able to discern the difference between true and false positives? What proven technologies can help speed up this process and make sure the analyst makes the right decision fast?

Over the last 20 to 30 years, the bulk of security investment has focused on prevention and detection tools. These solutions generate alerts, and in many cases we collect and correlate them through a SIEM or an MSSP, which hopefully delivers a smaller set of alerts. At the other end of the spectrum, the security team must “do something” with those alerts.

The challenge is that all these tools provide little context to the people tasked with investigating those threats. So, humans are left to figure out what device (not IP address) is affected, what we already know about the device, what user(s) are associated with the device, what is normal or not normal for that device and user(s), and what is normal for the devices and users most like this device or user. And that’s just on the inside. The team must then correlate open source intelligence, threat intelligence, details about the specific threat, etc. Closing this Investigation Gap manually, if it is even possible, can take hours across dozens of data sources, people, and departments.

Let’s consider an example. Say one of your detection solutions raised a critical alert with evidence of command and control activity coming from 10.1.2.3. As an analyst, one of your first questions is likely to be “What is 10.1.2.3?” It might be the device that displays the cafeteria menu, or it might be a computer in the legal department.

In this day and age, it might be the thermostat! How do you grab this context today? You will likely head over to your DHCP server (and if you are like most organizations, you have more than one, with overlapping IP ranges, so this is easier said than done). You then try to find the identity of the computer that was assigned 10.1.2.3 at the time, hoping that logs for the time of the alert exist. That might give you a MAC address or perhaps a hostname, which may still not tell you much. So off to the configuration management database (CMDB) we go. This is where things often get even more hairy: the CMDB could just be an Excel file. You hope to come out with some understanding of the device, where it sits, and perhaps the name of the user the device is assigned to.
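
The first hop of that manual workflow, resolving an IP address to a device as of the alert time, is essentially an interval lookup over DHCP lease records. A minimal sketch, assuming leases have already been exported from every DHCP server into hypothetical (server, ip, mac, hostname, start, end) records; the record layout and sample data are illustrative. Returning every matching lease, rather than the first one, reflects the overlapping-ranges problem.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Lease:
    server: str       # which DHCP server issued the lease
    ip: str
    mac: str
    hostname: str
    start: datetime   # lease start (inclusive)
    end: datetime     # lease end (exclusive)

def devices_for_ip(leases, ip, at):
    """Return every lease that covered `ip` at time `at`.

    More than one result means overlapping scopes on different DHCP servers,
    and the analyst still has to disambiguate (for example, by network segment).
    """
    return [lease for lease in leases if lease.ip == ip and lease.start <= at < lease.end]

leases = [  # illustrative records from two servers with overlapping ranges
    Lease("dhcp-hq", "10.1.2.3", "aa:bb:cc:00:00:01", "cafeteria-menu",
          datetime(2024, 3, 1, 8), datetime(2024, 3, 1, 20)),
    Lease("dhcp-hq", "10.1.2.3", "aa:bb:cc:00:00:02", "legal-wks-12",
          datetime(2024, 3, 1, 20), datetime(2024, 3, 2, 8)),
    Lease("dhcp-branch", "10.1.2.3", "aa:bb:cc:00:00:03", "thermostat-2f",
          datetime(2024, 3, 1, 0), datetime(2024, 3, 2, 0)),
]
alert_time = datetime(2024, 3, 1, 21, 15)
for lease in devices_for_ip(leases, "10.1.2.3", alert_time):
    print(lease.server, lease.mac, lease.hostname)
```

Even this toy version returns two candidates for one alert, which is exactly where the CMDB and directory lookups described above begin.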

Of course, this tells you nothing about the user, so you head off to Active Directory or Outlook, or maybe your HR system. Who is this person? What is their role? What is typical behavior for them? What is not typical? Who else is like this user? The questions go on and on, but a lot of the information you’re seeking is not documented anywhere, so now you start to guess.

At the same time, you are also trying to find information from the outside. What do we know about the domain responsible for the command and control (CnC) traffic? When was it registered? Does it look like an algorithmically generated domain name? Has anyone else in my organization visited that domain? How often are people going there? What is open source intelligence (OSINT) telling me about this domain, the hosting provider, and so on? Do I have any relevant threat intelligence? I also need to cross-reference all this information against what I know about the threat itself, usually with the limited information from my SIEM or the alerting product.
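
A few of these outside-in questions can be answered mechanically. The sketch below computes the domain's age, the Shannon entropy of its leftmost label (a well-known but crude heuristic for algorithmically generated names, not a reliable DGA detector on its own), and which internal devices have contacted it. The `triage_domain` helper, the (device, domain) connection records, the sample data, and the 3.5-bit threshold are hypothetical.

```python
import math
from collections import Counter
from datetime import date

def label_entropy(domain):
    """Shannon entropy (bits per character) of the leftmost DNS label."""
    label = domain.split(".")[0].lower()
    n = len(label)
    return -sum(c / n * math.log2(c / n) for c in Counter(label).values())

def triage_domain(domain, registered_on, today, connections, entropy_threshold=3.5):
    """Summarize basic context for a suspicious domain.

    `connections` is an iterable of (device, domain) pairs seen on the network.
    """
    visitors = {dev for dev, dom in connections if dom == domain}
    entropy = label_entropy(domain)
    return {
        "domain": domain,
        "age_days": (today - registered_on).days,
        "label_entropy": round(entropy, 2),
        "looks_generated": entropy >= entropy_threshold,
        "internal_visitors": sorted(visitors),
    }

conns = [  # illustrative connection records
    ("legal-wks-12", "xk7qz9vplm2w.net"),
    ("hr-wks-4", "example.com"),
    ("legal-wks-12", "xk7qz9vplm2w.net"),
    ("thermostat-2f", "xk7qz9vplm2w.net"),
]
report = triage_domain("xk7qz9vplm2w.net", date(2024, 3, 1), date(2024, 3, 11), conns)
print(report)
```

Each field answers one of the questions above; the harder parts (OSINT, hosting reputation, threat intelligence) still require external lookups.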

As you can imagine this process is lengthy and involves a bunch of context switching. And unfortunately, in our research we found this example wasn’t the exception but the rule. We would routinely find security teams spending hours or more looking across 30 or 40 data sources to piece the information together and hope no mistakes were made along the way.

The solution is technology that precomputes the answers to these questions and puts the information security teams need at their fingertips, enabling rapid, iterative, and conclusive alert investigations and hunting. You need technology that can help existing people and processes scale by extracting signals from ground-truth data sources and then automatically pre-correlating, profiling, and tracking assets such as devices, users, and domains. As an analyst, this lets you work with these entities rather than with primitive, ephemeral data types like IP addresses, which slow you down and don’t help you make decisions. This technology also needs to capture and share procedural knowledge among the team, so it doesn’t walk out the door at SOC shift changes or when someone leaves the organization.

What are the essential building blocks of a modern SOC? What advice would you give to an enterprise CISO who wants to make their SOC as future-proof as possible?

Before I answer this question, it might be best to examine trends that are shaping how the job of defending the organization has evolved.

T1. The sprawl of devices. IoT, BYOD, VDI, and the like have led to far more devices in the organization than most security teams are even aware of. And of course, without visibility there is really no way to protect those devices, or to protect the organization from them.

T2. This problem is worsened by the fact that attacks are increasingly focused on a population of one—a single user (or a very small subset of users), a single server, etc.

T3. And finally, the attacks themselves have evolved from traditional, malware-heavy techniques to what is now called “file-less” malware, which is based on text strings and text files as opposed to compiled executable applications (which are much easier for security software to identify and analyze for maliciousness). It relies on abusing existing system tools used by administrators to further the threat actor’s malicious activities. This evolution means the traditional approach of using malware signatures or “indicators of compromise” is no longer effective at catching a determined adversary.

One only has to look at some of the recent major breaches to see the impact of these three trends, even on large organizations that have invested in security. The skills crisis and the lack of expertise in security are also massive trends that impact, and are impacted by, all three of the macro trends above.

For me, the implications are clear. The SOC of the future must be able to meet the following key requirements:

R1. Visibility that is not limited by agents, logs, or metadata, which by definition cannot be complete. Visibility by itself isn’t enough, however; we also need to give the security team the knowledge to interpret what it sees and factor that information into the organizational threat model.

R2. If the attacker is focused on targeting entities like specific devices or users, then the defenders must be able to view the environment through the same lens – i.e. as a collection of entities—with roles, attributes, behaviors, and relationships. These entities will include the devices, users, external organizations, etc. that exist in the infrastructure.

R3. Defensive and preventative techniques must evolve toward automated detection and hunting that look for anomalous behaviors and attacker TTPs spanning the entire attack campaign, rather than for specific indicators.

With that background, it is now useful to think more concretely about what an effective SOC looks like. It may be best described as shown in the figure below.

As one would expect, information is foundational. The SOC is therefore focused on three primary data sources—the network, endpoints, and log or event data. I would suggest a fourth data source which is human knowledge: tribal and procedural know-how about the environment that leaves the building when the SOC shift is over, or worse, when the person leaves the organization.

The security team needs a lot of information and, as alluded to above, can only process such a high volume using technology that can extract signal and represent the data as a collection of internal (devices, users etc.) and external entities (domains, etc.). Moreover, the technology must be able to pre-correlate those entities with their attributes, behaviors, relationships, and activity records as well as existing context such as directory services, HR systems, vulnerability, and threat data. Advances in data science can then be used to run analytics on the entities to find anomalous behaviors, what makes them unique and different in the environment, how entities are like other entities, etc.
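
As a toy illustration of the last point, finding what makes an entity unique and which entities it resembles, the sketch below compares each device's set of contacted domains with its peers using Jaccard similarity and lists the domains that only that device contacts. Jaccard similarity over destination sets is an assumption chosen for brevity, not a description of any vendor's analytics; the device names and profiles are hypothetical.

```python
def jaccard(a, b):
    """Similarity of two sets: |A ∩ B| / |A ∪ B| (defined as 1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def most_similar(profiles, device):
    """Return (score, peer) for the peer whose destinations are closest to `device`'s."""
    target = profiles[device]
    return max((jaccard(target, dests), peer)
               for peer, dests in profiles.items() if peer != device)

def unique_destinations(profiles, device):
    """Domains contacted by `device` and by no other device in `profiles`."""
    others = set().union(*(d for p, d in profiles.items() if p != device))
    return profiles[device] - others

profiles = {  # illustrative destination sets per device entity
    "legal-wks-12": {"office.com", "docusign.net", "xk7qz9vplm2w.net"},
    "legal-wks-13": {"office.com", "docusign.net"},
    "hr-wks-4": {"office.com", "workday.com"},
}
print(most_similar(profiles, "legal-wks-12"))
print(unique_destinations(profiles, "legal-wks-12"))
```

Here the closest peer is another legal workstation, and the one destination it does not share with anyone stands out immediately, which is the kind of question that only becomes cheap once data is organized by entity.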

This in turn allows the security team to satisfy requirement R3 above—moving beyond just IOCs (still necessary to handle the noise) to a model of defense based on understanding normal and abnormal, good and bad behaviors, etc.

The aggregation of information in this form also gives this capability to analysts of all skill levels and allows them to use it in real time. By comparison, today, if you are lucky enough to have experts on staff, they can certainly achieve the same result, but only with a lot of time (and, dare I say, frustration) along the way. Clearly, that is not a workable long-term model. Along similar lines, the SOC of the future also encourages and supports knowledge capture and sharing, collaboration both internally and with outside peers, and the use of tried-and-tested playbooks for detection and response.

Finally, all of this must neatly integrate with existing tools and processes within the organization to make them more effective – e.g. allowing the organization to get more value out of SIEM, threat intelligence feeds, asset tracking, and remediation tools, among others.

Many vendors use the term “advanced analytics”. What exactly does it mean in the context of a complex enterprise security architecture, and how can advanced analytics help with keeping a large network more responsive to attacks, and therefore more secure?

Unfortunately, there has certainly been a flood of tools marketed as analytics solutions. Many of them, however, fail because their data models neglect to capture the real-world entities discussed above. Instead, they force security teams to piece together information about entities at query time from low-level data like IP addresses. The problems with this approach are many:

- It’s hard to formulate the right queries

- The process must be repeated again and again

- The queries themselves can be very slow to run, impairing productivity, since they often need self-joins on huge tables—this has led to the notion of “coffee-break queries”.

From the start at Awake, we were convinced that the right approach was having the system itself find and track the entities that match the analyst’s mental model, even before a query is conceived. Then, the analyst can query the system directly about the entity of interest and get results instantaneously even if that means aggregating information gathered from days, weeks or months of observation. Most importantly the analyst does not need to piece data together manually. Think of this as similar to instantly looking up the balance on your bank account, versus having to compute it each time by tallying the transactions that have been performed on it since you opened the account.
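
The bank-balance analogy maps onto two ways of answering a question such as "how many bytes has this device sent to this domain?": recompute it from raw flow records at query time, or keep a running summary per entity that is updated as each record arrives. The sketch below shows both side by side. The flow-record shape and the `EntitySummaries` class are hypothetical, and a production system would also need persistence, time windows, and eviction.

```python
from collections import defaultdict

def bytes_out_at_query_time(flows, device, domain):
    """'Tally every transaction': scan all raw flow records for each question."""
    return sum(f["bytes_out"] for f in flows
               if f["device"] == device and f["domain"] == domain)

class EntitySummaries:
    """'Look up the balance': maintain per-(device, domain) totals as flows arrive."""

    def __init__(self):
        self.bytes_out = defaultdict(int)
        self.first_seen = {}

    def ingest(self, flow):
        key = (flow["device"], flow["domain"])
        self.bytes_out[key] += flow["bytes_out"]
        self.first_seen.setdefault(key, flow["ts"])  # assumes flows arrive in time order

    def query(self, device, domain):
        # .get() on a defaultdict does not insert missing keys
        return self.bytes_out.get((device, domain), 0)

flows = [  # illustrative flow records
    {"ts": 1, "device": "legal-wks-12", "domain": "xk7qz9vplm2w.net", "bytes_out": 1200},
    {"ts": 2, "device": "hr-wks-4", "domain": "workday.com", "bytes_out": 800},
    {"ts": 3, "device": "legal-wks-12", "domain": "xk7qz9vplm2w.net", "bytes_out": 300},
]
summaries = EntitySummaries()
for f in flows:
    summaries.ingest(f)
print(summaries.query("legal-wks-12", "xk7qz9vplm2w.net"))
```

Both paths return the same answer; the difference is that the query-time scan grows with months of raw records, while the summary lookup stays constant-time.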

We call this capability the Awake Security Knowledge Graph data model.

To build the Security Knowledge Graph, we had to decide what kinds of entities the system should model to make the analyst’s life easiest, while also improving the likelihood of a rapid, conclusive investigation. Clearly information such as an internal IP address is typically not helpful on its own, since it mixes events and attributes of multiple devices using the address at different times. Therefore, we chose a “device”—that is, a communicating endpoint, which might be a server, client, IoT or BYO device—as a foundational entity type. A device may have different IP addresses over time. But that’s just one entity. To understand the full set of entities we needed to model in the system, we combined feedback from 200+ security teams with our own deep in-house investigative expertise. In the end, we produced a model that can be summarized in the following diagram:

Or in words, analysts need to compile information about the person who uses a device (who may have multiple usernames and credentials), internal organizational entities that person is a member of, and external organizational entities they interact with. The person in question may interact with a given piece of data. The Awake model captures the relationships between these real-world entities.
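
A rough rendering of that entity model as plain data structures might look like the following. The class and field names are hypothetical and only mirror the relationships described in the text (devices whose IP addresses change over time, people with multiple usernames, internal and external organizations); they are not the actual schema of the Security Knowledge Graph.

```python
from dataclasses import dataclass, field

@dataclass
class Device:
    device_id: str
    ip_history: list = field(default_factory=list)  # (ip, first_seen, last_seen) tuples
    os: str = "unknown"

@dataclass
class Person:
    name: str
    usernames: set = field(default_factory=set)  # one person, many credentials
    devices: set = field(default_factory=set)    # device_ids this person uses
    member_of: set = field(default_factory=set)  # internal organizational units

@dataclass
class ExternalOrg:
    name: str
    domains: set = field(default_factory=set)

@dataclass
class Graph:
    devices: dict = field(default_factory=dict)
    people: dict = field(default_factory=dict)
    external: dict = field(default_factory=dict)
    interactions: set = field(default_factory=set)  # (device_id, external org name)

    def person_for_username(self, username):
        """Resolve any of a person's usernames back to the person entity."""
        return next((p for p in self.people.values() if username in p.usernames), None)

    def external_contacts_of(self, person_name):
        """External organizations that any of this person's devices interacted with."""
        person = self.people[person_name]
        return {org for dev, org in self.interactions if dev in person.devices}

g = Graph()  # illustrative entities
g.devices["dev-001"] = Device("dev-001", ip_history=[("10.1.2.3", 100, 200), ("10.1.7.9", 201, 300)])
g.people["A. Analyst"] = Person("A. Analyst", usernames={"aanalyst", "a.analyst@corp.example"},
                                devices={"dev-001"}, member_of={"Legal"})
g.external["Acme Law LLP"] = ExternalOrg("Acme Law LLP", domains={"acmelaw.example"})
g.interactions.add(("dev-001", "Acme Law LLP"))
print(g.person_for_username("a.analyst@corp.example").member_of)
print(g.external_contacts_of("A. Analyst"))
```

The point of the structure is that a question like "which outside organizations does this person deal with?" follows stored relationships instead of being rebuilt from IP addresses each time.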

The results of the Security Knowledge Graph approach exceeded even our own expectations. In our early deployments, analysts have been able to investigate alerts ten times faster than they could with other tools, and get more conclusive results. Importantly, the benefits are not restricted to just investigations either but also to behavioral detection and proactive threat hunting.

Specifically, the impact of this entity data model on the hunting process is even more dramatic, both in terms of time and the quality of the hunt. For instance, finding all devices that are running Windows 7 with a particular patch version is trivial, as the data model would have summarized the OS running on the devices by collecting large numbers of indicators present in network data. This works purely through passive network observation, without needing agents or log data. And you can take the complexity of the query up a notch: it’s also easy to find, in seconds, all such devices that have also connected to a given external domain, or to any domain with a particular registrant email. You get the picture…
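
Once devices, their inferred operating systems, and the domains they contact are already summarized as entities, the hunting query in this example reduces to a couple of set operations. A minimal sketch over hypothetical in-memory tables; the OS and patch strings ("KB-A", "KB-B" are placeholders), registrant emails, and table layout are illustrative assumptions, and passive OS fingerprinting itself is out of scope here.

```python
# Hypothetical pre-built entity summaries (normally produced at ingestion time).
device_os = {
    "dev-001": ("Windows 7", "KB-A"),
    "dev-002": ("Windows 7", "KB-B"),
    "dev-003": ("Windows 10", "KB-A"),
    "dev-004": ("Windows 7", "KB-A"),
}
device_domains = {
    "dev-001": {"xk7qz9vplm2w.net", "office.com"},
    "dev-002": {"office.com"},
    "dev-003": {"xk7qz9vplm2w.net"},
    "dev-004": {"qq9zt7lmw3.biz"},
}
domain_registrant = {
    "xk7qz9vplm2w.net": "ops@mail.example",
    "qq9zt7lmw3.biz": "ops@mail.example",
    "office.com": "hostmaster@other.example",
}

def devices_with_os(os_name, patch):
    return {d for d, (name, p) in device_os.items() if name == os_name and p == patch}

def domains_by_registrant(email):
    return {dom for dom, reg in domain_registrant.items() if reg == email}

def hunt(os_name, patch, registrant_email):
    """Devices on a given OS/patch level that contacted any domain with this registrant email."""
    suspect_domains = domains_by_registrant(registrant_email)
    return {d for d in devices_with_os(os_name, patch)
            if device_domains.get(d, set()) & suspect_domains}

print(sorted(hunt("Windows 7", "KB-A", "ops@mail.example")))
```

Note that dev-004 is found even though it never touched the originally suspicious domain; pivoting on the registrant is what surfaces it.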

It is one thing just to have the information above, but we also recognized that the utility of the Security Knowledge Graph would depend on the speed and ease with which analysts could extract answers from it. So, to enable our security analytics to execute queries like this, and even more complex ones, in seconds, we introduced the notion of pre-correlation: correlating events with their associated entities at ingestion time. Most solutions on the market cannot do this effectively because of the sheer volume of data, and hence they lack the interactivity needed for an effective hunting process.
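
In the simplest terms, pre-correlation means attaching each event to its entity when the event is ingested rather than when an analyst asks. In the hypothetical sketch below, an ingest step resolves the event's source IP to a device using whichever IP-to-device mapping is current at that moment and files the event under that device, so a later question becomes a dictionary lookup instead of a join. The event shape, the `PreCorrelator` class, and the in-order ingestion assumption are simplifications for illustration.

```python
from collections import defaultdict

class PreCorrelator:
    def __init__(self):
        self.ip_to_device = {}             # current IP -> device (e.g. fed by DHCP events)
        self.timeline = defaultdict(list)  # device -> events, resolved at ingestion time

    def observe_assignment(self, ip, device):
        """Record that `device` now holds `ip`; later assignments replace earlier ones."""
        self.ip_to_device[ip] = device

    def ingest(self, event):
        """Tag the event with the device holding its source IP right now.

        Assumes events are ingested in time order, so "right now" is when the event happened.
        """
        device = self.ip_to_device.get(event["src_ip"], "unresolved:" + event["src_ip"])
        self.timeline[device].append({**event, "device": device})

    def events_for(self, device):
        return self.timeline.get(device, [])

pc = PreCorrelator()
pc.observe_assignment("10.1.2.3", "cafeteria-menu")
pc.ingest({"ts": 1, "src_ip": "10.1.2.3", "dst": "menu-cdn.example"})
pc.observe_assignment("10.1.2.3", "legal-wks-12")  # the address changes hands
pc.ingest({"ts": 2, "src_ip": "10.1.2.3", "dst": "xk7qz9vplm2w.net"})
for dev in ("cafeteria-menu", "legal-wks-12"):
    print(dev, [e["dst"] for e in pc.events_for(dev)])
```

Because the device is resolved once, at ingestion, the same IP address appearing in two events is correctly split between two different devices without any query-time work.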