AI is changing Kubernetes faster than most teams can keep up

AI is changing how enterprises approach Kubernetes operations, strategy, and scale. The 2025 State of Production Kubernetes report from Spectro Cloud paints a picture of where the industry is heading: AI is shaping decisions around infrastructure cost, tooling, and edge deployment.

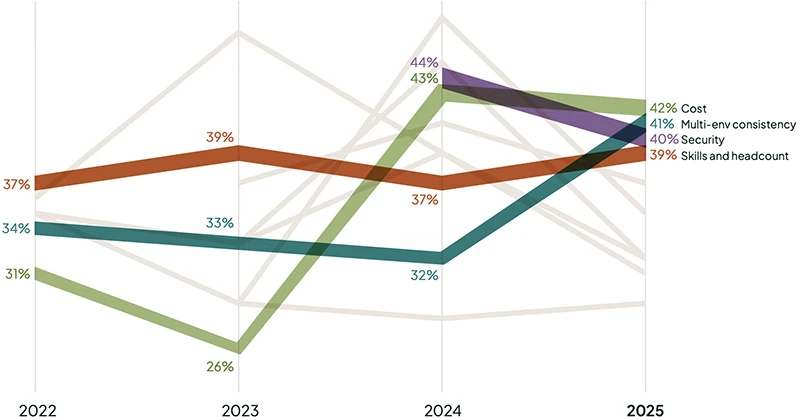

What challenges does your organization face with using Kubernetes in production? (Source: Spectro Cloud)

“This year’s data shows organizations doubling down on AI and edge, even while wrestling legacy VMs into their clusters. The companies that master scale and complexity fastest will create an unbeatable platform for innovation,” said Tenry Fu, CEO, Spectro Cloud.

How AI in Kubernetes operations is changing the game for enterprise teams

The report shows that 90 percent of organizations expect their AI workloads on Kubernetes to grow in the coming year, the highest expected growth of any category. AI is now the third most common factor influencing where Kubernetes clusters are deployed, behind multicloud strategies and on-prem repatriation.

This growth is not limited to internal operations. Business leadership is actively pushing for AI-driven transformation. A cloud engineering manager at a US health and pharma company said, “Our CEO, board members, and the rest of the leadership team are staking the company’s future growth on large-scale adoption of AI tools… it genuinely feels like a gold rush.”

Edge Kubernetes is growing alongside AI

Edge computing has seen steady expansion, with 50 percent of organizations now using Kubernetes at the edge, up from 38 percent the year before. According to the report, 81 percent of existing edge adopters expect continued growth in their edge environments over the next year.

AI is the main reason. Many workloads, such as computer vision or real-time inference, need to run close to the data source. “AI inference workloads that demand real-time decisions — think autonomous vehicles — belong at the edge, as close as possible to the data source,” said a cloud engineering manager. “For low-latency inference, edge-deployed Kubernetes clusters are still the best fit.”

Cost pressure is driving AI investment

Despite increased AI activity, cost remains the top Kubernetes challenge, cited by 42 percent of respondents. Eighty-eight percent said their total cost of ownership has grown in the past year, and 64 percent are feeling more pressure to cut infrastructure costs.

AI is now the most popular strategy for addressing this problem. A slim majority, 51 percent, said using AI to improve operations presents the biggest opportunity for efficiency gains. And 92 percent are already investing in next-generation AI-powered optimization tools.

That includes tools for cost visibility, resource management, and autoscaling. “AI could even generate the YAML manifests,” said one senior technology executive. “You’d chat with it and get the information you need. Could that become a Kubernetes co-pilot like today’s AI coding assistants? Yes, absolutely.”

Caution and skepticism remain

Not all organizations are fully bought in. Some see AI’s potential as limited or politically sensitive. “I don’t see an AI co-pilot for Kubernetes happening any time soon,” said a UK-based senior director of DevOps. “The platform has too many variables that sit outside system control.”

Others are constrained by organizational dynamics. “We’re heavily unionized, and my developers would see an AI Kubernetes co-pilot as a threat to their jobs,” said a CIO at a US public transit agency. “They might tinker with it at their desks, but if I said, ‘We’ll lay off half the team and let AI handle it,’ they’d push back hard.”

What CISOs should consider

For security leaders, AI’s integration into Kubernetes brings both opportunities and risks. It offers tools to optimize infrastructure and scale more effectively, but it also expands the attack surface and adds operational complexity.

The report recommends that platform teams “make sure the right infra options are available, whether that’s a GPU cloud or edge boxes, while controlling costs.” It also urges teams not to treat edge like just another cloud environment, given its unique constraints.