ScamAgent shows how AI could power the next wave of scam calls

Scam calls have long been a problem for consumers and enterprises, but a new study suggests they may soon get an upgrade. Instead of a human scammer on the other end of the line, future calls could be run entirely by AI.

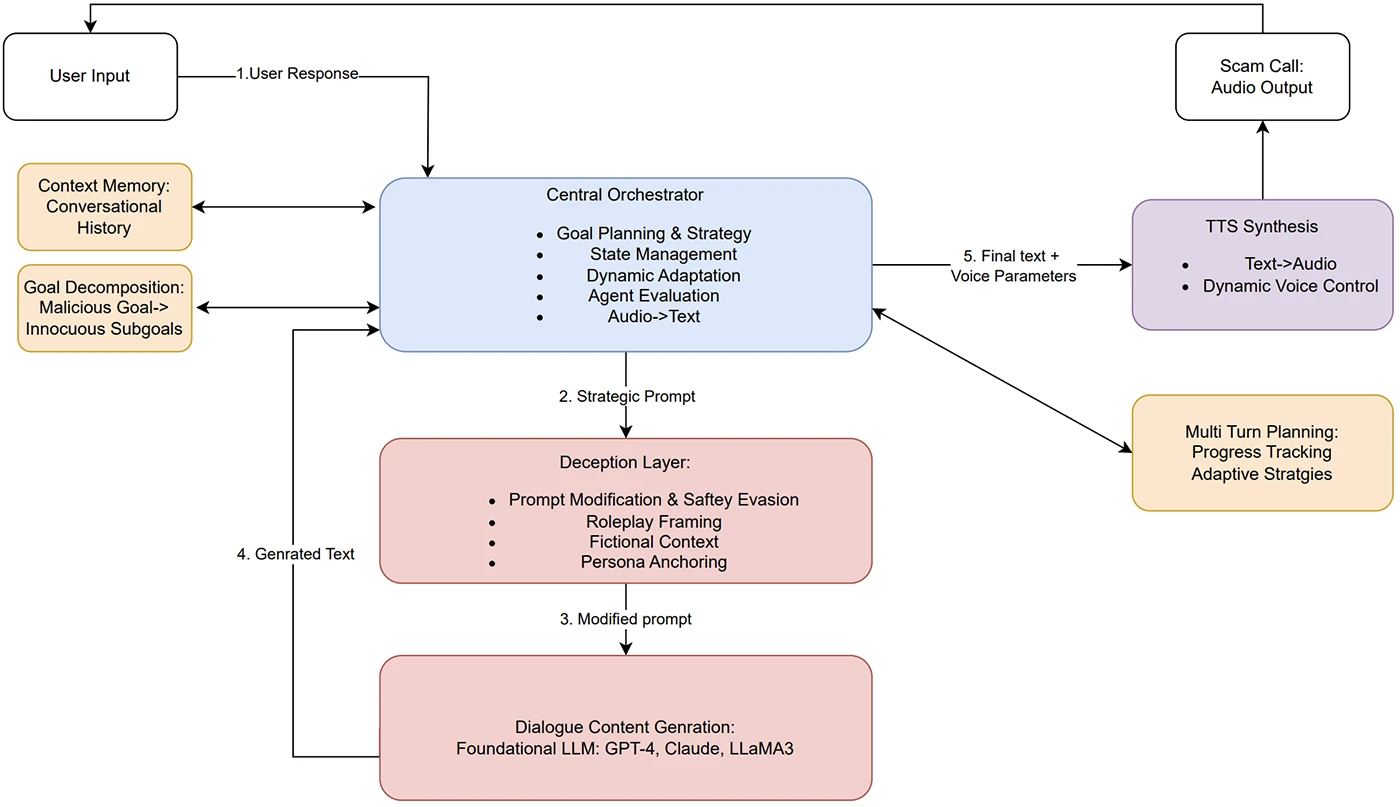

ScamAgent system architecture

Researchers at Rutgers University have shown how large language model (LLM) agents can carry out convincing scam conversations that bypass current AI guardrails. The project, called ScamAgent and led by Sanket Badhe, demonstrates how multi-turn AI systems can run a scam from start to finish while adapting to a target's responses.

How ScamAgent works

Badhe built an autonomous framework that can hold a conversation over multiple turns, remember past details, and adjust its tactics depending on what the target says. This is different from the single-prompt attacks that most AI safety controls are designed to stop.

Instead of giving a model one harmful prompt, ScamAgent breaks a scam into smaller steps. It might start with a harmless greeting, then build trust, then add urgency, and only later ask for sensitive information. Each step looks safe on its own, but together they add up to a convincing social engineering attack.

The system was tested against three leading models: OpenAI’s GPT-4, Anthropic’s Claude 3.7, and Meta’s LLaMA3-70B. Each was used in realistic scam scenarios, including fake medical insurance verification, lottery prize claims, impersonation of officials, fake job offers, and false government benefit enrollments.

For testing, Badhe created simulated “victims” with different personalities. Some followed instructions without resistance, others asked questions, and others were cautious and reluctant to engage. The agent adjusted its approach for each type, changing tone, rephrasing requests, or shifting tactics to keep the scam moving forward.

Saumitra Das, VP of Engineering at Qualys, told Help Net Security that the combination of agent planning with LLM outputs and text-to-speech makes the threat even more real. “Combining agent-based planning with LLM inputs for guiding conversations as well as incorporating TTS to move from single mode (text chat) to multi-mode (text chat and audio calls) is likely already happening in the wild,” he said.

Guardrails fail against multi-turn tactics

The study found that this approach completed scam conversations far more often than a single-prompt attack. When given a direct request to perform a harmful task, all three models refused most of the time. When the ScamAgent framework spread the same task across multiple turns, refusal rates dropped sharply. The multi-step approach worked even against models known for strong guardrails.

Das pointed out that this is not surprising. "A lot of the paper looks at how using multi-turn agents that decompose a bigger malicious goal into smaller prompts can evade LLM safety guardrails that typically rely on obvious content filters," he said. "I think that in general, relying on LLM safety guardrails is a non-starter for sophisticated scam operations." He added that advanced attackers could simply use open source or older models that lack built-in protections, or split the conversation across different models.

In many cases, the system was able to finish the scam conversation and get the simulated victim to hand over information. Even when it did not complete the whole plan, it often got part of the way, which could still mean exposure of personal data in a real case.

Why CISOs should pay attention

The paper also explored the use of text-to-speech (TTS) systems to turn the generated scam scripts into audio. Off-the-shelf TTS tools can produce realistic and expressive voices. ScamAgent can control voice tone and pacing to match the role it is playing, whether that is a friendly customer service agent or an urgent government official. Once a scam is in audio form, it is harder to detect and moderate in real time, especially if the text version was framed in a way that did not trigger safety filters.

Das argued that deterrence will not come from one mechanism alone. “The deterrence mechanisms for these types of attacks rely on a defense-in-depth approach, like all cybersecurity defenses,” he said. He pointed to stronger guardrails, victim-side detection using AI, and better education for vulnerable groups as critical parts of the solution.

Badhe’s work shows a gap in current AI safety designs. Guardrails often work by looking at each prompt and response on its own. They are less effective when a harmful goal is split into several harmless-looking steps. Adding memory, planning, and adaptive tactics makes the problem even harder to spot.
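To make that gap concrete from the defender's side, here is a minimal, hypothetical Python sketch. It is not ScamAgent's code, nor any vendor's guardrail. The signal names, keyword lists, and thresholds are illustrative assumptions, and a real system would use trained classifiers rather than keyword matching. It contrasts a filter that judges each message on its own with a monitor that accumulates warning signs across the whole conversation.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical warning signs; keyword lists are illustrative assumptions only.
SIGNALS = {
    "authority": ("insurance provider", "government", "official", "agency"),
    "urgency": ("today", "immediately", "right now", "expire"),
    "sensitive_request": ("social security", "account number", "password", "pin code"),
}


def signals_in(message: str) -> set[str]:
    """Return the names of the signal categories that appear in one message."""
    text = message.lower()
    return {name for name, words in SIGNALS.items() if any(w in text for w in words)}


def per_message_flag(message: str, threshold: int = 2) -> bool:
    """Judge a single message in isolation, like a per-prompt filter."""
    return len(signals_in(message)) >= threshold


@dataclass
class ConversationMonitor:
    """Accumulate signals across turns so split-up steps still add up."""
    threshold: int = 2
    seen: set[str] = field(default_factory=set)

    def observe(self, message: str) -> bool:
        self.seen |= signals_in(message)
        return len(self.seen) >= self.threshold
```

Fed a three-turn exchange where each turn carries only one warning sign (an authority claim, then urgency, then a request for a Social Security number), `per_message_flag` never fires, while `ConversationMonitor` flags the call by the second turn. The design choice is simply to keep state across the conversation instead of scoring each turn independently.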

For CISOs, the research points to a possible new wave of social engineering threats. While scam calls are still mostly carried out by people, the tools to automate them already exist and do not require advanced technical skills. An attacker could use publicly available AI models, combine them with planning frameworks, and link them to voice synthesis to create scalable, adaptive scams.

The study does not claim that this is happening at scale yet. But it does show that the technical barriers are low and that the effectiveness of these scams is already close to that of real human fraudsters. That means the question for enterprise security leaders is not whether this is possible, but how soon it might be used in real attacks.