Building the missing layers for an internet of agents

Cybersecurity teams are starting to think about how large language model agents might interact at scale. A new paper from Cisco Research argues that the current network stack is not prepared for this shift. The work proposes two extra layers on top of the application transport layer to help agents communicate in a structured way and agree on shared meaning before they act.

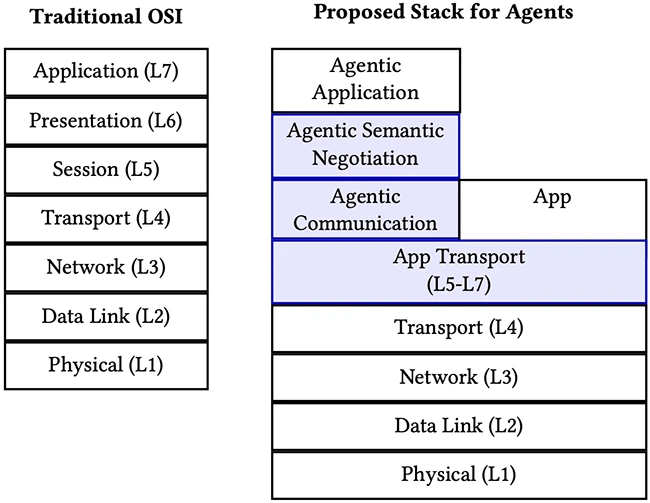

Traditional OSI stack and our proposed network stack for agentic applications. We propose two new layers for agent communication (L8 and L9) above HTTP/2 and HTTP/3, which serve as the Application Transport layer (L7).

Why current agent protocols fall short

The paper notes that agents can already exchange data through protocols such as the Model Context Protocol (MCP) and Agent2Agent (A2A), which support tool calls and structured task exchange. These protocols standardize message formats and give developers a way to pass tasks between agents. However, the research points to a recurring problem: agents can parse messages, but they often lack a shared understanding of what the content represents. A request that mentions a city or a task can be read in several ways, which forces agents into slow clarification loops. Those loops increase inference load and make system behavior unpredictable.

Researchers frame this as a gap in the current network architecture. The traditional OSI and TCP/IP models were built to deliver packets, not to support coordination between autonomous entities that must align on meaning. As the authors put it, the situation resembles the early web, when developers created HTTP to fill the space between transport and application behavior. That ad hoc step eventually became a foundational part of the internet, and the researchers argue that agent systems are now at a similar point of transition.

The push to standardize how agents communicate

The proposed Agent Communication Layer sits above HTTP and focuses on message structure and interaction patterns. It consolidates conventions that have been emerging across several protocols into a common set of building blocks: standardized envelopes, a registry of performatives that define intent, and patterns for one-to-one and one-to-many communication.

The idea is to give agents a dependable way to understand the type of communication taking place before interpreting the content. A request, an update, or a proposal each follows an expected pattern. This helps agents coordinate tasks without guessing the sender’s intention. The layer does not judge meaning. It only ensures that communication follows predictable rules that all agents can interpret.
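The envelope-plus-registry idea can be sketched in a few lines of Python (3.10+). Everything here is a hypothetical illustration, not the paper's wire format: the `Envelope` fields, the performative names in `PERFORMATIVE_REGISTRY`, and the reply rules are assumptions chosen to show how a layer can check the type of a communication without interpreting its content.

```python
import uuid
from dataclasses import dataclass, field

# Hypothetical performative registry: each intent maps to the performatives
# that count as valid replies to it. Illustrative only, not from the paper.
PERFORMATIVE_REGISTRY: dict[str, frozenset[str]] = {
    "request": frozenset({"agree", "refuse", "inform"}),
    "propose": frozenset({"accept", "reject"}),
    "inform": frozenset(),  # an update expects no reply
    "agree": frozenset({"inform"}),
    "refuse": frozenset(),
    "accept": frozenset({"inform"}),
    "reject": frozenset(),
}


@dataclass(frozen=True)
class Envelope:
    """Hypothetical envelope: the structure is checked, the body is not."""
    sender: str
    recipients: tuple[str, ...]
    performative: str
    body: dict
    conversation_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def __post_init__(self) -> None:
        # Reject intents that are not in the shared registry.
        if self.performative not in PERFORMATIVE_REGISTRY:
            raise ValueError(f"unregistered performative: {self.performative!r}")
        if not self.recipients:
            raise ValueError("envelope needs at least one recipient")

    @property
    def pattern(self) -> str:
        # One recipient means one-to-one; several mean one-to-many.
        return "one-to-one" if len(self.recipients) == 1 else "one-to-many"


def is_valid_reply(original: Envelope, reply: Envelope) -> bool:
    """True if `reply` belongs to the same conversation and fits the pattern."""
    return (
        reply.conversation_id == original.conversation_id
        and reply.performative in PERFORMATIVE_REGISTRY[original.performative]
    )


req = Envelope("planner", ("router",), "request", {"task": "plan_route"})
ok = Envelope("router", ("planner",), "agree", {}, conversation_id=req.conversation_id)
bad = Envelope("router", ("planner",), "accept", {}, conversation_id=req.conversation_id)
print(is_valid_reply(req, ok))   # True
print(is_valid_reply(req, bad))  # False: "accept" answers a proposal, not a request
```

Note that `is_valid_reply` never looks at `body`; in this sketch, deciding what the content means is left to a higher layer.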

Giving agents a common way to understand meaning

The next layer addresses what current protocols lack: shared meaning. The research proposes an Agent Semantic Negotiation Layer that lets agents discover a shared context, agree to it, and then validate all message content against it. A context can describe concepts, tasks, and required parameters. Each context is versioned and can be grounded in formal schemas.

Once agents lock a context, they use it for all further communication. The layer checks that messages conform to the agreed definitions and prompts for structured clarification if something is ambiguous. The work shows how this reduces the guesswork inside LLM prompts and allows agents to work in a more deterministic way. It also supports both simple orchestration and broader coordination across groups of agents that share observations or decisions.

When meaning becomes a security concern

By formalizing meaning, the system gains predictability but also introduces fresh security concerns. The paper outlines several new risks. Attackers might inject harmful content that fits the schema but tricks the agent’s reasoning. They might distribute altered or fake context definitions that mislead a population of agents. They might overwhelm a system with repetitive semantic queries that drain inference resources rather than network resources.

To manage these problems, the authors propose security measures that match the new layer. Signed context definitions would prevent tampering. Semantic firewalls would examine content at the concept level and enforce rules about who can use which parts of a context. Rate limits would help control computational load. The paper also notes the need for a trust model similar to certificate authorities so agents can verify which contexts they can safely use.
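The signing and semantic-firewall ideas can be illustrated with Python's standard library. This is a simplified sketch with one stated substitution: the article describes signed context definitions backed by a certificate-authority-like trust model, which would normally mean public-key signatures, but here an HMAC with a shared demo key stands in so the example needs no third-party packages. An HMAC detects tampering but does not let third parties verify who signed. The role-to-concept policy in `FIREWALL_POLICY` is likewise hypothetical.

```python
import hashlib
import hmac
import json

# Assumption: a shared secret stands in for a context publisher's private key.
PUBLISHER_KEY = b"demo-key-not-for-production"


def canonical(context: dict) -> bytes:
    """Serialize deterministically so signer and verifier hash identical bytes."""
    return json.dumps(context, sort_keys=True, separators=(",", ":")).encode()


def sign_context(context: dict, key: bytes = PUBLISHER_KEY) -> str:
    return hmac.new(key, canonical(context), hashlib.sha256).hexdigest()


def verify_context(context: dict, signature: str, key: bytes = PUBLISHER_KEY) -> bool:
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(sign_context(context, key), signature)


# Hypothetical semantic-firewall policy: which roles may use which concepts.
FIREWALL_POLICY: dict[str, set[str]] = {
    "planner": {"Shipment", "Route"},
    "tracker": {"Route"},
}


def firewall_allows(sender_role: str, body: dict) -> bool:
    """Concept-level check: block concepts the sender's role may not use."""
    return body.get("concept") in FIREWALL_POLICY.get(sender_role, set())


context = {
    "name": "city-logistics",
    "version": "1.1",
    "concepts": {"Shipment": ["origin", "destination", "deadline"]},
}
sig = sign_context(context)

# An attacker redistributes an altered definition with the old signature.
tampered = json.loads(json.dumps(context))
tampered["concepts"]["Shipment"].append("bank_account")

print(verify_context(context, sig))   # True
print(verify_context(tampered, sig))  # False
print(firewall_allows("tracker", {"concept": "Shipment"}))  # False
```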

The roadblocks that stand in the way of adoption

The study presents these layers as an additive architecture rather than a replacement. Many of the pieces already exist in earlier protocols, and the proposal tries to unify them. The larger challenges lie in performance, governance, and shared standards. Agents need fast context discovery, along with a common way to distribute and authenticate contexts. Organizations would also need a way to manage context versions and retire flawed ones.

The authors point out that multi-agent systems are already in production for tasks like supply chain planning and collaborative reasoning. As these systems grow, the lack of formal layers for structure and meaning may limit reliability. The proposed approach aims to give developers a foundation that reduces confusion between agents and helps them coordinate complex work in a safe and predictable manner.