Bridging the AI model governance gap: Key findings for CISOs

While most organizations understand the need for strong AI model governance, many are still struggling to close gaps that could slow adoption and increase risk. The findings of a new Anaconda survey of more than 300 AI practitioners and decision-makers highlight security concerns in open-source tools, inconsistent model monitoring, and the operational challenges caused by fragmented AI toolchains.

Security concerns remain high despite validation

Open-source software is central to AI development, but it brings supply chain risks that need to be managed carefully. Most respondents have processes in place to validate Python packages for security and compliance. These range from automated vulnerability scans to maintaining internal package registries and manual reviews.
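As a rough illustration of the internal-registry idea, the sketch below compares the packages installed in a Python environment against an approved list. The `APPROVED` allowlist and its pinned versions are hypothetical placeholders, not something the survey describes. The check also does not replace a vulnerability scanner; it only flags packages that nobody has signed off on.

```python
from importlib.metadata import distributions

# Hypothetical allowlist: package name -> set of approved versions.
# In practice this might be exported from an internal package registry.
APPROVED = {
    "numpy": {"1.26.4"},
    "requests": {"2.32.3"},
}

def installed_packages():
    """Return {name: version} for every distribution in the current environment."""
    result = {}
    for dist in distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken metadata
            result[name.lower()] = dist.version
    return result

def find_unapproved(installed, approved):
    """List (name, version) pairs that are absent from the allowlist."""
    return sorted(
        (name, version)
        for name, version in installed.items()
        if version not in approved.get(name, set())
    )

if __name__ == "__main__":
    for name, version in find_unapproved(installed_packages(), APPROVED):
        print(f"not approved: {name}=={version}")
```

Running a check like this in continuous integration is one way to bring validation into the development workflow instead of leaving it as a separate manual review.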

Even so, security remains the most common AI development risk, cited by 39 percent of respondents. Almost two-thirds of organizations have faced delays in AI deployments due to security concerns, with many reporting that time spent troubleshooting dependency issues cuts into productivity. While most teams feel confident they can remediate vulnerabilities, the frequency of incidents suggests current approaches are not enough to keep pace with the scale and complexity of AI projects.

When asked about top priorities for improving governance, the most common response was better-integrated tools that combine development and security workflows. Respondents also pointed to the need for improved visibility into model components and additional team training.

Model monitoring is uneven across organizations

The survey found strong awareness of the importance of tracking model lineage, with 83 percent of organizations reporting that they document the origins of foundation models and 81 percent keeping records of model dependencies. However, not all of this documentation is comprehensive, and nearly one in five respondents have no formal documentation at all.
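To make "documenting origins and dependencies" concrete, here is a minimal sketch of a lineage record that also reports which fields were left empty. The `ModelLineage` class, its field names, and the example values are all assumptions chosen for illustration; the survey does not prescribe a schema.

```python
from dataclasses import dataclass, field, asdict
import json

@dataclass
class ModelLineage:
    """Hypothetical lineage record for one deployed model."""
    model_name: str
    base_model: str          # foundation model this one derives from
    base_model_source: str   # where the base model was obtained
    dependencies: dict = field(default_factory=dict)  # package -> version

    def missing_fields(self):
        """Return names of fields that were left empty."""
        return [key for key, value in asdict(self).items() if not value]

    def to_json(self):
        """Serialize the record so it can be stored next to the model artifact."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

# Example values are invented for illustration only.
record = ModelLineage(
    model_name="support-ticket-classifier",
    base_model="example-base-model",
    base_model_source="internal model registry",
)
print(record.missing_fields())
print(record.to_json())
```

A record like this makes incomplete documentation visible: an empty `dependencies` field shows up in `missing_fields()` and can be caught before release.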

Performance monitoring shows a similar gap. While 70 percent of respondents have mechanisms in place to detect model drift or unexpected behaviors, 30 percent have no formal monitoring in production. Common practices include automated performance monitoring, alert systems for anomalies, and periodic manual reviews. More mature teams also use A/B testing and retraining schedules, but these approaches are far from universal.
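One common way to automate drift detection is the population stability index (PSI), which compares the distribution of a feature or model score in production against a reference sample. The sketch below is a simplified pure-Python version. The survey does not name PSI or any other technique, and the 0.2 alert threshold is a widely used rule of thumb, adopted here as an assumption.

```python
import math

def psi(expected, actual, bins=10):
    """Population stability index between a reference and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, tune per model

def has_drifted(expected, actual):
    """Flag drift when the PSI exceeds the assumed threshold."""
    return psi(expected, actual) > DRIFT_THRESHOLD

# Synthetic data for illustration: production scores shifted upward by 0.5.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]
print(has_drifted(baseline, baseline), has_drifted(baseline, shifted))
```

A scheduled job could run a check like this on recent production data and feed an alert system, covering the automated monitoring and anomaly alerting that respondents describe.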

The lack of consistent monitoring can leave blind spots that affect both compliance and performance. As AI moves deeper into production environments, the ability to detect and respond to drift will become a critical governance function.

Fragmented toolchains are slowing governance progress

Only 26 percent of organizations report having a highly unified AI development toolchain. The rest are split between partially unified and fragmented setups, with a small but notable portion describing their toolchains as highly fragmented.

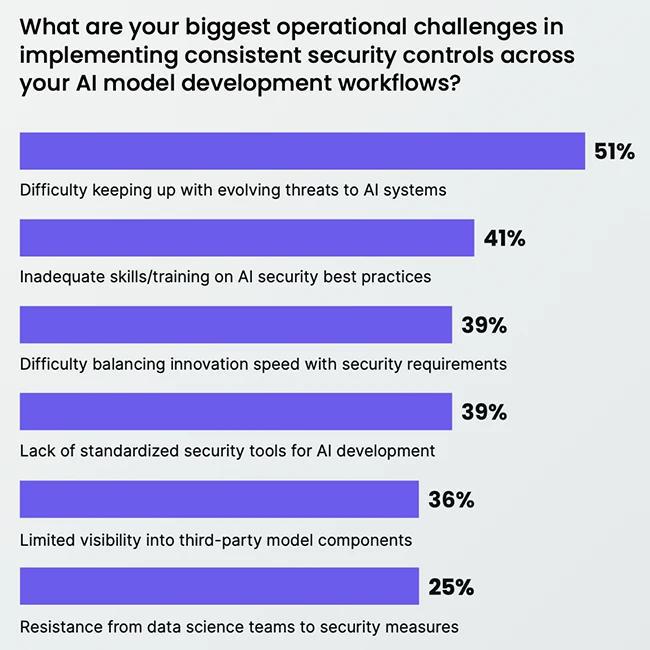

Fragmentation can make governance harder by creating visibility gaps, duplicating effort, and introducing inconsistent security controls. It also increases the risk of shadow IT, where teams use unapproved tools without oversight. When governance processes are layered onto disparate systems, they can become slow and cumbersome, which in turn encourages teams to bypass them.

The survey suggests that unifying tools and processes is also a cultural challenge. Resistance from data science teams to security measures was cited as a key issue by 25 percent of respondents. Integrated platforms that embed governance into everyday workflows can reduce this friction and help align innovation with oversight.