AI’s split personality: Solving crimes while helping conceal them

What happens when investigators and cybercriminals start using the same technology? AI is now doing both: helping law enforcement trace attacks while also being tested for its ability to conceal them. A new study from the University of Cagliari digs into this double-edged role, mapping out how AI is transforming cybercrime detection and digital forensics, and why that is both exciting and a little alarming.

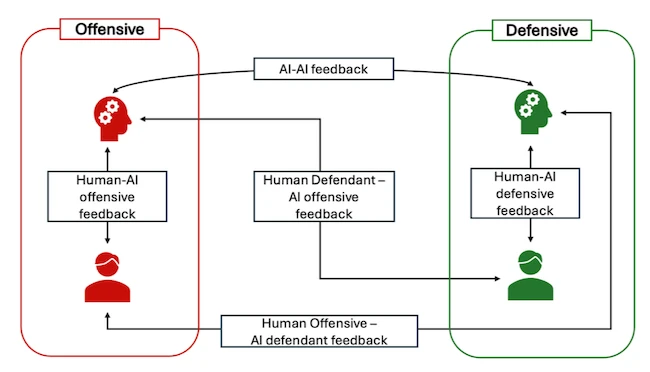

AI system for cybercrime detection based on two models

The shift toward digital forensics

Forensic specialists are expected to stay neutral and to explain their findings in terms that non-technical audiences can understand. AI built into popular forensic tools can surface relevant content, such as images or chat logs, while limiting access to unrelated data, which improves efficiency. In sensitive cases, AI also helps limit investigators’ exposure to distressing material.

Still, the systems are not perfect. The researchers describe how AI models struggle with manipulated media such as deepfakes. They also note that training datasets built from genuine investigations remain too limited. Cross-jurisdictional cooperation is slowed by differing regulations, which restricts the sharing of forensic insights.

Using AI to detect cybercrime

AI can learn to recognize patterns of malicious activity and spot threats before they spread. These systems need constant retraining to adapt to new tactics and attack variations.
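As a rough illustration of detection that keeps adapting, the sketch below uses a simple statistical baseline rather than a trained ML model. It learns the normal rate of some event (here, failed logins per minute, an assumed example metric) and flags values far outside it. Calling `update` on newly confirmed benign data stands in for retraining. The class name, threshold, and metric are all hypothetical.

```python
import math


class AdaptiveBaseline:
    """Flags values far from a running mean; the baseline keeps learning.

    A statistical stand-in for an ML detector (hypothetical example).
    """

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold  # z-score above which a value is flagged
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations (Welford's algorithm)

    def update(self, value: float) -> None:
        """Fold a value confirmed as benign into the baseline (the "retraining" step)."""
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)

    def std(self) -> float:
        """Sample standard deviation of the benign history."""
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_anomalous(self, value: float) -> bool:
        if self.n < 2:
            return False  # too little history to judge
        sd = self.std()
        if sd == 0.0:
            return value != self.mean  # constant baseline: any change stands out
        return abs(value - self.mean) / sd > self.threshold


# Usage: learn a normal rate of failed logins, then test new observations.
detector = AdaptiveBaseline(threshold=3.0)
for count in [2, 3, 1, 2, 4, 3, 2, 3]:
    detector.update(count)
print(detector.is_anomalous(40))  # True
print(detector.is_anomalous(3))   # False
```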

GenAI can create synthetic attack data to make detection models more accurate. The researchers warn that such data should be reviewed by expert human analysts to guard against hallucination: without supervision, AI might produce data that looks valid but lacks substance.
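A minimal sketch of that human-in-the-loop gate, assuming a placeholder generator (random values rather than a real generative model) and a review callback that stands in for an analyst’s decision. Only samples the reviewer explicitly approves reach the training set. All names here are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticSample:
    features: dict
    label: str
    approved: bool = False  # set only through analyst review


def generate_bruteforce_samples(n: int, seed: int = 0) -> list[SyntheticSample]:
    """Placeholder for a generative model: emits synthetic brute-force login records."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        features = {
            "failed_logins_per_min": rng.randint(20, 200),
            "distinct_usernames": rng.randint(1, 50),
        }
        samples.append(SyntheticSample(features, label="bruteforce"))
    return samples


def build_training_set(samples, analyst_review):
    """Keep only samples that the reviewer (a human, in practice) approves."""
    training = []
    for s in samples:
        s.approved = bool(analyst_review(s))
        if s.approved:
            training.append(s)
    return training


# Usage: the lambda simulates an analyst rejecting implausibly extreme records.
candidates = generate_bruteforce_samples(50, seed=1)
kept = build_training_set(candidates, lambda s: s.features["failed_logins_per_min"] <= 150)
print(f"{len(kept)} of {len(candidates)} synthetic samples approved")
```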

Chatbots powered by LLMs could also support investigators. Properly trained, they could act as simulated online identities to gather intelligence safely.

Since human error remains a weak point, the study advocates more realistic training. Capture the Flag exercises teach teams to detect and contain simulated attacks. GenAI can create new scenarios or act as the opponent, forming a loop between defensive and offensive AI, with humans involved, that strengthens both systems and staff.

GenAI raises new questions about authenticity

The rise of GenAI has created new doubts about the authenticity of digital evidence. Some defendants have already claimed that videos or images used in court were artificially generated. The study suggests that watermarking or metadata tagging could help identify whether files were created with AI.
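One way metadata tagging could work, sketched under loose assumptions: the generating tool attaches a signed provenance record containing a hash of the file, and a verifier checks both the signature and the hash. This is a simplified stand-in, not any particular standard. The shared HMAC key is a hypothetical shortcut where real provenance schemes would use public-key signatures, and the field names are illustrative.

```python
import hashlib
import hmac
import json

# Hypothetical shared key; real schemes would sign with a private key instead.
SIGNING_KEY = b"demo-key-held-by-the-generator"


def tag_content(content: bytes, generator: str) -> dict:
    """Create a provenance record declaring the content AI-generated."""
    record = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    payload = json.dumps(record, sort_keys=True).encode()
    record["signature"] = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_tag(content: bytes, record: dict) -> bool:
    """Check that the record is untampered and actually describes this content."""
    claimed = {k: v for k, v in record.items() if k != "signature"}
    payload = json.dumps(claimed, sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record.get("signature", "")):
        return False  # record was altered or signed with a different key
    return claimed.get("sha256") == hashlib.sha256(content).hexdigest()
```

A limitation worth keeping in mind: metadata can be stripped from a file, so a missing tag proves nothing about authenticity; only a valid tag carries information.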

Another approach is deep structural analysis, which compares the fine details of digital media such as pixels, sound frequencies, or frame timing. These patterns can reveal inconsistencies that signal synthetic content. Data from such analysis can also be used to train forensic AI models to recognize false media more accurately.
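Frame timing is one of the signals mentioned above. As a hedged sketch, assuming per-frame timestamps have already been extracted from the video container, the function below flags frames whose spacing departs sharply from the typical interval, which can hint at spliced or synthesized segments. The function name and the 25% tolerance are arbitrary illustrative choices, and a real analysis would combine many such signals.

```python
from statistics import median


def irregular_frame_gaps(timestamps_ms, tolerance=0.25):
    """Return indices of frames whose gap to the previous frame deviates from
    the median gap by more than `tolerance` (a fraction of the median)."""
    gaps = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if not gaps:
        return []  # fewer than two frames: nothing to compare
    typical = median(gaps)
    return [
        i + 1  # gap i sits between frame i and frame i + 1
        for i, gap in enumerate(gaps)
        if abs(gap - typical) > tolerance * typical
    ]


# Usage: roughly 30 fps footage with one suspicious jump before frame 5.
print(irregular_frame_gaps([0, 33, 67, 100, 133, 200, 233, 267]))  # [5]
```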

Ethics, privacy, and human factors

AI systems trained on investigation data must protect privacy and prevent bias. The study calls for strict anonymization, secure data storage, and collaboration between international agencies to ensure balanced and representative datasets.
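A minimal sketch of one anonymization step, assuming email addresses are the identifiers to protect. Each address is replaced with a keyed HMAC token, so the same person maps to the same token across records (preserving links useful for analysis) without exposing the address. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-identify a suspected address by hashing it. The regex, key, and token format are illustrative assumptions.

```python
import hashlib
import hmac
import re

# Simplified email pattern; real data would need broader identifier coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(text: str, key: bytes) -> str:
    """Replace each email address with a stable, keyed token."""

    def token(match: re.Match) -> str:
        # Lowercase first so case variants of one address share a token.
        digest = hmac.new(key, match.group(0).lower().encode(), hashlib.sha256).hexdigest()
        return f"<user:{digest[:12]}>"

    return EMAIL_RE.sub(token, text)


# Usage: both records now reference the same opaque token.
key = b"agency-secret"  # hypothetical key, stored separately from the data
print(pseudonymize("Contact Alice@Example.com now", key))
print(pseudonymize("alice@example.com replied", key))
```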

Psychological understanding is also important. Knowing how people respond to phishing or social engineering attempts can help shape stronger defenses.

Studying how attackers plan and act can also help train AI to anticipate and recognize those behaviors.

How AI fits into forensic work

Digital forensics follows four main stages: collection, examination, analysis, and reporting. The study outlines how AI could assist each one.

In the collection stage, computer vision could help investigators locate and document devices at a scene, automatically recording them for the chain of custody.
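For the chain-of-custody part, a hash-chained log is one simple way to make records tamper-evident. The sketch below assumes device detections (for example, from a computer vision model, which is not shown) arrive as plain item descriptions. Each entry stores the hash of the previous one, so editing or deleting an earlier entry breaks verification. Field names and functions are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(entry: dict) -> str:
    """Hash every field of an entry except its own hash."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_entry(log: list, item: str, action: str, officer: str) -> dict:
    """Append a custody entry linked to the hash of the previous entry."""
    entry = {
        "item": item,
        "action": action,
        "officer": officer,
        "time": datetime.now(timezone.utc).isoformat(),
        "prev": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _entry_hash(entry)
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Return False if any entry was edited, reordered, or removed mid-chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(entry):
            return False
        prev = entry["hash"]
    return True


# Usage: record detected devices, then check the log's integrity.
custody = []
add_entry(custody, "phone-01 (detected by camera)", "seized", "Officer A")
add_entry(custody, "laptop-02 (detected by camera)", "seized", "Officer A")
print(verify_log(custody))  # True
```

Truncating the final entries is not detectable from the log alone; periodically recording the latest hash somewhere independent closes that gap.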

In examination, LLMs could act as assistants, helping analysts follow procedures and document each action.

During analysis, machine learning could uncover hidden data and identify anti-forensics methods such as steganography.
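The researchers propose machine learning for this stage. As a simpler, named stand-in, the sketch below computes the classic chi-square statistic from Westfeld and Pfitzmann’s attack on sequential least-significant-bit (LSB) embedding. It assumes 8-bit sample values (such as pixel channel bytes) have already been read from the image. Embedding random bits tends to equalize the counts of each value pair (2k, 2k+1), so an unusually small statistic relative to the degrees of freedom is suspicious. Statistics like this could serve as input features for a learned detector, but that is an assumption, not something the study specifies.

```python
from collections import Counter


def lsb_pair_chi_square(samples):
    """Chi-square statistic over value pairs (2k, 2k+1) of 8-bit samples.

    Returns (statistic, degrees_of_freedom). LSB replacement with random
    data pushes each pair toward equal counts, driving the statistic down.
    """
    counts = Counter(samples)
    stat = 0.0
    pairs = 0
    for k in range(128):
        even, odd = counts[2 * k], counts[2 * k + 1]
        expected = (even + odd) / 2  # count expected if the pair were equalized
        if expected == 0:
            continue  # skip pairs that never occur
        stat += (even - expected) ** 2 / expected
        pairs += 1
    return stat, max(pairs - 1, 0)


# Usage: balanced pairs (stego-like) versus strongly unbalanced pairs (cover-like).
print(lsb_pair_chi_square([10] * 50 + [11] * 50 + [20] * 30 + [21] * 30))  # (0.0, 1)
print(lsb_pair_chi_square([10] * 100 + [11] * 10 + [20] * 60 + [21] * 5))
```

This test targets LSB replacement specifically and can miss other embedding methods.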

In reporting, AI could create readable summaries for legal audiences and clarify technical terms.

The researchers emphasize that human oversight is essential. Analysts must confirm every conclusion before presenting it in a legal setting.
