Waiting for AI superintelligence? Don’t hold your breath

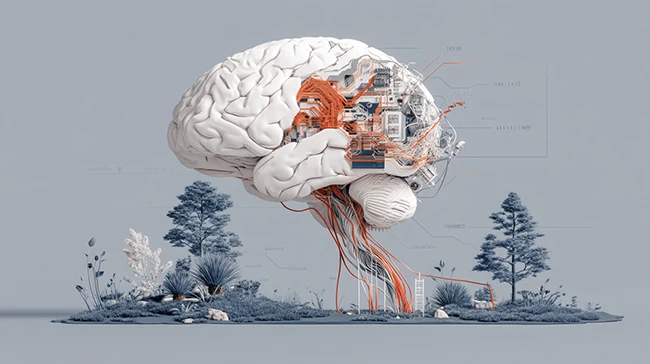

AI’s impact on systems, security, and decision-making is already permanent. Superintelligence, often referred to as artificial superintelligence (ASI), describes a theoretical stage in which AI capability exceeds human cognitive performance across domains. Whether current systems are progressing toward cybersecurity superintelligence remains uncertain.

AI superintelligence: Benefits and risks

ASI is still a theoretical concept, but it comes up often in discussions of future advances in technology. That is why questions about how it could be controlled, and what it could mean for people and society, keep surfacing.

It is often described as a tireless, extremely capable intelligence, akin to a near-perfect supercomputer that can process large volumes of information with high speed and precision. With that kind of capability, people imagine ASI helping humans make better decisions and tackle problems across healthcare, finance, research, government, and other industries.

It could also reduce mistakes in areas where errors are costly, such as software development and risk management. Current tools offer an early indication: Dropzone found that AI agents help analysts work faster and more accurately during alert investigations, without requiring major changes to existing workflows.

At the same time, wider use introduces security threats and operational risks. Systems with very high capability may be difficult to supervise or predict. Some analysts warn that AI could develop self-directed behavior, leading to outcomes that were not planned and that could affect public safety and long-term stability.

In military and defense settings, this technology could support the development of autonomous weapons and increase the destructive scope of conflict. It could also be used for surveillance, large-scale data collection, and cybercrime. In one reported case, an attacker used the agentic AI coding assistant Claude Code for nearly all steps of a data extortion operation that targeted at least 17 organizations across a variety of economic sectors.

AI slips into the backbone of enterprises

AI is spreading faster than any major technology in history, according to a Microsoft report, and enterprise environments are feeling the impact in real time. Adoption has outpaced many security programs, embedding AI directly into daily workflows, industrial systems, and core infrastructure. With some AI services reaching hundreds of millions of users every week, these tools have become part of the operational fabric.

That expansion also explains why conversations about advanced and even superintelligent AI are no longer confined to science fiction. The idea of systems that outperform top humans across nearly every form of reasoning is increasingly treated as a near-term possibility, with industry leaders such as OpenAI CEO Sam Altman and Meta CEO Mark Zuckerberg publicly suggesting it could arrive within the next few years.

Technology leaders express differing views on the future development of superintelligence. Some argue for stronger regulation or question whether efforts to build conscious AI should continue at all.

A prominent AI researcher recently revised earlier predictions about existential risk, placing truly autonomous and self-improving systems further out on the timeline.

“Maybe the best AI ever is the AI that we have right now. But we really don’t think that’s the case. We think it’s going to keep getting better,” said Jared Kaplan, Chief Science Officer at Anthropic.

Security spending patterns reflect that sense of acceleration. Arkose Labs reports that a growing share of security budgets is being directed toward AI-powered monitoring, detection, and response, driven by the belief that human-led defenses cannot match the speed of threats that already operate at machine scale.

Underlying all of this is a shared concern among researchers about how quickly AI capabilities are advancing. Systems are improving so fast that existing defenses often struggle to adapt before new risks emerge, widening the gap between innovation and protection. According to Aikido Security, most organizations use AI to write production code, and many have seen new vulnerabilities appear because of it.

Human involvement remains central to cybersecurity AI superintelligence

Recent academic research argues that the future of cyber defense will rely on AI systems operating under human supervision instead of complete automation. Cybersecurity tools have progressed in stages: from AI that supports human experts, to systems that automate tasks and operate at machine speed, to models that incorporate structured strategic reasoning. The findings show that advanced AI can match or exceed human performance in speed and tactical execution, while strategic reasoning and governance still depend on human-defined objectives, constraints, and oversight.

Progress toward ASI depends on the development of Artificial General Intelligence (AGI), meaning systems that can understand the world and solve problems with the same flexibility as people. Getting there would likely require progress in several areas, including LLMs trained on large datasets to work with language, multisensory AI that can handle text, images, and audio, more advanced neural network designs, neuromorphic hardware inspired by the brain, algorithms based on evolutionary ideas, and AI systems that can generate code.

Ultimately, whether or not cybersecurity ever reaches true superintelligence, organizations must assume AI-driven systems will continue to outpace human speed and scale, making governance, human oversight, and resilient security design essential.