Agentic AI puts defenders on a tighter timeline to adapt

Security teams know that attackers rarely wait for defenders to be ready. The latest AI Maturity in Cybersecurity Report from Arkose Labs shows how quickly the threat landscape is shifting and how slowly organizations can respond in comparison. Attackers test new automation, defenders invest in new tools and the timeline between the two keeps shrinking.

Threats expand while defenses hold steady

Among the attacks organizations report, automated tools, human-driven fraud and early forms of agentic AI appear in similar proportions. The distribution suggests attackers have a broad range of options they can switch between as soon as a control becomes effective. No single defensive layer blocks enough activity to give teams breathing room.

The majority of organizations report heavy losses even after raising their defensive investments. The report urges security leaders to think in terms of revenue impact rather than nominal losses and to focus on reducing the compounding effects that appear when financial, operational and reputational issues interact.

Agentic AI is not yet mature in adversarial contexts, but it is evolving quickly and gives attackers a way to automate planning steps that once required human effort. Security teams that rely on long project cycles risk missing the early window to adjust.

Spending grows faster than impact

Enterprises are expanding their AI-centered security budgets at a faster pace than expected. Many teams dedicate a large share of their overall security investment to AI-powered monitoring, detection and response. This reflects both urgency and a belief that automation is the only viable answer to threats that operate at machine speed.

Organizations want their teams to understand AI-based techniques well enough to operate and tune the tools they deploy, and many enterprises have already started structured training programs for their staff. Despite this push, measurable improvements remain uneven. About half of organizations see gains from AI-driven bot defense, threat detection or phishing protection. The other half continue to struggle with integration, tuning or operational alignment.

This lag shows why confidence levels in AI capabilities can rise even while outcomes remain flat. Teams feel better equipped because they have new tools and new training, but the full value appears only when deployments are mature.

Vendor strategy shifts toward adaptation rather than features

There is a notable shift in how enterprises evaluate vendors. Instead of focusing on feature checklists, they prioritize the ability to adapt to new threats, produce timely intelligence and adjust defenses without long upgrade cycles. Organizations see vendors as operational partners rather than external suppliers. This change reflects pressure from attackers who adjust faster than internal teams can build or customize new capabilities.

Deployment friction continues to fall, which raises the value of acquired tools and makes enterprises more willing to buy instead of build. This trend is strongest in areas where adversaries are already experimenting with automation that traditional tooling cannot observe or stop.

Agentic AI drives the next preparedness gap

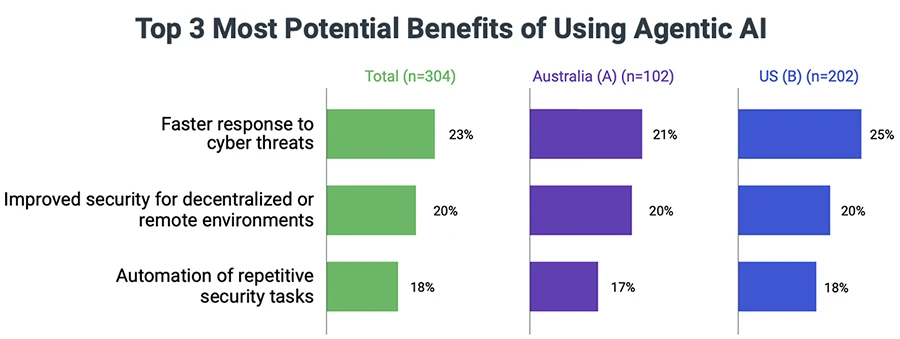

Defensive adoption of agentic AI is high, since teams use it for faster response, pattern analysis and workflow execution. But defensive maturity around malicious agentic AI remains far behind. Organizations use agentic AI to enhance their own security operations even though they have not yet built controls that detect or restrict hostile autonomous activity.

Security leaders believe agentic AI attacks will reach critical impact in a short timeframe. This expectation drives hasty deployments and creates a strategic dilemma. Teams can either deploy imperfect solutions or wait for complete evaluations and risk giving attackers an advantage in the meantime. The report indicates that organizations choose early deployment and intend to refine their strategy over time.

At the same time, concern about adversarial use is widespread. The distinction between securing one's own agents and facing hostile ones matters: providers can secure their own tools, but they cannot prevent attackers from assembling their own agents using public models or custom training data.

Know your agent becomes a needed control

Consumer-controlled automation is growing, which forces enterprises to separate legitimate agent activity from malicious impersonation. Nearly all respondents say this distinction is important. The details vary across organizations, but most require some form of authorization or oversight for agent activity initiated by customers.

Signals that traditionally distinguish bots from humans do not apply when the automation is legitimate. Security teams instead face questions about permission, identity, provenance and scale. These questions form the basis of a new category of controls often described as Know Your Agent.

“Most organizations lack the tools to distinguish between legitimate and malicious automation,” said Kevin Gosschalk, CEO of Arkose Labs. “This study underscores the urgency of building robust identification frameworks, as the ability to navigate these challenges will define the leaders of tomorrow’s cybersecurity landscape.”

Teams are building dedicated workflows for agent traffic and expanding monitoring to track how these agents behave over time. The leading challenges involve verifying authorization, identifying impersonation attempts and managing the volume of agent activity as adoption grows. These areas will shape enterprise security design as consumer automation becomes common.