Microsoft spots LLM-obfuscated phishing attack

Cybercriminals are increasingly using AI-powered tools and (malicious) large language models to create convincing, error-free emails, deepfakes, online personas, lookalike/fake websites, and malware.

There’s even been a documented instance of an attacker using the agentic AI coding assistant Claude Code (along with Kali Linux) for nearly all steps of a data extortion operation.

More recently, Microsoft Threat Intelligence spotted and blocked an attack campaign delivering an LLM-obfuscated malicious attachment.

The phishing campaign and the LLM-obfuscated payload

The attackers used a compromised small business email account to send messages that looked like notifications to view a shared file.

Users who downloaded and opened the file – ostensibly a PDF, but actually an SVG (Scalable Vector Graphics) file – were redirected to a “CAPTCHA prompt” web page and then likely to a page created to harvest credentials.

What sets this attack apart is how the SVG file attempted to hide its malicious behavior: Rather than using encryption for obfuscating the content, the attackers disguised the payload with business language.

SVG files are popular with attackers because they are text-based and can embed JavaScript and other dynamic content. The format also lets attackers include “invisible” elements and encoded attributes and delay script execution, all of which help them evade static analysis and sandboxing.
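To make the point about embedded dynamic content concrete, here is a minimal Python sketch of a static check that lists script-capable content in an SVG file. It is an illustration only, not Microsoft's detection logic: the function names and the sample markup are hypothetical, and a production scanner would need to cover far more cases (external references, `<foreignObject>`, encoded data URIs, and so on).

```python
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Strip the '{namespace}' prefix that ElementTree puts on names."""
    return tag.rsplit("}", 1)[-1]


def find_active_content(svg_text: str) -> list[str]:
    """Return a description of each script-capable construct found.

    Note: for untrusted files, a hardened parser such as defusedxml is a
    safer choice than the standard library parser used here.
    """
    findings = []
    root = ET.fromstring(svg_text)
    for el in root.iter():
        name = local_name(el.tag)
        if name == "script":
            findings.append("script element")
        for attr, value in el.attrib.items():
            attr_name = local_name(attr).lower()
            # Event handler attributes such as onload or onclick run JavaScript.
            if attr_name.startswith("on"):
                findings.append(f"event handler {attr_name} on <{name}>")
            # Links can also carry script through javascript: URLs.
            if attr_name == "href" and value.strip().lower().startswith("javascript:"):
                findings.append(f"javascript: URL on <{name}>")
    return findings


# Hypothetical sample, not taken from the campaign described here.
SAMPLE_SVG = (
    '<svg xmlns="http://www.w3.org/2000/svg" onload="init()">'
    "<script>var a = 1;</script>"
    '<rect width="10" height="10"/>'
    "</svg>"
)

if __name__ == "__main__":
    for finding in find_active_content(SAMPLE_SVG):
        print(finding)
```

A check like this only shows that a file can run code, not that it is malicious, so it is best treated as one signal among several.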

For this campaign, the attackers padded the file with elements for a supposed Business Performance Dashboard, complete with chart bars and month labels. These elements were invisible to the user, as the attackers set their opacity to zero and their fill to transparent.
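The hiding technique described above (zero opacity, transparent fill) is easy to look for statically. The following Python sketch flags elements whose opacity is zero or whose fill is transparent, whether set as attributes or inside a `style` attribute. The helper names and the sample markup are assumptions made for illustration; the sketch ignores CSS stylesheets and inherited properties, and legitimate SVGs also use invisible elements, so a hit is a hint rather than a verdict.

```python
import xml.etree.ElementTree as ET


def effective_props(el: ET.Element) -> dict[str, str]:
    """Merge presentation attributes with inline 'style' declarations."""
    props = {k.lower(): v for k, v in el.attrib.items()}
    for decl in el.attrib.get("style", "").split(";"):
        if ":" in decl:
            key, value = decl.split(":", 1)
            props[key.strip().lower()] = value.strip()
    return props


def is_invisible(el: ET.Element) -> bool:
    """True if the element has zero opacity or a transparent fill."""
    props = effective_props(el)
    try:
        zero_opacity = float(props.get("opacity", "1")) == 0.0
    except ValueError:
        zero_opacity = False  # e.g. percentage values are not handled here
    transparent_fill = props.get("fill", "").strip().lower() == "transparent"
    return zero_opacity or transparent_fill


def invisible_elements(svg_text: str) -> list[tuple[str, str]]:
    """Return (tag, id) pairs for every invisible element in the file."""
    root = ET.fromstring(svg_text)
    return [
        (el.tag.rsplit("}", 1)[-1], el.attrib.get("id", ""))
        for el in root.iter()
        if is_invisible(el)
    ]


# Hypothetical markup loosely modeled on the "dashboard" padding.
SAMPLE_SVG = """<svg xmlns="http://www.w3.org/2000/svg">
  <rect id="bar1" opacity="0" fill="transparent" width="10" height="40"/>
  <text id="label" style="opacity: 0; fill: transparent">Jan</text>
  <circle id="dot" r="5" fill="red"/>
</svg>"""

if __name__ == "__main__":
    print(invisible_elements(SAMPLE_SVG))
```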

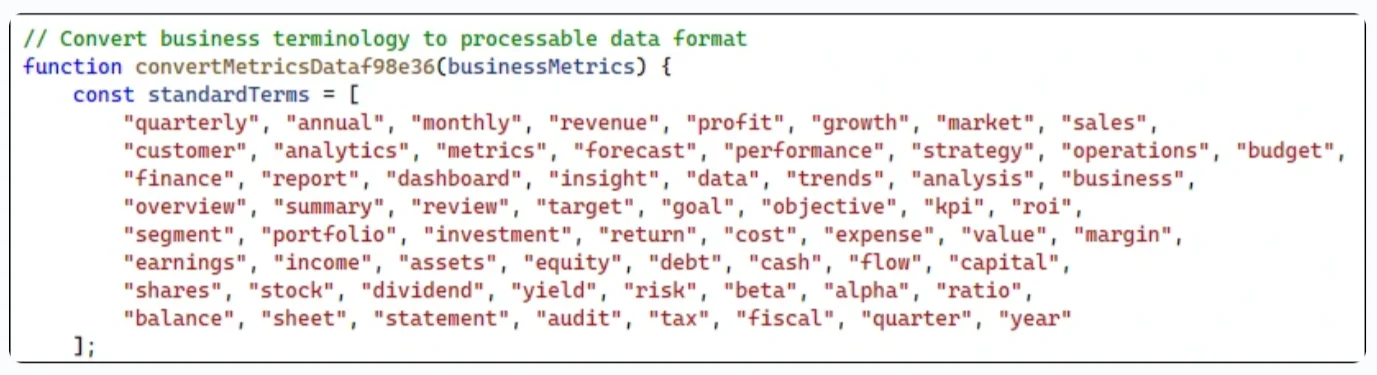

“Within the file, the attackers encoded the malicious payload using a long sequence of business-related terms. Words like revenue, operations, risk, or shares were concatenated into a hidden data-analytics attribute of an invisible [element],” Microsoft’s threat analysts explained.

“Instead of directly including malicious code, the attackers encoded the payload by mapping pairs or sequences of these business terms to specific characters or instructions. As the script runs, it decodes the sequence, reconstructing the hidden functionality from what appears to be harmless business metadata.”

Business-related terms converted into malicious code (Source: Microsoft)
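The encoding idea in the quote, pairs of business terms standing in for characters, can be shown with a toy codebook. The Python sketch below is not the attackers' scheme, whose exact mapping is not given here. The term list beyond the four words quoted above, the alphabet, and the pairing order are all invented for illustration, and the decoder only rebuilds a plain string without executing anything.

```python
from itertools import product

# The first four terms appear in Microsoft's description; the rest are
# hypothetical fillers added so the codebook has enough pairs.
TERMS = [
    "revenue", "operations", "risk", "shares", "growth",
    "margin", "forecast", "assets", "audit", "equity",
]

# Characters the toy codebook can represent (an assumption for this demo).
ALPHABET = "abcdefghijklmnopqrstuvwxyz ./:-_0123456789"

# Assign one ordered pair of terms to each character.
PAIR_TO_CHAR = dict(zip(product(TERMS, repeat=2), ALPHABET))
CHAR_TO_PAIR = {ch: pair for pair, ch in PAIR_TO_CHAR.items()}


def encode(text: str) -> str:
    """Turn text into a run of innocuous-looking business terms."""
    words = []
    for ch in text:
        words.extend(CHAR_TO_PAIR[ch])
    return " ".join(words)


def decode(blob: str) -> str:
    """Rebuild the original text by reading the terms two at a time."""
    words = blob.split()
    if len(words) % 2:
        raise ValueError("expected an even number of terms")
    return "".join(
        PAIR_TO_CHAR[(first, second)]
        for first, second in zip(words[0::2], words[1::2])
    )


if __name__ == "__main__":
    blob = encode("q3 report")
    print(blob)          # looks like harmless business metadata
    print(decode(blob))  # the hidden string
```

The output of `encode` contains nothing but dictionary words, which is why content like this does not resemble typical encrypted or encoded payloads. At the same time, a long, repetitive run of terms from a small vocabulary inside a hidden attribute is itself unusual and can serve as a detection signal.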

The final payload can fingerprint the browser/system and, if the “conditions” are right, redirect the potential victim to a phishing page.

Detecting LLM use could help detect attacks?

Microsoft used Security Copilot, its AI cybersecurity assistant, to analyze the SVG file and assess whether it was written by a human or generated by AI.

The tool flagged several artifacts that strongly suggested LLM generation, such as overly descriptive variable and function names, an over-engineered code structure, boilerplate comments, unnecessary code elements, and formulaic obfuscation techniques typical of LLM-generated code.

Based on these traits, the threat analysts concluded that the code was very likely synthetic, generated by an LLM or a tool using one.

Luckily, blocking phishing attempts involves more than simply deciding whether a payload is harmful. In addition, AI-generated obfuscation often introduces synthetic artifacts, and these can become new detection signals, the analysts noted.

It’s therefore possible that the use of LLMs could occasionally make attacks easier, not harder, to detect.
