The long conversations that reveal how scammers work

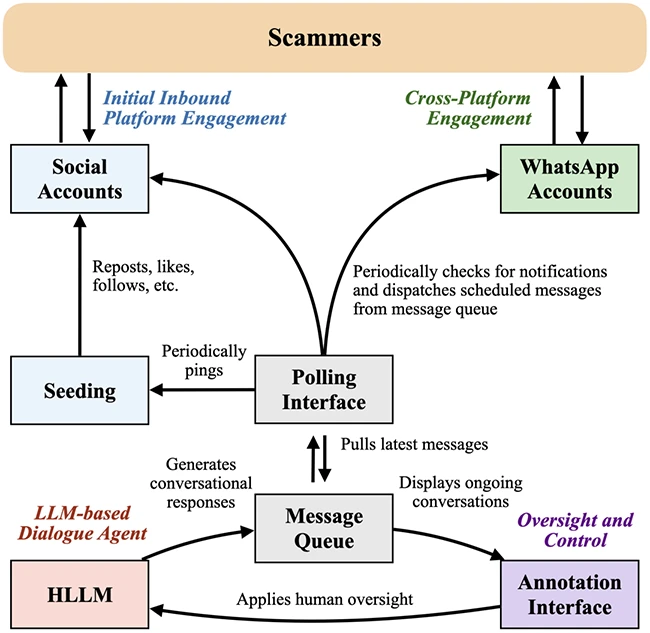

Online scammers often take weeks to build trust before making a move, which makes their work hard to study. A research team from UC San Diego built a system that does the patient work of talking to scammers at scale, and the results offer a look at how long-game fraud unfolds. Their system, called CHATTERBOX, combines synthetic personas, an LLM-driven conversational engine, and human oversight to gather conversations that stretch across platforms and formats.

CHATTERBOX system architecture

A window into long scam cycles

The project ran for seven weeks and drew in 4,725 scammer accounts. The researchers then held conversations with 568 of them. The average conversation lasted 7.8 days, and the longest stretched to 46 days. Almost half of the conversations exceeded 26 messages, and the longest had 487 messages.

Scammers rely on this slow cadence to build trust, and the study shows how predictable the progression is when viewed at scale. Early messages tend to focus on small talk, harmless questions, light personal details, and daily routines. These early exchanges often contain subtle checks to see whether the target is human. Some scammers ask directly. “By the way, there are a lot of fake people here, are you a real person” is one of the lines captured in the study.

Requests to move platforms come quickly. The team reports that most scammers tried to shift the conversation to another service. WhatsApp was the most common destination, and these requests usually appeared within roughly the first 24 messages.

How scammers introduce money

Money does not come up early. The system logged the first mentions of payment methods, crypto wallets, or financial apps at a median of 75 messages into a conversation. That distance between the greeting and the attempted cash-out is the core challenge in studying long-game fraud. In the meantime, scammers send photos of meals or walks, talk about family, and bring up current events to lay the groundwork for later requests.
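To make the "median of 75 messages" figure concrete, the sketch below shows one way such a measurement could be computed from a set of conversation logs: find the position of the first message that mentions a payment term in each conversation, then take the median across conversations that mention payment at all. This is not the authors' method. The keyword list, the `first_payment_index` and `median_payment_onset` helpers, and the plain list-of-strings log format are all hypothetical assumptions for illustration.

```python
import re
from statistics import median

# Hypothetical keyword pattern; the study's actual detection method is not described in this article.
PAYMENT_PATTERN = re.compile(
    r"\b(wallet|bitcoin|btc|usdt|crypto|paypal|cash ?app|venmo|zelle|bank transfer)\b",
    re.IGNORECASE,
)


def first_payment_index(messages):
    """Return the 1-based position of the first message that mentions a payment term, or None."""
    for position, text in enumerate(messages, start=1):
        if PAYMENT_PATTERN.search(text):
            return position
    return None


def median_payment_onset(conversations):
    """Median first-mention position across conversations that mention payment at all."""
    onsets = [i for i in (first_payment_index(c) for c in conversations) if i is not None]
    return median(onsets) if onsets else None


if __name__ == "__main__":
    # Toy conversations (each is a list of message texts in order).
    logs = [
        ["hi", "how was lunch", "open your Bitcoin wallet"],
        ["hello", "nice weather", "send it by PayPal"],
        ["good morning", "my daughter starts school today"],  # never mentions payment
    ]
    print(median_payment_onset(logs))
```

Conversations that never reach a payment request are excluded rather than counted as zero, since including them would pull the median toward the start of the conversation and misrepresent when the cash-out attempt actually arrives.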

Scammers often sent images. Audio and video were less common, but when they were used, they tended to appear at moments when scammers wanted to strengthen the sense of presence. The researchers found that 20 percent of conversations included selfie requests, and more than half of those requests took place on WhatsApp.

Screenshots of crypto apps or payment screens emerged close to the point when scammers asked for money. These later-stage files often included QR codes or instructions for sideloaded apps. One example in the paper walks through an exchange in which the scammer urges the persona to install a trading app from a link, then follows up with emotional pressure when the persona hesitates.

How this research supports security strategy

The authors built CHATTERBOX to support research and later analysis, and the patterns they uncovered are useful for anyone who must defend users or employees from social engineering. Long-haul scams do not rely on high urgency. They rely on comfort, familiarity, and patience. That makes them a different challenge from technical support scams or prize scams. Defenders need to detect slow-moving risk signals before money leaves accounts.

The study also highlights the challenge of scale. Manual research that covers weeks of dialog is difficult to sustain. The researchers address this by pairing an LLM with a workflow that brings in human reviewers at key points, which produced a steady flow of data at little cost. As the authors put it, “The primary function is to automatically collect unsolicited scam attempts and successfully engage with these parties on an ongoing and controllable basis.” They point out that this kind of system could later be used for coaching or for training classifiers, as well as for studying how tactics shift over time.

Scammers also adapt, which raises questions about future detection. The team notes that they already saw hints of AI use by scammers, including AI-generated voice clips and refined outreach messages. The paper also points to the people behind these accounts. As the authors write, “There is an array of evidence indicating that this scamming labor pool is largely composed of forced labor.”
